Design Gateway Hot! News

December 2024 (2)


Driving Scalable AI Infrastructure with FPGA-based TCP Offload Engine and 200G/400G Ethernet

Learn more about TOE200G-IP for Altera | Learn more about TOE200G-IP for AMD

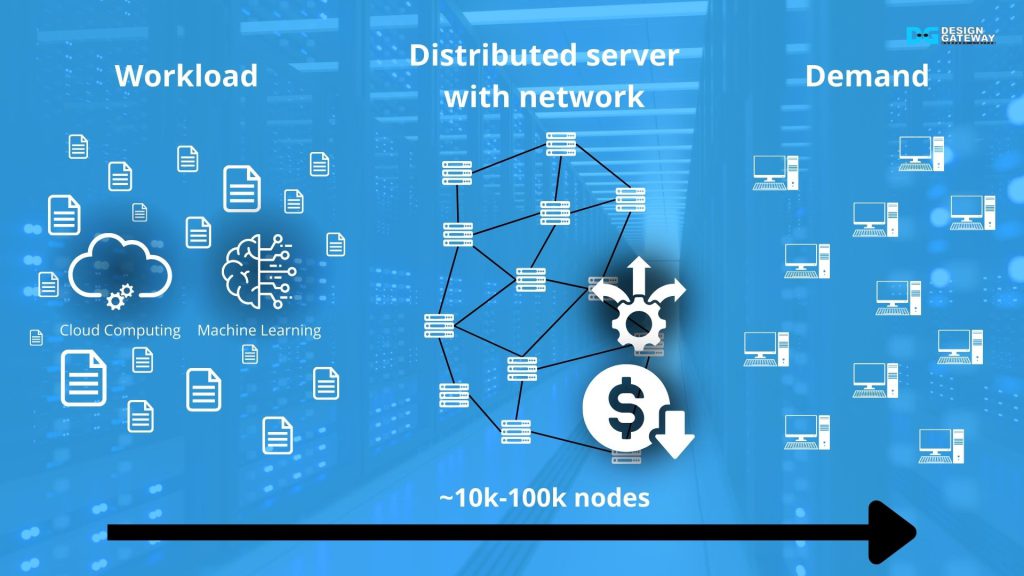

The Rise of AI: Performance Demands Are Growing

Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) continue to push the boundaries of connectivity in modern data centers. Hyperscale infrastructures require solutions that deliver high bandwidth, low latency, and reliability without compromising cost-efficiency. As AI applications grow, the need for scalable, affordable communication becomes critical to balance performance and infrastructure investment.

The Role of FPGA: Bridging AI Compute Nodes with 200G/400G Ethernet

FPGA technology bridges PCIe-based AI accelerators and Ethernet networks for seamless communication. Our TOE200GADV-IP core delivers pure hardware-based 200G TCP offloading, eliminating software bottlenecks to maximize throughput. This enables cost-efficient, high-performance connectivity for AI workloads at scale, at Ethernet speeds of 200G/400G and beyond.

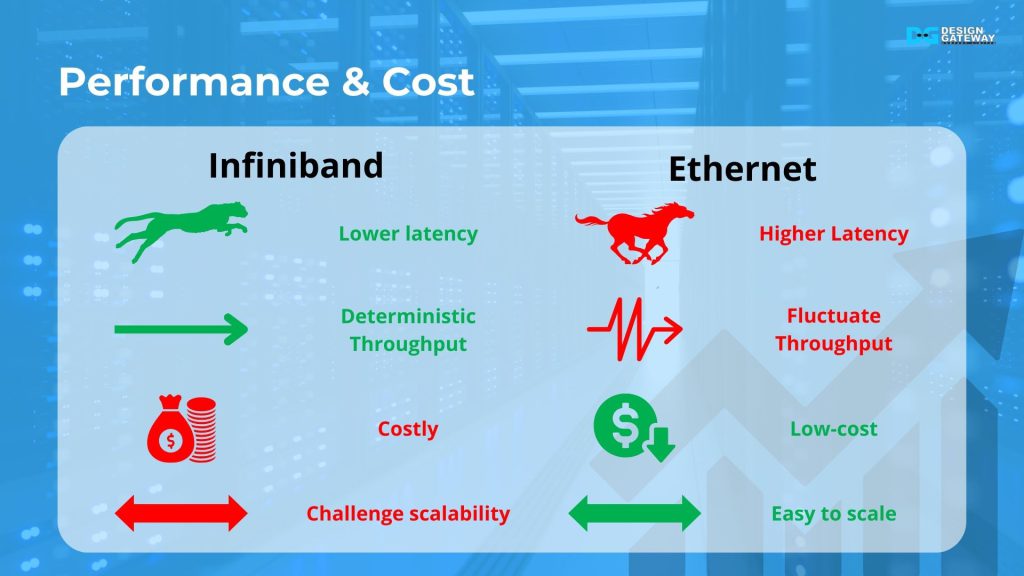

Ethernet vs. InfiniBand: Balancing Cost and Performance

While InfiniBand has long been recognized for its performance benefits in high-performance environments, it comes with significant cost and complexity. In contrast, Ethernet provides a widely adopted, cost-effective alternative with scalability and compatibility across existing data center infrastructure.

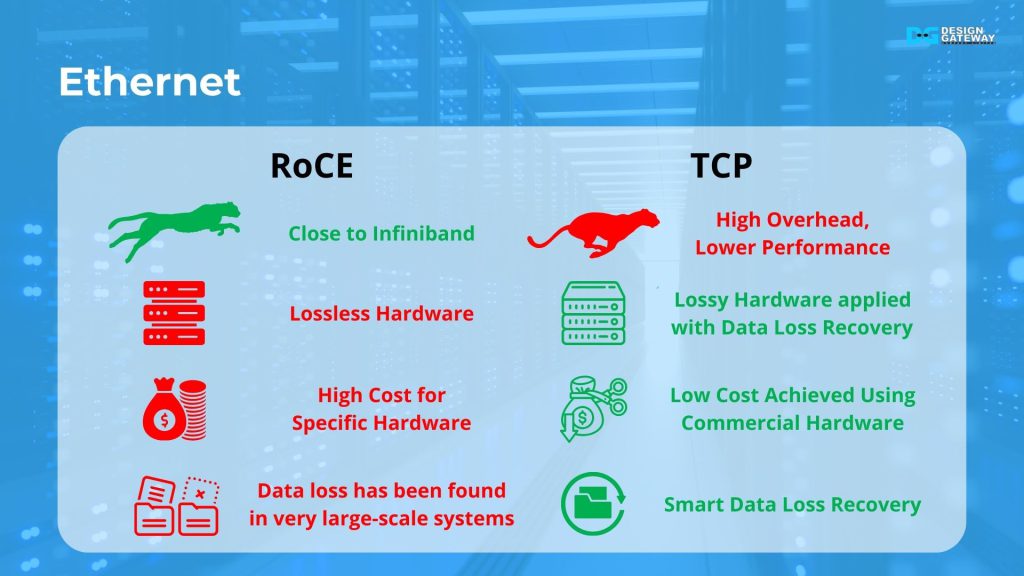

RoCE vs. TCP: Usability Matters

RDMA over Converged Ethernet (RoCE) and TCP/IP both play a role in high-speed communication, but TCP brings unmatched flexibility and usability.


Our TOE200GADV-IP core further enhances TCP/IP performance, ensuring high-speed, reliable communication without the need for additional software or network adjustments.

Learn more about TOE200GADV-IP


Why FPGA & TOE200GADV-IP Core Excel in AI Infrastructure

The TOE200GADV-IP core delivers key benefits for AI-driven data centers.

Unlock the Power of Ethernet for AI Infrastructure

As the demand for AI accelerates, cost-effective, scalable connectivity is key. The TOE200GADV-IP core empowers hyperscale environments to achieve the performance and reliability needed for cutting-edge AI workloads without breaking the bank.

Contact


TOE-IP core series YouTube Videos

Economic Scalability: How Ethernet Transforms AI Data Center Connectivity (YouTube Video | Blog Article)

Empower your 200GbE FPGA farms with FPGA-based TCP/IP (YouTube Video | Blog Article)

Subscribe to DG IP core YouTube channel

Design Gateway Company Profile

The latest information on DG IP cores is available on our YouTube channel.

Watch DG IP core Videos

(c) 2024 Design Gateway Co., Ltd.