NVMeTCP Host Controller IP Core for 25 Gigabit Ethernet

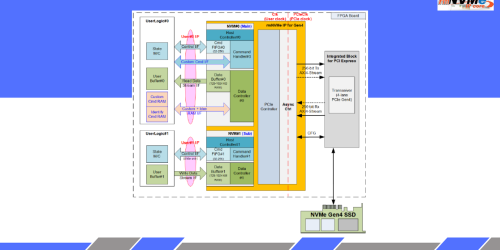

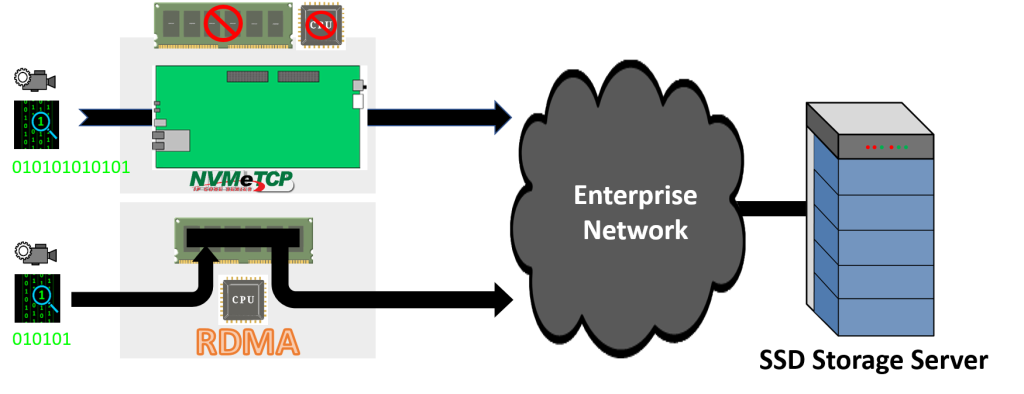

Many of you may be wondering what the difference is between NVMe over TCP and NVMe over RDMA. Both are NVMe over Fabrics transports, but the key difference is that NVMe over RDMA is designed for data transfer between host main memory and storage. So you need a complete host system with a CPU, an OS, a device driver, and specific hardware that supports NVMe over RDMA.

In contrast, our NVMeTCP 10G and 25G IP cores are designed to fully handle the NVMe over TCP protocol on the host side without a CPU, OS, or device driver. This reduces the hardware resources and cost of adopting NVMe over Fabrics solutions on your existing network.

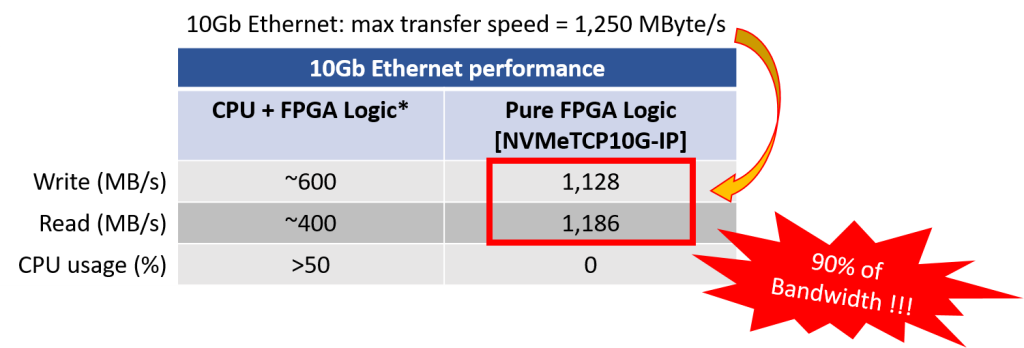

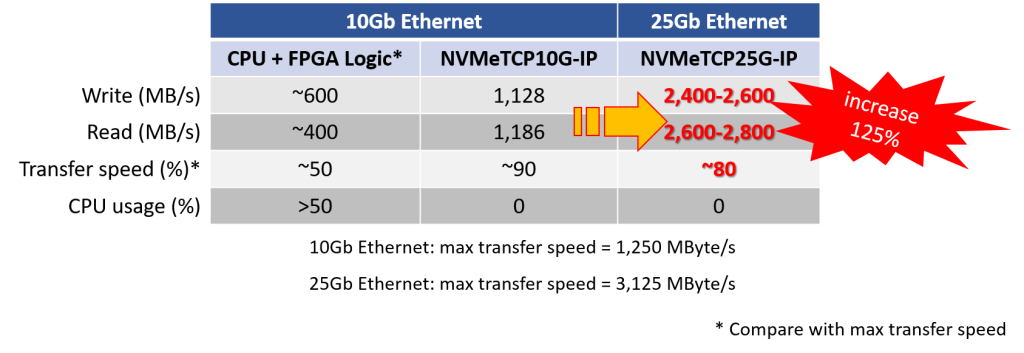

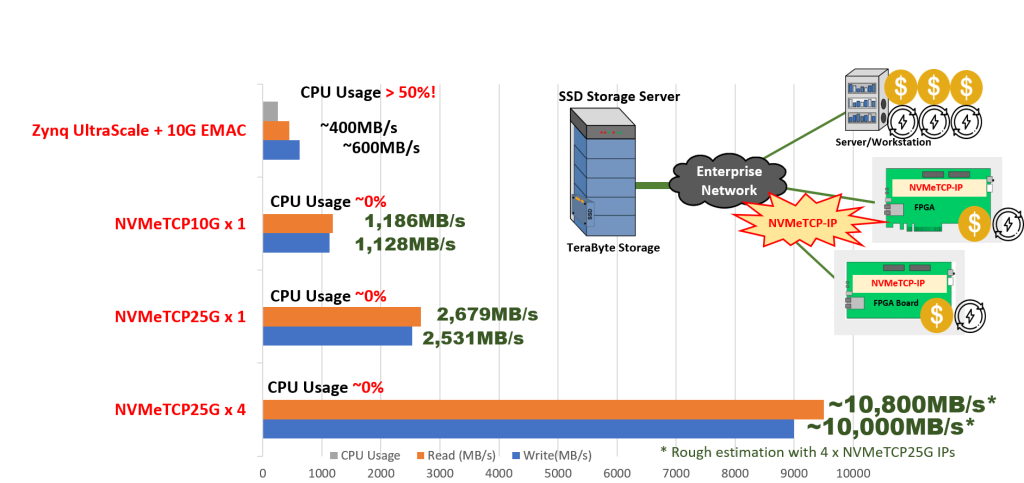

As you can see from the table, a traditional solution normally consumes more than 50% of CPU time and achieves only around 40~60% of the available bandwidth. Our IP core solutions, in contrast, achieve close to the maximum performance of 10G and 25G Ethernet with 0% CPU usage.

Comparing maximum transfer speeds, NVMeTCP 25G achieves more than 2 times the performance of 10G.

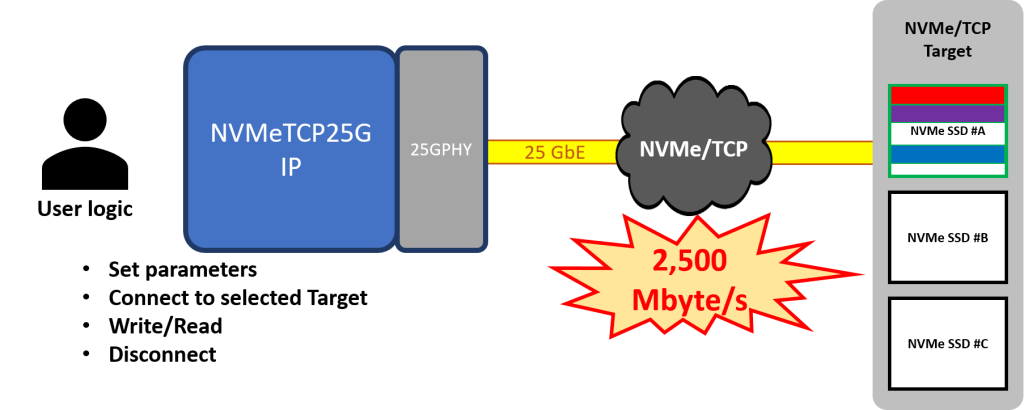

25 Gigabit Ethernet is now the most cost-effective solution for a single-channel Ethernet interface. It is also suitable for 100 Gigabit Ethernet connections: four NVMeTCP 25G IP cores can be implemented to work as a 4-channel RAID controller.

The NVMeTCP IP core for 25G supports 25 Gigabit Ethernet speed. Its usage and operation are the same as for the 10G core.
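To illustrate how four channels could share one logical address space, here is a minimal sketch of RAID-0 style striping. The text does not state the RAID level or stripe size, so both are assumptions, and `map_block` is a hypothetical helper, not part of the IP core.

```python
from typing import Tuple

NUM_CHANNELS = 4      # four NVMeTCP 25G IP core instances, as in the text
STRIPE_BLOCKS = 256   # assumed stripe size in logical blocks (not from the source)

def map_block(lba: int) -> Tuple[int, int]:
    """Map a logical block address to (channel, block address on that channel)."""
    stripe = lba // STRIPE_BLOCKS          # which stripe the block falls in
    offset = lba % STRIPE_BLOCKS           # position inside that stripe
    channel = stripe % NUM_CHANNELS        # stripes rotate across the channels
    channel_lba = (stripe // NUM_CHANNELS) * STRIPE_BLOCKS + offset
    return channel, channel_lba

if __name__ == "__main__":
    for lba in (0, 256, 1023, 1024):
        print(lba, map_block(lba))
```

With this layout, consecutive stripes go to different channels, so a long sequential transfer keeps all four 25G links busy at once.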

- First, set the network and transfer parameters

- Then connect to the selected target server

- Perform read or write data transfers between simple user logic and the IP. When the data transfer is complete, you can disconnect from the current target.
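The order of these steps can be sketched as a small state machine. This is a toy model only: the class, method names, and parameter names are hypothetical and do not represent the IP core's actual user interface. The rule that parameters cannot change while connected is also an assumption.

```python
from enum import Enum, auto

class State(Enum):
    IDLE = auto()         # no parameters set yet
    CONFIGURED = auto()   # network and transfer parameters are set
    CONNECTED = auto()    # connected to the selected target

class HostSession:
    """Toy model of the operation order; not the IP core's real interface."""

    def __init__(self):
        self.state = State.IDLE
        self.params = {}
        self.log = []

    def set_parameters(self, **params):
        # Assumption: parameters may only change while disconnected.
        if self.state is State.CONNECTED:
            raise RuntimeError("disconnect before changing parameters")
        self.params = dict(params)
        self.state = State.CONFIGURED
        self.log.append("set_parameters")

    def connect(self):
        if self.state is not State.CONFIGURED:
            raise RuntimeError("set parameters before connecting")
        self.state = State.CONNECTED
        self.log.append("connect")

    def transfer(self, direction, length):
        if self.state is not State.CONNECTED:
            raise RuntimeError("connect before transferring data")
        if direction not in ("read", "write"):
            raise ValueError("direction must be 'read' or 'write'")
        self.log.append(f"{direction}:{length}")

    def disconnect(self):
        if self.state is not State.CONNECTED:
            raise RuntimeError("not connected")
        self.state = State.CONFIGURED   # parameters stay valid for a reconnect
        self.log.append("disconnect")

if __name__ == "__main__":
    session = HostSession()
    session.set_parameters(target_ip="192.168.25.100", target_port=4420)
    session.connect()
    session.transfer("write", 1 << 30)
    session.transfer("read", 1 << 30)
    session.disconnect()
    print(session.log)
```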

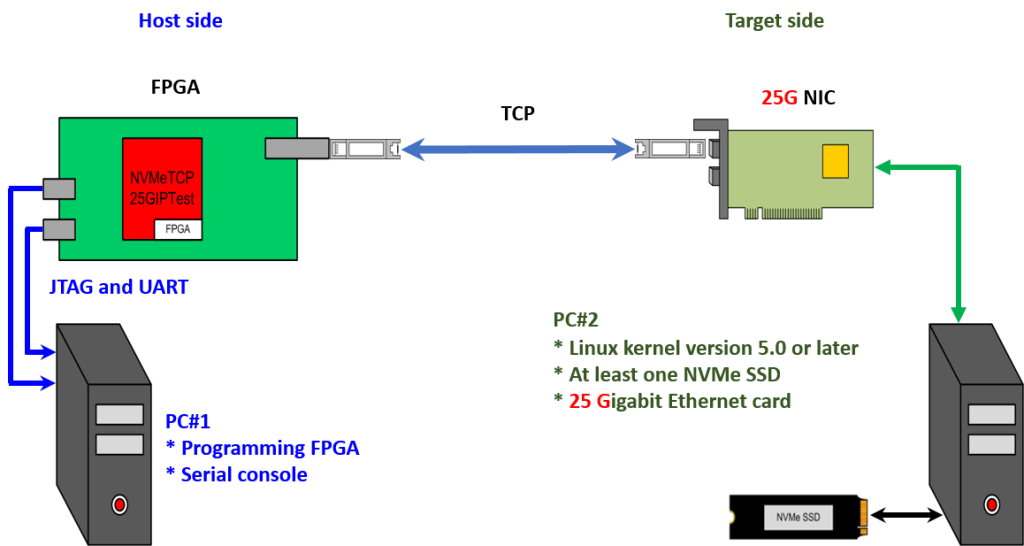

- Host PC #1 for demo operation and for programming the FPGA board

- Host PC #2 as the NVMeTCP target server

  - Ubuntu Linux Server

  - Kernel 5.0 or later

  - 25G network card

  - NVMe SSD

- FPGA board with a 25 Gigabit interface. We chose the Xilinx KCU116 Development Kit, which includes an UltraScale+ device and GTY transceivers that support 25G.

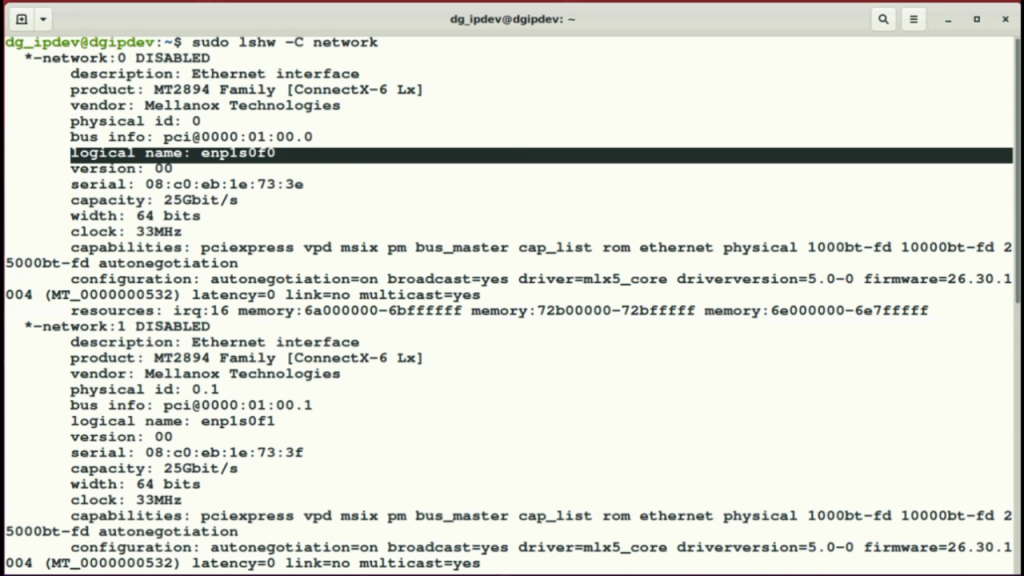

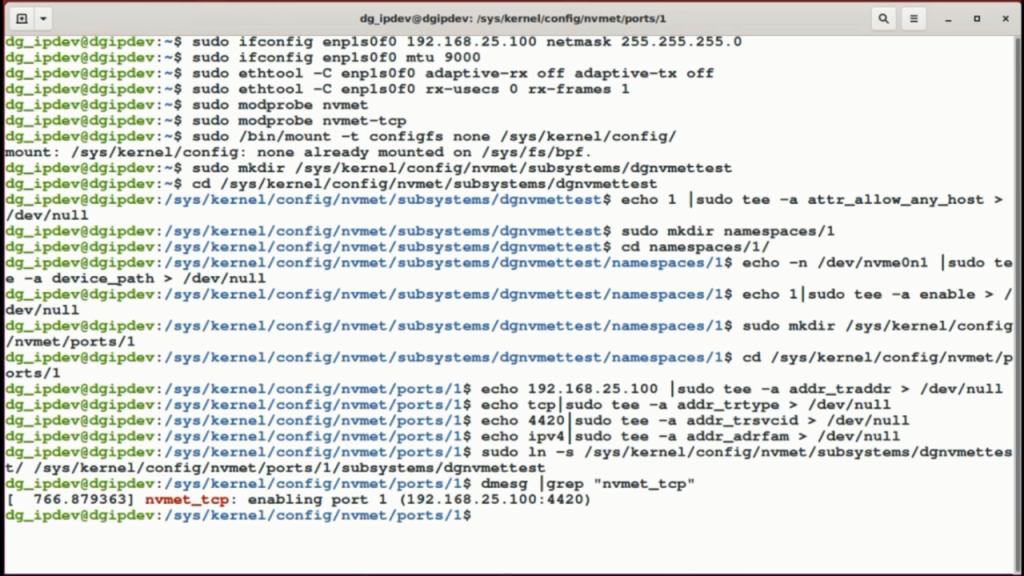

Before running the demo, we need to set up the 25Gb Ethernet network card for best performance using the following commands. The console shows the logical name of the Ethernet connection that is connected to the NVMe/TCP host.

Then, set the target IP address and subnet mask on the desired port of the Ethernet card. After finishing the 25G Ethernet network settings, configure the test PC as the NVMe/TCP target. If the target is set up successfully, the target IP address and port number are printed.

After finishing the PC and FPGA setup, the welcome screen is displayed on the FPGA console. We then need to set the IP parameters. Each parameter is verified by the CPU, and the parameter is updated only when the input is valid. Once all parameters are set, “IP parameters are set” is shown on the console and the Connect command becomes available on the main menu.

After that, the NVMe/TCP connection between the host and the target is established. Once the host successfully connects to the target, “Connect target successfully” and the target NVMe SSD capacity are displayed along with the Write/Read/Disconnect commands.
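The demo's exact target commands are not reproduced in this text. As a rough sketch, a Linux NVMe/TCP target is commonly configured through the kernel's `nvmet` configfs tree, and the function below only builds the ordered list of steps without touching the system. The NQN, device path, and IP address are hypothetical placeholders. Actually applying the steps would need root privileges and the `nvmet` and `nvmet-tcp` kernel modules loaded.

```python
from pathlib import PurePosixPath

NVMET = PurePosixPath("/sys/kernel/config/nvmet")

def target_plan(nqn, device, ip, port=4420, port_id=1, nsid=1):
    """Return the ordered configfs steps as (action, path, value) tuples."""
    subsys = NVMET / "subsystems" / nqn
    ns = subsys / "namespaces" / str(nsid)
    prt = NVMET / "ports" / str(port_id)
    return [
        ("mkdir", str(subsys), None),                          # create the subsystem
        ("write", str(subsys / "attr_allow_any_host"), "1"),   # accept any host NQN
        ("mkdir", str(ns), None),                              # create a namespace
        ("write", str(ns / "device_path"), device),            # back it with the SSD
        ("write", str(ns / "enable"), "1"),
        ("mkdir", str(prt), None),                             # create the port
        ("write", str(prt / "addr_trtype"), "tcp"),
        ("write", str(prt / "addr_adrfam"), "ipv4"),
        ("write", str(prt / "addr_traddr"), ip),
        ("write", str(prt / "addr_trsvcid"), str(port)),
        # For symlink steps: path is the link location, value is the link target.
        ("symlink", str(prt / "subsystems" / nqn), str(subsys)),
    ]

if __name__ == "__main__":
    # All three arguments are placeholders, not values from the demo.
    for step in target_plan("nqn.2024-01.example:demo", "/dev/nvme0n1", "192.168.25.100"):
        print(step)
```

Returning a plan instead of writing to configfs directly keeps the sketch safe to run on any machine and makes the step order easy to review.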
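The verify-then-update behavior described above can be sketched as follows. The parameter names (`host_ip`, `target_ip`, `host_port`, `target_port`) are assumptions for illustration, since the text does not list the demo's actual parameter set.

```python
import ipaddress

# Hypothetical parameter set; the demo's real list is not given in the text.
REQUIRED = ("host_ip", "target_ip", "host_port", "target_port")

def validate(name, text):
    """Return the parsed value, or None when the input is invalid."""
    try:
        if name in ("host_ip", "target_ip"):
            return ipaddress.IPv4Address(text)
        if name in ("host_port", "target_port"):
            port = int(text)
            return port if 0 < port < 65536 else None
    except ValueError:
        return None
    return None  # unknown parameter name

def update(params, name, text):
    """Store the parameter only when the input is valid."""
    value = validate(name, text)
    if value is None:
        return False
    params[name] = value
    return True

def all_set(params):
    """True once every required parameter holds a valid value."""
    return all(name in params for name in REQUIRED)

if __name__ == "__main__":
    params = {}
    print(update(params, "target_ip", "192.168.25.300"))  # rejected: octet out of range
    print(update(params, "target_ip", "192.168.25.100"))  # accepted
    print(all_set(params))                                # other parameters still missing
```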

For the Write command, the host sends a Write command with pattern data across Ethernet to the target, which writes it to the NVMe SSD. The transfer speed is about 2424 MB/s.

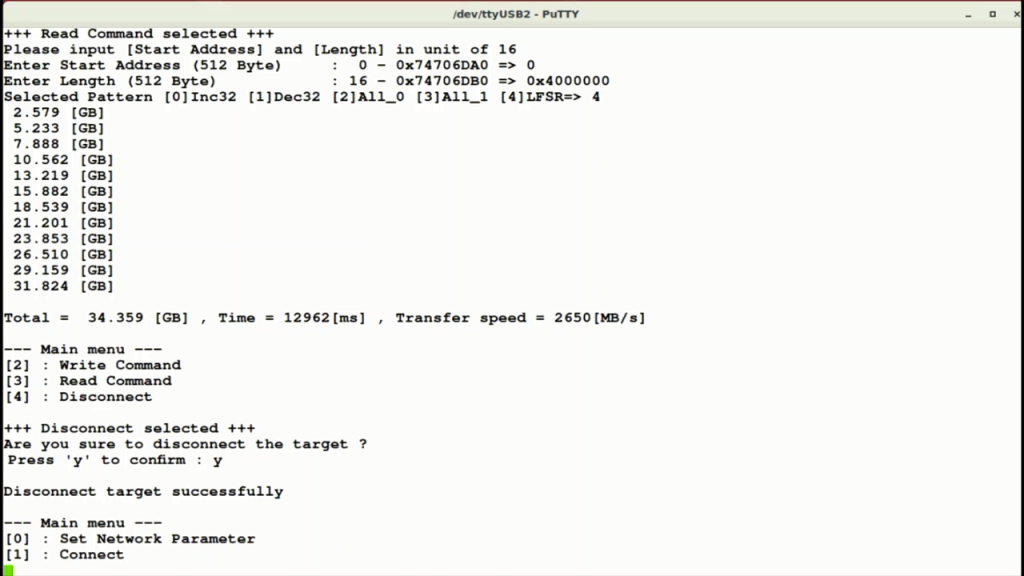

For the Read command, the host sends a Read command across Ethernet to the target, which reads the data from the NVMe SSD. The transfer speed is about 2650 MB/s.

Let's compare the solutions in terms of performance, power consumption, and cost. For a CPU- and OS-based system, such as a Zynq UltraScale+ MPSoC device with a 10 or 25 Gigabit EMAC, TCP transfer bandwidth is around 40% for read and 60% for write according to iperf benchmark results. This is less than 70% of the 10GbE or 25GbE bandwidth, yet it requires more than 50% of CPU time to handle the TCP protocol.
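To put the two measured speeds in context, the short calculation below compares them with the raw 25 Gb/s line rate. It assumes that 1 MB means 10^6 bytes and ignores Ethernet, IP, and TCP header overhead, so the usable payload ceiling is somewhat lower than the figure used here.

```python
LINE_RATE_BITS_PER_S = 25e9   # raw 25 Gigabit Ethernet line rate

def utilization(mb_per_s: float) -> float:
    """Fraction of the raw line rate used, assuming 1 MB = 10**6 bytes."""
    return mb_per_s * 1e6 * 8 / LINE_RATE_BITS_PER_S

if __name__ == "__main__":
    # Measured speeds quoted in the demo.
    for name, speed in (("write", 2424), ("read", 2650)):
        print(f"{name}: {utilization(speed):.1%}")
```

Under these assumptions, the write and read speeds work out to roughly 78% and 85% of the raw line rate.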

For our NVMeTCP IP core, performance is consistent at more than 80% of the bandwidth, with no CPU intervention needed. By implementing four instances of the NVMeTCP25G IP, you can easily achieve close to 10 GB/s transfer speed over Ethernet without a direct interface to the NVMe SSDs.
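The 10 GB/s figure follows from simple arithmetic on the single-channel demo results, assuming that throughput scales linearly across four independent instances. That scaling is an assumption, not a measured result from this demo.

```python
SINGLE_CHANNEL_MB_PER_S = {"write": 2424, "read": 2650}  # demo measurements
INSTANCES = 4                                            # four NVMeTCP25G IP cores

def aggregate_gb_per_s(mb_per_s: float, instances: int = INSTANCES) -> float:
    """Aggregate speed in GB/s, assuming perfectly linear scaling."""
    return mb_per_s * instances / 1000

if __name__ == "__main__":
    for name, speed in SINGLE_CHANNEL_MB_PER_S.items():
        print(f"{name}: {aggregate_gb_per_s(speed):.2f} GB/s")
```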

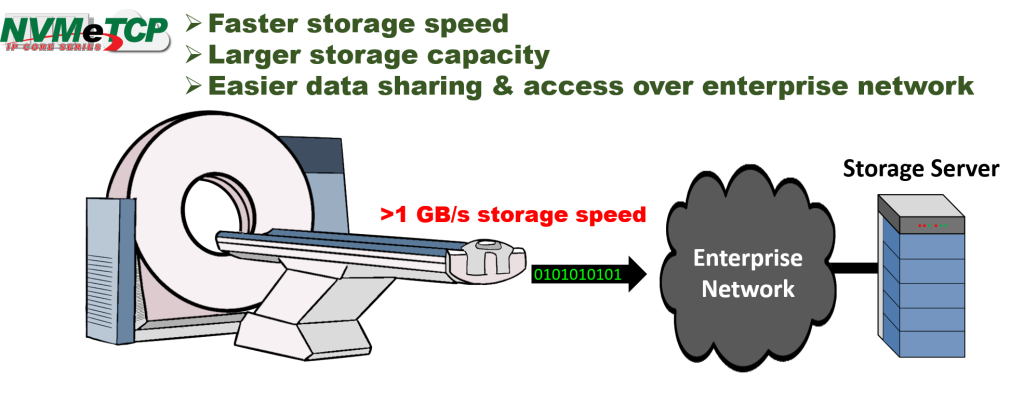

As you can see, our NVMeTCP IP core solutions let you access an NVMe storage server directly from your FPGA board through a 10G or 25G Ethernet interface.

You can easily upgrade your existing systems to get:

- Faster storage speed

- Larger storage capacity

- Easier data sharing and access over enterprise network