Super-resolution on KV260

The Kria KV260* is one of Xilinx's edge devices, designed for vision AI applications. To implement customized AI applications on the KV260, Xilinx Vitis AI** is used as the development stack for AI inference. It consists of optimized IP, tools, libraries, example designs, and free pre-trained models.

For super-resolution applications, the Vitis AI Model Zoo provides a pre-trained model of the very deep Residual Channel Attention Network (RCAN***), a popular image super-resolution (SR) architecture. Following the Vitis AI User Guide, this blog describes the two stages of deploying the RCAN model on the KV260: preparing the xmodel and implementing it on the KV260.


Preparing xmodel

(0) Test Environment

- Python 3.7 and PyTorch 1.13

- Docker with Vitis AI 2.5

Installation instructions are provided at https://github.com/Xilinx/Vitis-AI#getting-started.

The xmodel preparation step requires slightly different settings for the CPU and GPU Docker images. Apply the changes that match your Docker version.

For Docker CPU version

- Remove the CUDA_HOME environment variable setting:

unset CUDA_HOME

- Pass the argument --cpu when running the Python script

- Pass map_location=torch.device('cpu') to torch.load() in the Python script

- Pass device=torch.device('cpu') to QatProcessor() in the Python script

For Docker GPU version

- Set CUDA_HOME:

export CUDA_HOME=/usr/local/cuda

- Set CUDA_VISIBLE_DEVICES=0 (according to the ID of the GPU in use)
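If you drive the xmodel preparation from a Python wrapper instead of typing these commands by hand, the CPU/GPU differences above can be kept in one place. The following is a minimal sketch built around a hypothetical helper named configure_docker_env; it is not part of the Vitis AI scripts. Note that changes to os.environ only affect the current Python process and any child processes it starts (for example through subprocess), not the shell that launched it, and the torch.load()/QatProcessor() edits still have to be made inside the training script itself.

```python
import os


# Hypothetical helper (not part of the Vitis AI scripts) that applies the
# CPU/GPU Docker settings listed above to the current process environment.
def configure_docker_env(use_gpu, gpu_id=0, cuda_home="/usr/local/cuda"):
    """Set CUDA-related env vars and return extra arguments for the Python script."""
    if use_gpu:
        # GPU Docker: export CUDA_HOME and select the GPU by its ID.
        os.environ["CUDA_HOME"] = cuda_home
        os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
        return []
    # CPU Docker: equivalent of `unset CUDA_HOME` (no error if it is not set).
    os.environ.pop("CUDA_HOME", None)
    # The script also needs --cpu; inside it, torch.load() must receive
    # map_location=torch.device('cpu') and QatProcessor() must receive
    # device=torch.device('cpu'). Those source edits are not done here.
    return ["--cpu"]


if __name__ == "__main__":
    print(configure_docker_env(use_gpu=False))  # prints ['--cpu']
```

The returned list can be appended to the argument list of whatever command launches the Python script.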

(1) Prepare training data

- Download the model from the Model Zoo: pt-OFA-rcan_DIV2K_360_640_45.7G_2.5.zip

- Prepare the datasets

Download the DIV2K dataset: https://idealo.github.io/image-super-resolution/tutorials/training/

Download the benchmark datasets: https://cv.snu.ac.kr/research/EDSR/benchmark.tar

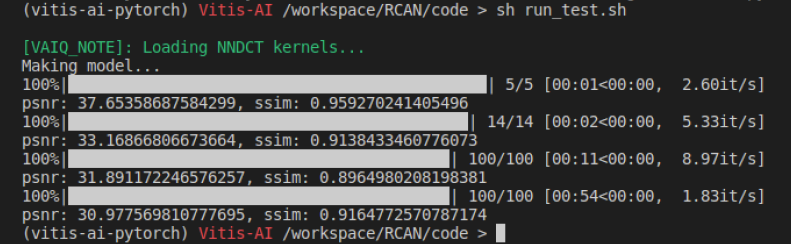
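Before training, it can help to confirm that every high-resolution (HR) image has a matching low-resolution (LR) counterpart. The sketch below assumes the standard DIV2K naming, where an HR image such as 0001.png in DIV2K_train_HR pairs with 0001x2.png in DIV2K_train_LR_bicubic/X2 for scale 2. The exact directory layout that run_train.sh expects is an assumption here, so check the script's data path arguments; the function name pair_div2k is hypothetical.

```python
from pathlib import Path


def pair_div2k(hr_dir, lr_dir, scale=2):
    """Pair HR images (e.g. 0001.png) with LR images (e.g. 0001x2.png).

    Returns (pairs, missing): matched (hr_path, lr_path) tuples and the
    names of HR images that have no LR file for the given scale.
    Assumes the standard DIV2K file naming; hypothetical helper.
    """
    pairs, missing = [], []
    for hr in sorted(Path(hr_dir).glob("*.png")):
        # DIV2K bicubic LR files append "x<scale>" to the HR file stem.
        lr = Path(lr_dir) / f"{hr.stem}x{scale}{hr.suffix}"
        if lr.exists():
            pairs.append((hr, lr))
        else:
            missing.append(hr.name)
    return pairs, missing
```

For example, pair_div2k("DIV2K/DIV2K_train_HR", "DIV2K/DIV2K_train_LR_bicubic/X2", scale=2) returns the matched pairs plus the names of any HR images without an LR file, which is a quick way to catch an incomplete download.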

(2) Evaluate the floating-point model

- Launch the Docker image

- Install vai_q_pytorch by following https://docs.xilinx.com/r/2.0-English/ug1414-vitis-ai/Installing-vai_q_pytorch

- Run the run_test.sh shell script

Output

(3) Train model

To train the model with the DIV2K dataset, run the run_train.sh shell script.

The model parameters can be adjusted by editing run_train.sh as desired:

- --scale: the scaling factor that matches your training dataset. In this case, scale is set to 2.

- --n_resgroups: the number of residual groups. In this case, n_resgroups is set to 1.

- --epochs: the number of training epochs. Reduce it to shorten training time, or increase it to improve PSNR. In this case, epochs is set to 250.
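PSNR (peak signal-to-noise ratio) is the quality metric that longer training aims to raise. As a reference for reading the evaluation output, here is a minimal sketch of the plain definition, PSNR = 10 * log10(MAX^2 / MSE), with MAX = 255 for 8-bit images. It works on flat lists of pixel values; the evaluation in run_test.sh may differ in details (super-resolution benchmarks often measure PSNR on the Y channel and crop image borders), so treat this as an illustration of the formula rather than a reimplementation of the script.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Plain PSNR in dB between two equal-length sequences of pixel values."""
    if not reference or len(reference) != len(restored):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    # Every pixel off by 1 -> MSE = 1 -> 20 * log10(255).
    print(round(psnr([0, 0, 0, 0], [1, 1, 1, 1]), 2))  # prints 48.13
```

Because PSNR is logarithmic in the MSE, halving the error energy raises PSNR by only about 3 dB, which is why gains from extra epochs tend to look small in absolute terms.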

(4) Quantize

The floating-point model is quantized and calibrated with the Vitis AI Quantizer by running the run_qat.sh shell script.

Model parameters

- --scale: In this case, scale is set to 2.

- --n_resgroups: In this case, n_resgroups is set to 1.

- --epochs: In this case, epochs is set to 250.

After calibration, Model_0_int.xmodel is generated as the DPU-deployable model.

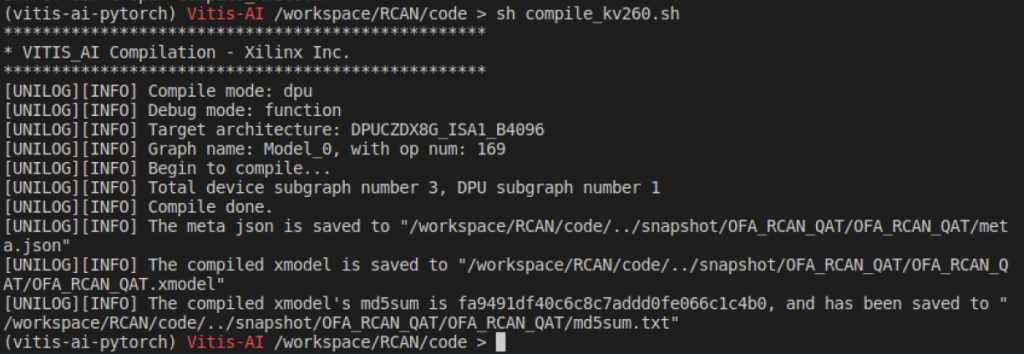
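To give an intuition for what the int8 xmodel stores, the sketch below shows power-of-two (fixed-point) quantization, the kind of scheme DPU targets use: a value x is stored as an 8-bit integer q with x approximately equal to q / 2^fix_pos. This is a simplified illustration under stated assumptions (round-half-up, fix_pos chosen from the maximum absolute value), not vai_q_pytorch's implementation; quantization-aware training additionally fine-tunes the weights while these quantization effects are simulated. All function names are hypothetical.

```python
import math


def choose_fix_pos(values, bits=8):
    """Largest exponent with max|x| * 2**fix_pos inside the signed int range (assumed rule)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return 0
    return math.floor(math.log2(qmax / max_abs))


def quantize(values, fix_pos, bits=8):
    """Scale by 2**fix_pos, round half up, then clamp to [-128, 127] for 8 bits."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    scale = 2.0 ** fix_pos
    return [min(qmax, max(qmin, math.floor(v * scale + 0.5))) for v in values]


def dequantize(q, fix_pos):
    """Map integers back to real values: x ~= q / 2**fix_pos."""
    return [x / 2.0 ** fix_pos for x in q]


if __name__ == "__main__":
    weights = [0.5, -1.25, 0.03]
    fp = choose_fix_pos(weights)          # 6, i.e. a scale of 64
    q = quantize(weights, fp)             # [32, -80, 2]
    print(fp, q, dequantize(q, fp))       # 0.03 comes back as 0.03125
```

A power-of-two scale lets the hardware rescale with bit shifts instead of multiplications, at the cost of a coarser choice of scales than arbitrary floating-point factors.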

(5) Compile the model

The Vitis AI compiler compiles the DPU-deployable model. Call vai_c_xir as follows:

vai_c_xir \
  --xmodel ../snapshot/OFA_RCAN_QAT/model/qat_result/Model_0_int.xmodel \
  --arch /opt/vitis_ai/compiler/arch/DPUCZDX8G/KV260/arch.json \
  --net_name OFA_RCAN_QAT \
  --output_dir ../snapshot/OFA_RCAN_QAT/OFA_RCAN_QAT
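If the compile step is scripted, the same invocation can be built as an argument list, which avoids shell-quoting mistakes in long paths. This minimal sketch only assembles and prints the command shown above, using exactly its flags and paths; build_compile_cmd and run_compile are hypothetical helper names, and actually running the command requires the Vitis AI Docker environment where vai_c_xir is installed.

```python
import shlex
import subprocess


def build_compile_cmd(xmodel, arch, net_name, output_dir):
    """Assemble the vai_c_xir call as an argument list (flags as used above)."""
    return [
        "vai_c_xir",
        "--xmodel", xmodel,
        "--arch", arch,
        "--net_name", net_name,
        "--output_dir", output_dir,
    ]


def run_compile(cmd):
    # Only works inside the Vitis AI Docker image, where vai_c_xir is on PATH.
    subprocess.run(cmd, check=True)


cmd = build_compile_cmd(
    "../snapshot/OFA_RCAN_QAT/model/qat_result/Model_0_int.xmodel",
    "/opt/vitis_ai/compiler/arch/DPUCZDX8G/KV260/arch.json",
    "OFA_RCAN_QAT",
    "../snapshot/OFA_RCAN_QAT/OFA_RCAN_QAT",
)

if __name__ == "__main__":
    # shlex.quote keeps this compatible with Python 3.7 (shlex.join needs 3.8+).
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

Passing a list to subprocess.run() runs the program directly without a shell, so each path is delivered as a single argument even if it contains spaces.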

The compiler outputs OFA_RCAN_QAT.xmodel, md5sum.txt, and meta.json. These files are used to deploy the RCAN model on edge devices.
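Since md5sum.txt accompanies the xmodel, it can be used to check that the model file arrived intact after copying it to the board. This sketch assumes md5sum.txt contains the xmodel's MD5 hex digest as its first whitespace-separated token; verify that against your own compiler output before relying on it. The helper names file_md5 and verify_xmodel are hypothetical.

```python
import hashlib
from pathlib import Path


def file_md5(path, chunk_size=1 << 20):
    """MD5 hex digest of a file, read in chunks so large xmodels fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_xmodel(model_dir, net_name="OFA_RCAN_QAT"):
    """Compare <net_name>.xmodel with the first token of md5sum.txt (assumed format)."""
    model_dir = Path(model_dir)
    expected = (model_dir / "md5sum.txt").read_text().split()[0].lower()
    return file_md5(model_dir / f"{net_name}.xmodel") == expected
```

Running verify_xmodel on the board against the copied model directory returns True when the checksum matches; a False result usually means the transfer was truncated.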

Implementing on KV260

(1) Set up the board

Download the SD card image (xilinx-kv260-dpu-v2022.1-v2.5.0.img.gz):

https://www.xilinx.com/member/forms/download/design-license-xef.html?filename=xilinx-kv260-dpu-v2022.1-v2.5.0.img.gz

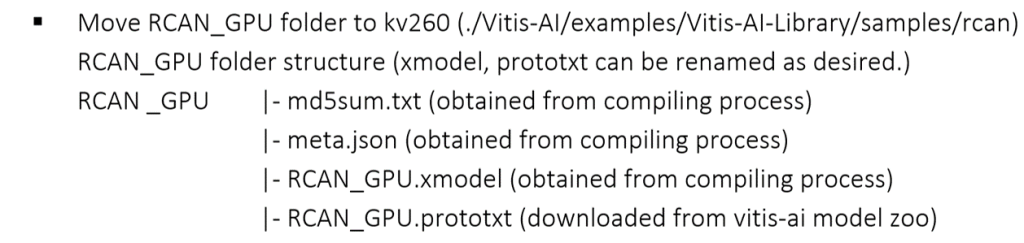

(2) Move the model files to the board

- Build the RCAN sample application

[edge]# cd ./Vitis-AI/examples/Vitis-AI-Library/samples/rcan
[edge]# bash -x build.sh

- Run test_jpeg_rcan

[edge]# ./test_jpeg_rcan RCAN_GPU DG_sample.jpg

The output image is saved in ./Vitis-AI/examples/Vitis-AI-Library/samples/rcan as 0_DG_sample_result.jpg.

Result

| Input image | Result |
| --- | --- |
| DG_sample.jpg | 0_DG_sample_result.jpg |

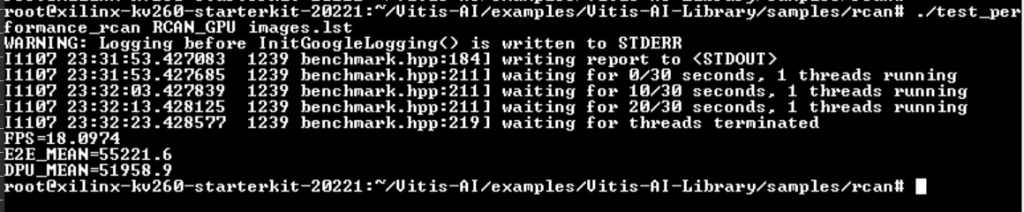

(3) Test Performance

- Copy images.lst (a list of image file names) and the images folder to the target

- Run the performance test command

[edge]# ./test_performance_rcan RCAN_GPU images.lst
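images.lst can be generated rather than written by hand. This is a minimal sketch, assuming the list holds one image file name per line as described above; if test_performance_rcan is run from the parent directory of the images folder, the entries may need a relative path prefix such as images/, which the optional prefix argument adds. The function name write_image_list and the accepted file extensions are assumptions.

```python
from pathlib import Path

# Assumed set of image extensions to include in the list.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def write_image_list(image_dir, list_path="images.lst", prefix=""):
    """Write one image entry per line (sorted by name) and return the entries."""
    image_dir = Path(image_dir)
    names = sorted(
        p.name for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    # Optional relative prefix, e.g. "images" -> "images/0001.jpg".
    entries = [f"{prefix}/{name}" if prefix else name for name in names]
    Path(list_path).write_text("".join(entry + "\n" for entry in entries))
    return entries
```

Sorting the entries keeps the list stable between runs, so performance numbers from different runs are measured over the same image order.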

For more information, refer to the Xilinx documents UG1414 and UG1354:

https://docs.xilinx.com/r/2.0-English/ug1414-vitis-ai/Vitis-AI-Overview

https://docs.xilinx.com/r/en-US/ug1354-xilinx-ai-sdk/Model-Samples

* For further information about the Kria KV260, please refer to https://www.xilinx.com/products/som/kria/kv260-vision-starter-kit.html

** For further information about Vitis AI, please refer to https://dgway.com/blog_E/2022/10/03/accelerator-systems-for-ai/

*** For further information about the RCAN model, please refer to https://github.com/yulunzhang/RCAN