NVMe IP Core Datasheet

| Core Facts | |
|---|---|
| Provided with Core | |
| Documentation | Reference Design Manual, Demo Instruction Manual |
| Design File Formats | Encrypted File |
| Instantiation Templates | VHDL |
| Reference Designs & Application Notes | Vivado Project, See Reference Design Manual |
| Additional Items | Demo on AC701, KC705, VC707, VC709, ZC706, Zynq Mini-ITX, KCU105, KCU116, ZCU106, ZCU111, VCU118, VCU128 |
| Support | |
| Support Provided by Design Gateway Co., Ltd. | |

Design Gateway Co., Ltd.

E-mail: ip-sales@design-gateway.com

URL: design-gateway.com

Features

· Direct NVMe SSD access without the need for a CPU or external memory

· Includes a 256 KB data buffer implemented by BlockRAM

· Simple user interface through dgIF typeS

· Supports seven commands: Identify, Shutdown, Write, Read, SMART, Secure Erase, and Flush

· Supported NVMe devices

  · Base Class Code: 01h (mass storage), Sub Class Code: 08h (Non-volatile), Programming Interface: 02h (NVMHCI)

  · MPSMIN (Memory Page Size Minimum): 0 (4 KB)

  · MDTS (Maximum Data Transfer Size): At least 5 (128 KB) or 0 (no limitation)

  · LBA unit: 512 bytes or 4 KB

· User clock frequency: At least the PCIe clock frequency (125 MHz for Gen2, 250 MHz for Gen3)

· PCIe Hard IP interface: 128-bit AXI4 interface, configured by 4-lane PCIe Gen2 or Gen3

· Available reference designs

  · 1-ch demo on AC701, KC705, VC707, VC709, ZC706, Zynq Mini-ITX, KCU105, KCU116, ZCU106, ZCU111, VCU118, and VCU128 with AB17-M2FMC, AB18-PCIeX16, or AB19-M2PCI adapter board

  · 1-ch with DDR for sustained data transfer on KCU105

  · 2-ch/4-ch RAID0 demo on KCU105 and VCU118

· Customized service for the following features

  · Additional NVMe commands

  · RAM size or RAM type (URAM) modification
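The MPSMIN and MDTS values in the supported-device list are encoded as powers of two. The following is a minimal sketch of that arithmetic, assuming the standard NVMe definitions (minimum page size = 2^(12+MPSMIN) bytes, and MDTS expressed in units of the minimum page size). The function names are hypothetical and are not part of the IP.

```python
def min_page_size_bytes(mpsmin: int) -> int:
    """Minimum memory page size in bytes: 2^(12 + MPSMIN)."""
    return 1 << (12 + mpsmin)


def max_transfer_bytes(mdts: int, mpsmin: int = 0):
    """Maximum data transfer size in bytes, or None when MDTS = 0 (no limit)."""
    if mdts == 0:
        return None
    return (1 << mdts) * min_page_size_bytes(mpsmin)


def device_is_supported(mdts: int, mpsmin: int) -> bool:
    """Apply the two size-related requirements from the feature list."""
    return mpsmin == 0 and (mdts == 0 or mdts >= 5)


if __name__ == "__main__":
    print(min_page_size_bytes(0))        # 4 KB page
    print(max_transfer_bytes(5))         # 128 KB transfer
    print(device_is_supported(4, 0))     # MDTS too small for this IP
```

With MPSMIN = 0, an MDTS of 5 corresponds to 32 pages of 4 KB, which is the 128 KB command size used by the IP.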

Table 1: Example Implementation Statistics for 7-Series device (PCIe Gen2/Gen3)

| Family | Example Device | PCIe Speed | Fmax (MHz) | Slice Regs | Slice LUTs | Slices | IOB | BRAMTile1 | PLL | GTX | Design Tools |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Virtex-7 | XC7VX690TFFG1761-2 | Gen3 | 300 | 4261 | 3194 | 1303 | - | 66 | - | - | Vivado 2019.1 |
| Virtex-7 | XC7VX485TFFG1761-2 | Gen2 | 300 | 4261 | 3196 | 1370 | - | 66 | - | - | Vivado 2019.1 |
| Zynq-7000 | XC7Z045FFG900-2 | Gen2 | 300 | 4261 | 3195 | 1350 | - | 66 | - | - | Vivado 2019.1 |
| Kintex-7 | XC7K325TFFG900-2 | Gen2 | 300 | 4261 | 3196 | 1429 | - | 66 | - | - | Vivado 2019.1 |
| Artix-7 | XC7A200TFBG676-2 | Gen2 | 210 | 4261 | 2657 | 1285 | - | 66 | - | - | Vivado 2019.1 |

Note: Actual logic resource utilization depends on the percentage of unrelated logic.

Table 2: Example Implementation Statistics for Ultrascale/Ultrascale+ device (PCIe Gen3)

| Family | Example Device | Fmax (MHz) | CLB Regs | CLB LUTs | CLB | IOB | BRAMTile1 | PLL | GTX | Design Tools |
|---|---|---|---|---|---|---|---|---|---|---|
| Kintex-Ultrascale | XCKU040FFVA1156-2E | 375 | 4165 | 2718 | 814 | - | 66 | - | - | Vivado 2017.4 |
| Kintex-Ultrascale+ | XCKU5P-FFVB676-2E | 400 | 4165 | 2737 | 834 | - | 66 | - | - | Vivado 2017.4 |
| Zynq-Ultrascale+ | XCZU7EV-FFVC1156-2E | 400 | 4165 | 2732 | 880 | - | 66 | - | - | Vivado 2017.4 |
| Virtex-Ultrascale+ | XCVU9P-FLGA2104-2L | 400 | 4165 | 2734 | 815 | - | 66 | - | - | Vivado 2017.4 |

Note: Actual logic resource utilization depends on the percentage of unrelated logic.

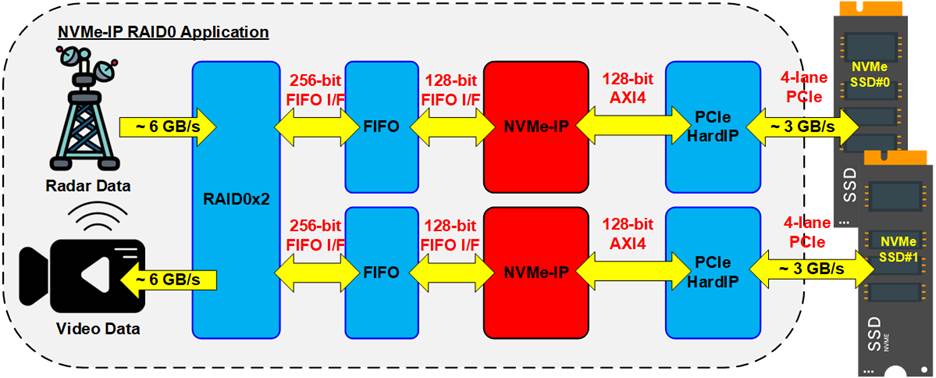

Applications

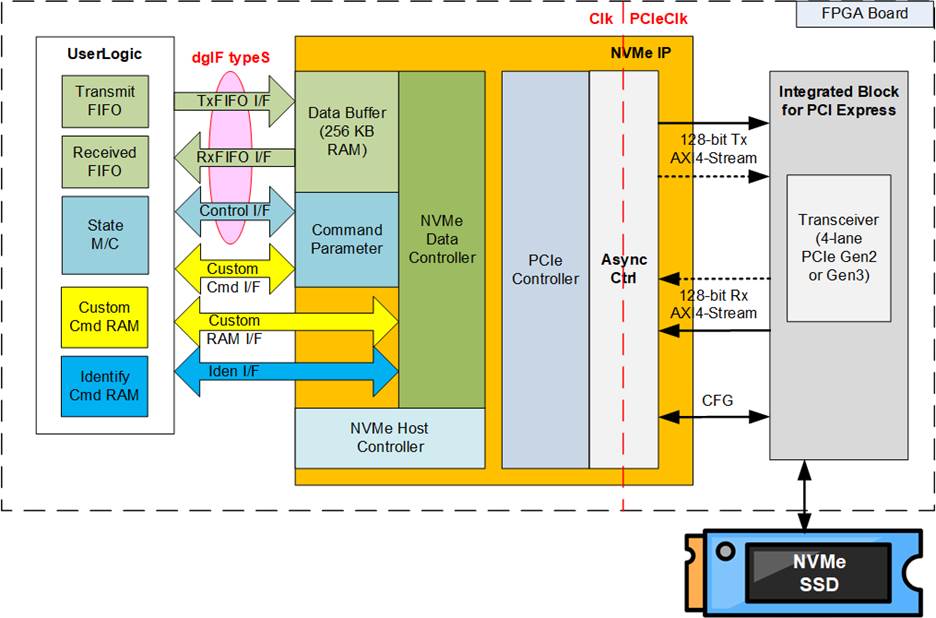

Figure 1: NVMe IP Application

The NVMe IP Core, integrated with the Integrated Block for PCI Express (PCIe hard IP) from Xilinx, provides an ideal solution for accessing an NVMe SSD without the need for a CPU or external memory, including DDR. To increase transfer performance, a RAID0 design can be built from multiple NVMe IPs and PCIe hard IPs, as shown in Figure 1. A two-channel RAID0 system with two NVMe IPs and two NVMe SSDs increases the transfer speed by up to two times (6 GB/s if the transfer speed of each SSD is 3 GB/s). This can accommodate high-speed data from radar systems or video data streams.

We also offer alternative IP cores for specific applications such as multiple users, random access, and PCIe switch.

Multiple User NVMe IP Core: Enables multiple users to access an NVMe SSD for high-performance write and read operations simultaneously.

https://dgway.com/muNVMe-IP_X_E.html

Random Access by Multiple User NVMe IP Core: Enables two users to write and read to the same NVMe SSD simultaneously, providing high random-access performance for applications with non-contiguous storage requirements.

https://dgway.com/rmNVMe-IP_X_E.html

NVMe IP Core for PCIe Switch: Accesses multiple NVMe SSDs via a PCIe switch to extend storage capacity. Enables high-speed write and read access to shared storage.

https://dgway.com/NVMe-IP_X_E.html

NVMe IP Core with PCIe Soft IP: Accesses the NVMe SSD without the need for PCIe hard IP.

https://dgway.com/NVMeG4-IP_X_E.html

General Description

Figure 2: NVMe IP Block Diagram

The NVMe IP is a complete host controller solution that enables access to an NVMe SSD using the NVM express standard. The physical interface for the NVMe SSD is PCIe, and the lower layer hardware is implemented using Integrated Block for PCI Express (PCIe hard IP) from Xilinx.

The NVMe IP core implements seven NVMe commands: Identify, Shutdown, Write, Read, SMART, Secure Erase, and Flush. It uses two user interface groups to transfer commands and data. The Control interface transfers commands and their parameters, while the Data interface transfers data when required by the command. For Write/Read commands, the Control interface and Data interface use dgIF typeS, which is our standard interface for storage. The Control interface of dgIF typeS includes start address, transfer length, and request signals, and the Data interface uses the standard FIFO interface.

If abnormal conditions are detected during initialization or certain command operation, the NVMe IP may assert an error signal. The error status can be read from the IP for more details. Once the error cause is resolved, both the NVMe IP and SSD must be reset.

The user interface of the PCIe hard IP requires data to be valid on every clock cycle between the start and the end of a frame. To ensure continuous packet transmission until the end of the packet, the user logic clock frequency must be equal to or greater than the PCIe clock frequency (250 MHz for PCIe Gen3, 125 MHz for PCIe Gen2). This guarantees that the bandwidth on the user interface is equal to or greater than the PCIe hard IP bandwidth.
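The clock requirement above can be checked with simple arithmetic. The sketch below assumes a 128-bit bus that moves one word per clock cycle on both sides. The helper names are hypothetical and only illustrate the comparison.

```python
DATA_WIDTH_BITS = 128  # width of the interface to the PCIe hard IP

# PCIe clock frequency per generation, as stated in the text.
PCIE_CLK_MHZ = {"Gen2": 125, "Gen3": 250}


def interface_bandwidth_mbytes(clk_mhz: float) -> float:
    """Peak bandwidth in MB/s of a 128-bit bus moving one word per cycle."""
    return clk_mhz * DATA_WIDTH_BITS / 8


def user_clock_ok(user_clk_mhz: float, pcie_gen: str) -> bool:
    """True when the user clock meets or exceeds the PCIe clock."""
    return user_clk_mhz >= PCIE_CLK_MHZ[pcie_gen]


if __name__ == "__main__":
    for gen, clk in PCIE_CLK_MHZ.items():
        print(gen, interface_bandwidth_mbytes(clk), "MB/s on the PCIe side")
    print(user_clock_ok(200, "Gen3"))  # a 200 MHz user clock is too slow for Gen3
```

A user clock below the PCIe clock would give the user side less peak bandwidth than the PCIe side, so the IP could not keep data valid on every cycle of a frame.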

Overall, the NVMe IP provides a comprehensive solution for accessing NVMe SSDs. The IP core comes with reference designs on FPGA evaluation boards, allowing users to evaluate the product's capabilities before making a purchase.

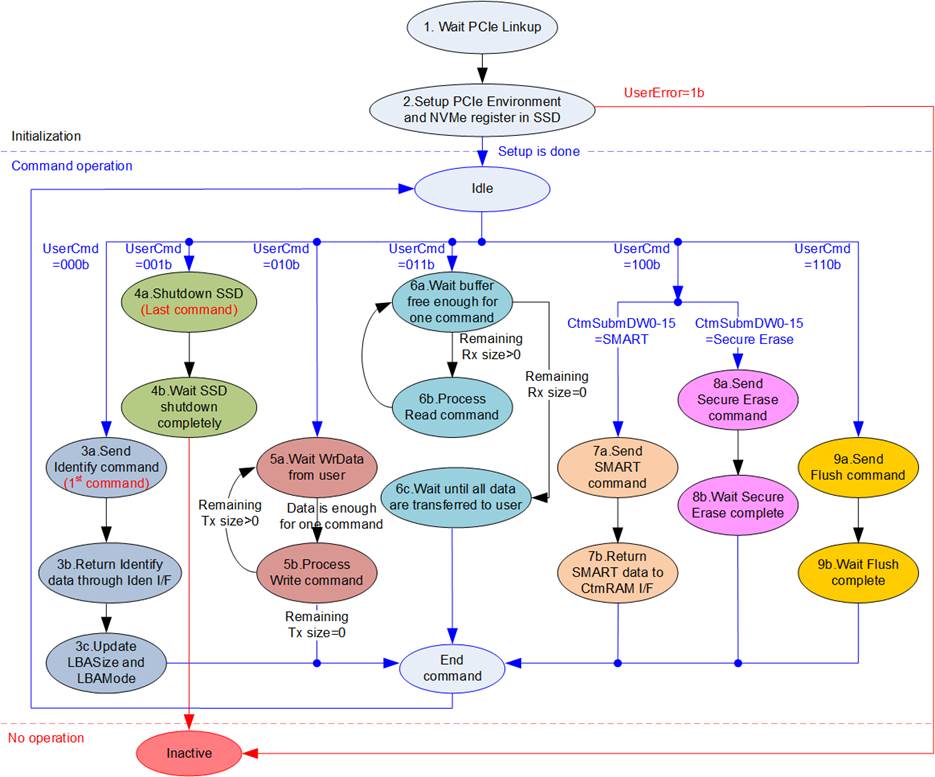

Functional Description

The NVMe IP operation is divided into three phases: IP initialization, Operating command, and Inactive status, as shown in Figure 3. Upon de-asserting the IP reset, the initialization phase begins, and the user should execute the Identify command to check the device status and capacity. During the Operating command phase, the user can perform write and read operations and execute Custom commands such as SMART and Flush. Finally, before shutting down the system, it is recommended to execute the Shutdown command to ensure safe operation.

Figure 3: NVMe IP Operation Flow

The operation flow of the NVMe IP can be described in the following steps.

1) The IP waits for PCIe to be ready by monitoring Linkup status from the PCIe IP core.

2) The IP begins the initialization process by configuring PCIe and NVMe registers. Upon successful completion of the initialization, the IP transitions to the Idle state, where it awaits a new command request from the user. If any error is detected during the initialization process, the IP switches to the Inactive state, with UserError set to 1b.

3) The first command from the user must be the Identify command (UserCmd=000b), which updates the LBASize (disk capacity) and LBAMode (LBA unit=512 bytes or 4 KB).

4) The last command before powering down the system must be Shutdown command (UserCmd=001b). This command is recommended to guarantee the SSD is powered down in a proper sequence. Without the Shutdown command, the write data in the SSD cannot be guaranteed. After finishing the Shutdown command, both the NVMe IP and SSD change to the Inactive state, and no new command can be executed until the IP is reset.

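5) When executing a Write command (UserCmd=010b) with a transfer size exceeding 128 KB, the IP must iterate through the following steps, 5a) and 5b).

a) The IP waits until the write data, sent by the user, is sufficient for one command. The transfer size of each command in the NVMe IP is 128 KB, except for the last loop, which may be less than 128 KB.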

b) The IP sends the Write command to the SSD and then waits until the status response from the SSD. The IP returns to the Idle state only when all the data has been completely transferred. If not, the IP goes back to step 5a) to send the next Write command.

6) Similar to the Write command, when executing a Read command (UserCmd=011b) with a transfer size exceeding 128 KB, the IP must iterate through the following steps, 6a) and 6b).

a) If the remaining transfer size is zero, the IP proceeds to step 6c). Otherwise, it waits until there is sufficient free space in the Data buffer of the NVMe IP for one command (either 128 KB or the remaining transfer size for the last loop).

b) The IP sends the Read command to the SSD and then returns to step 6a).

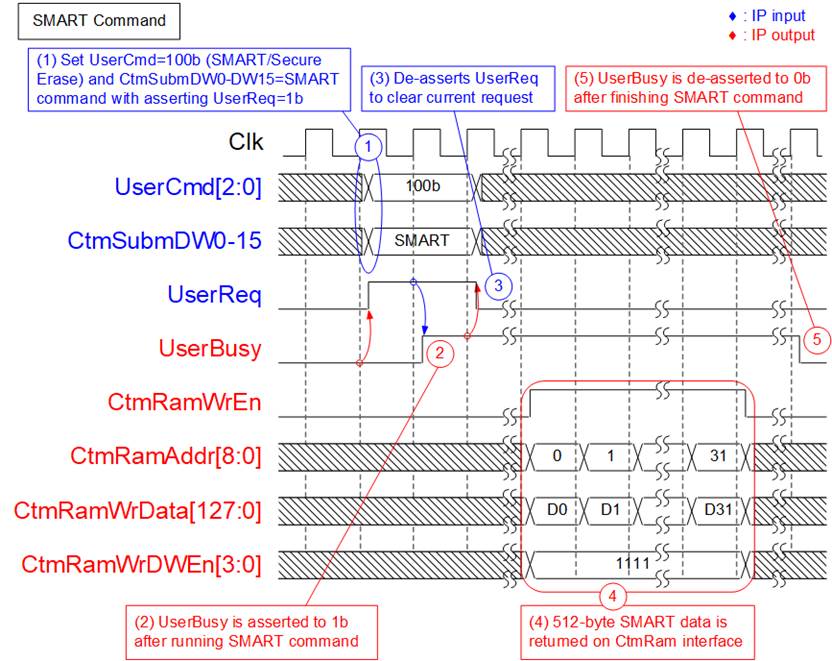

7) When executing a SMART command (UserCmd=100b and CtmSubmDW0-DW15=SMART), 512-byte data is returned upon operation completion.

a) The IP sends the Get Log Page command to retrieve SMART/Health information from the SSD.

b) The 512-byte data response is received from the SSD, and the IP forwards this data through the Custom command RAM interface (CtmRamAddr=0x000-0x01F).

8) For the Secure Erase command (UserCmd=100b and CtmSubmDW0-DW15=Secure Erase), no data transfer occurs during the operation.

a) The IP sends the Secure Erase command to the SSD.

b) The IP waits until the SSD returns a status response to confirm the completion of the operation.

9) For the Flush command (UserCmd=110b), no data transfer occurs during the operation.

a) The IP sends the Flush command to the SSD.

b) The IP waits until the SSD returns a status response to confirm the completion of the operation.
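The Write and Read loops in steps 5) and 6) divide one user request into NVMe commands of at most 128 KB. A minimal sketch of that division is shown below, assuming the commands are issued at consecutive addresses starting from UserAddr. The function name is hypothetical, and the code models only the size arithmetic, not the IP's internal logic.

```python
UNIT_BYTES = 512                        # UserAddr/UserLen are in 512-byte units
CMD_UNITS = 128 * 1024 // UNIT_BYTES    # 128 KB per command = 256 units


def split_transfer(user_addr: int, user_len: int):
    """Yield (start_address, length) per NVMe command, in 512-byte units."""
    if user_len < 1:
        raise ValueError("UserLen must be at least 1")
    addr = user_addr
    remaining = user_len
    while remaining > 0:
        # Every command is 128 KB except possibly the last one.
        length = min(CMD_UNITS, remaining)
        yield addr, length
        addr += length
        remaining -= length


if __name__ == "__main__":
    # A 300 KB request (600 units) becomes two 128 KB commands and one 44 KB command.
    for start, length in split_transfer(0, 600):
        print(start, length)
```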

The design of an NVMe host controller consists of two protocols: NVMe and PCIe. The NVMe protocol is used to interface with the user, while the PCIe protocol is used to interface with PCIe hard IP. Figure 2 shows the hardware inside the NVMe IP which is split into two groups, NVMe and PCIe.

NVMe

The NVMe group supports seven commands, which are split into two categories - Admin commands and NVM commands. Admin commands include Identify, Shutdown, SMART, and Secure Erase, while NVM commands include Write, Read, and Flush. After executing a command, the status returned from the SSD is latched either to AdmCompStatus (for status returned from Admin commands) or IOCompStatus (for status returned from NVM commands), depending on the command type.

The parameters of a Write or Read command are configured through the Control interface of dgIF typeS, while the parameters of a SMART, Secure Erase, or Flush command are set by CtmSubmDW0-DW15 of the Ctm Cmd interface. The data for Write and Read commands is transferred through the FIFO interface, a part of dgIF typeS, and is stored in the IP's Data buffer. For other command types, the Data interface utilizes distinct interfaces: Identify I/F for the Identify command and Custom RAM I/F for the SMART command.

Further details of each submodule are described as follows.

· NVMe Host Controller

The NVMe host controller serves as the core controller within the NVMe IP. It operates in two phases: the initialization phase and the command operation phase. The initialization phase runs once when the system is booted up, for configuring the NVMe register within the SSD. Once the initialization phase is completed, it enters the command operation phase. During this phase, the controller controls the sequence of transmitted and received packets for each command.

To initiate the execution of each command, the command parameters are stored in the Command Parameter, facilitating packet creation. Subsequently, the packet is forwarded to the AsyncCtrl for converting NVMe packets into PCIe packets. After each command operation is executed, a status packet is received from the SSD. The controller decodes the status value, verifying whether the operation was completed successfully or an error occurred. In cases where the command involves data transfer, such as Write or Read command, the controller must handle the order of data packets, which are created and decoded by the NVMe Data controller.

· Command Parameter

The Command Parameter module creates the command packet sent to the SSD and decodes the status packet returned from the SSD. The input and output of this module are controlled by the NVMe host controller. Typically, a command consists of 16 Dwords (1 Dword = 32 bits). When executing Identify, Shutdown, Write, and Read commands, all 16 Dwords are created by the Command parameter module, which are initialized by the user inputs on dgIF typeS. When executing SMART, Secure Erase and Flush commands, all 16 Dwords are directly loaded via CtmSubmDW0-CtmSubmDW15 of Ctm Cmd interface.
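To illustrate the 16-Dword layout loaded through CtmSubmDW0-CtmSubmDW15, the sketch below builds example entries from the opcodes defined in the NVMe specification (Get Log Page = 02h, Flush = 00h, Format NVM = 80h). Several points are assumptions and should be checked against the IP documentation: that Secure Erase maps to Format NVM with SES = 001b, that namespace ID 1 is used, and that the Command Identifier and PRP fields can be left at zero for the IP to manage. All function names are hypothetical.

```python
def _entry(dw0: int, dw1: int = 0, dw10: int = 0):
    """Return 16 Dwords with DW0, DW1, and DW10 set and all others zero."""
    dwords = [0] * 16
    dwords[0], dwords[1], dwords[10] = dw0, dw1, dw10
    return dwords


def smart_entry():
    # Get Log Page: opcode 02h, NSID FFFFFFFFh (assumed: controller-wide log).
    # DW10 = (number of Dwords - 1) << 16 | Log Page ID 02h (SMART/Health).
    numdl = 512 // 4 - 1  # 512-byte log = 128 Dwords, zero-based count = 7Fh
    return _entry(0x02, 0xFFFFFFFF, (numdl << 16) | 0x02)


def flush_entry(nsid: int = 1):
    # Flush: NVM command opcode 00h; NSID 1 is an assumption.
    return _entry(0x00, nsid)


def secure_erase_entry(nsid: int = 1):
    # Assumed mapping: Format NVM (opcode 80h) with SES = 001b in DW10 bits [11:9].
    return _entry(0x80, nsid, 0b001 << 9)


if __name__ == "__main__":
    for name, entry in (("SMART", smart_entry()),
                        ("Flush", flush_entry()),
                        ("Secure Erase", secure_erase_entry())):
        print(name, [f"{dw:08X}" for dw in entry])
```

Each list index corresponds to one CtmSubmDWn input, so index 10 would drive CtmSubmDW10.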

· Data Buffer

The data buffer is a 256 KB simple dual-port RAM implemented by BlockRAM. The buffer stores data transferred between UserLogic and the SSD during Write and Read commands.

· NVMe Data Controller

The NVMe data controller module is used when the command must transfer data such as Identify, SMART, Write, and Read commands. This module manages three data interfaces for transferring with the SSD.

1) The FIFO interface is used with the Data buffer during the execution of Write or Read commands.

2) The Custom RAM interface is used when executing SMART command.

3) The Identify interface is used when executing Identify command.

The NVMe data controller is responsible for creating and decoding of data packets. Similar to the Command Parameter module, the input and output signals of the NVMe data controller module are controlled by the NVMe host controller.

PCIe

The PCIe protocol is a widely used lower-layer protocol for high-speed applications, and the NVMe protocol runs over it. Therefore, the NVMe layer can operate only after the PCIe layer completes its initialization. Two modules are designed to support the PCIe protocol: PCIe controller and AsyncCtrl. Additional details of these modules are provided below.

· PCIe Controller

During the initialization process, the PCIe controller sets up the PCIe environment of the SSD via the CFG interface. Subsequently, PCIe packets are created or decoded through the 128-bit Tx/Rx AXI4-Stream interface. The PCIe controller converts the command packet and data packet from the NVMe module into a PCIe packet, and vice versa.

· AsyncCtrl

AsyncCtrl incorporates asynchronous registers and buffers designed to facilitate clock domain crossing. The user clock frequency must match or exceed the PCIe clock frequency to ensure sufficient bandwidth for continuous packet data transmission. The majority of the logic within the NVMe IP operates in the user clock domain, while the PCIe hard IP operates in the PCIe clock domain.

User Logic

When executing the SMART and Identify commands, each data output interface connects to a simple dual port RAM with byte enable capability. Both the FIFO and RAM have a data width of 128 bits, while their memory depth can be configured to different values. Specifically, the data size for the Identify command is 8 KB, while for the SMART command, it is 512 bytes.
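The RAM depths implied by the sizes above follow from the 128-bit word width. The sketch below computes them and models a write with per-Dword enables, in the way IdenWrDWEn and CtmRamWrDWEn qualify the 128-bit write data. The class is a hypothetical behavioral model for illustration, not the RAM used in the reference design.

```python
WORD_BYTES = 128 // 8  # one 128-bit RAM word = 16 bytes


def ram_depth(data_bytes: int) -> int:
    """Number of 128-bit words needed to hold data_bytes."""
    return data_bytes // WORD_BYTES


class DwordEnableRam:
    """Behavioral model of a 128-bit RAM written with per-Dword enables."""

    def __init__(self, depth: int):
        self.mem = [0] * depth

    def write(self, addr: int, data: int, dw_en: int) -> None:
        word = self.mem[addr]
        for i in range(4):
            if (dw_en >> i) & 1:
                # Bit i of dw_en selects data bits [32*i+31 : 32*i].
                mask = 0xFFFFFFFF << (32 * i)
                word = (word & ~mask) | (data & mask)
        self.mem[addr] = word


if __name__ == "__main__":
    print(ram_depth(8 * 1024))  # Identify data: 512 words, 9-bit address
    print(ram_depth(512))       # SMART data: 32 words, addresses 0x00-0x1F
```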

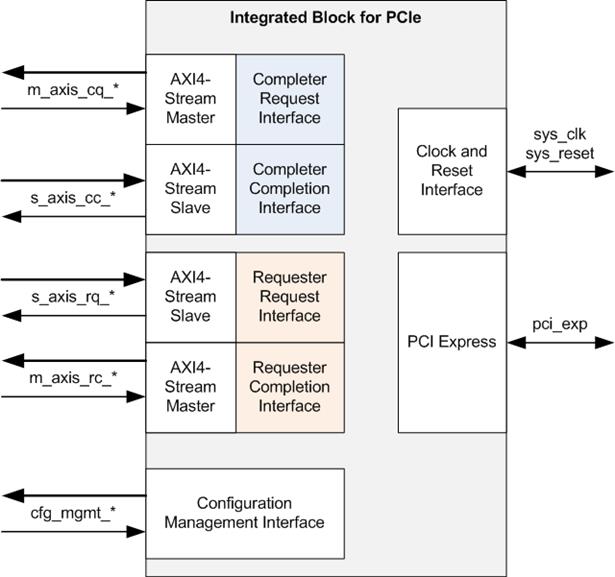

Integrated Block for PCI Express

Xilinx provides the Integrated Block for PCI Express as hard IP for some of its FPGAs. The number of SSDs that can be connected to a single FPGA device is restricted by the number of PCIe hard IPs present on the FPGA. By using the NVMe IP, each PCIe hard IP can be used to connect to a single NVMe SSD. For more information regarding the PCIe hard IP, please refer to the following documents.

PG054: 7 Series FPGAs Integrated Block for PCI Express

PG023: Virtex-7 FPGA Gen3 Integrated Block for PCI Express

PG156: UltraScale Devices Gen3 Integrated Block for PCI Express

PG213: UltraScale+ Devices Integrated Block for PCI Express

Figure 4: Integrated Block for PCI Express (UltraScale+)

Core I/O Signals

Descriptions of the I/O signals are provided in Table 3 - Table 5.

Table 3: User logic I/O Signals (Synchronous to Clk signal)

| Signal | Dir | Description |
|---|---|---|
| Control I/F of dgIF typeS | | |
| RstB | In | Synchronous reset. Active low. It should be de-asserted to 1b when the Clk signal is stable. |
| Clk | In | User clock to run the NVMe IP. The frequency of this clock must be equal to or greater than the PCIeClk frequency, which is output from the PCIe hard IP. PCIeClk frequency is equal to 250 MHz for PCIe Gen3 or 125 MHz for PCIe Gen2. |
| UserCmd[2:0] | In | User Command. Valid when UserReq=1b. The possible values are 000b: Identify, 001b: Shutdown, 010b: Write SSD, 011b: Read SSD, 100b: SMART/Secure Erase, 110b: Flush, 101b/111b: Reserved. |
| UserAddr[47:0] | In | The start address to write/read from the SSD in 512-byte units. Valid when UserReq=1b. If the LBA unit = 4 KB, UserAddr[2:0] must always be set to 000b to align with the 4 KB unit. If the LBA unit = 512 bytes, it is recommended to set UserAddr[2:0]=000b to align with the 4 KB size (SSD page size). An address that is not aligned to 4 KB results in reduced write/read performance for most SSDs. |
| UserLen[47:0] | In | The total transfer size to write/read from the SSD in 512-byte units. Valid from 1 to (LBASize-UserAddr). If the LBA unit = 4 KB, UserLen[2:0] must always be set to 000b to align with the 4 KB unit. This parameter is applicable when UserReq=1b. |
| UserReq | In | Set to 1b to initiate a new command request and reset to 0b after the IP starts the operation, signaled by setting UserBusy to 1b. This signal can only be asserted when the IP is in the Idle state (UserBusy=0b). Command parameters, including UserCmd, UserAddr, UserLen, and CtmSubmDW0-DW15, must be valid and stable when UserReq=1b. UserAddr and UserLen are inputs for Write/Read commands while CtmSubmDW0-DW15 are inputs for SMART, Secure Erase, or Flush commands. |
| UserBusy | Out | Set to 1b when the IP is busy. A new request must not be sent (UserReq set to 1b) while the IP is busy. |
| LBASize[47:0] | Out | The total capacity of the SSD in 512-byte units. Default value is 0. This value is valid after finishing the Identify command. |
| LBAMode | Out | The LBA unit size of the SSD (0b: 512 bytes, 1b: 4 KB). Default value is 0. This value is valid after finishing the Identify command. |
| UserError | Out | Error flag. Asserted to 1b when the UserErrorType is not equal to 0. The flag can be de-asserted to 0b by asserting RstB to 0b. |
| UserErrorType[31:0] | Out | Error status. [0] An error when PCIe class code is incorrect. [1] An error from Controller capabilities (CAP) register, which can occur due to various reasons. - Memory Page Size Minimum (MPSMIN) is not equal to 0. - NVM command set flag (bit 37 of CAP register) is not set to 1. - Doorbell Stride (DSTRD) is not equal to 0. - Maximum Queue Entries Supported (MQES) is less than 7. More details of each register can be found in the NVMeCAPReg signal. [2] An error when the Admin completion entry is not received within the specified timeout. [3] An error when the status register in the Admin completion entry is not 0 or when the phase tag/command ID is invalid. More details can be found in the AdmCompStatus signal. [4] An error when the IO completion entry is not received within the specified timeout. [5] An error when the status register in the IO completion entry is not 0 or when the phase tag is invalid. More details can be found in the IOCompStatus signal. [6] An error when the Completion TLP packet size is incorrect. [7] An error when PCIe hard IP detects an Error correction code (ECC) error from the internal buffer. [8] An error from the Unsupported Request (UR) flag in the Completion TLP packet. [9] An error from the Completer Abort (CA) flag in the Completion TLP packet. [15:10] Reserved. [16] An error from an unsupported LBA unit (LBA unit is not equal to 512 bytes or 4 KB). [31:17] Reserved. Note: Timeout period of bit[2]/[4] is determined by the TimeOutSet input. |
| Data I/F of dgIF typeS | | |
| UserFifoWrCnt[15:0] | In | Write data counter for the Receive FIFO. Used to monitor the FIFO full status. When the FIFO becomes full, data transmission from the Read command temporarily halts. If the data count of the FIFO is less than 16 bits, the upper bits should be padded with 1b to complete the 16-bit count. |
| UserFifoWrEn | Out | Asserted to 1b to write data to the Receive FIFO when executing the Read command. |
| UserFifoWrData[127:0] | Out | Write data bus of the Receive FIFO. Valid when UserFifoWrEn=1b. |
| UserFifoRdCnt[15:0] | In | Read data counter for the Transmit FIFO. Used to monitor the amount of data stored in the FIFO. If the counter indicates an empty status, the transmission of data packets for the Write command temporarily pauses. When the data count of the FIFO is less than 16 bits, the upper bits should be padded with 0b to complete the 16-bit count. |
| UserFifoEmpty | In | Unused for this IP. |
| UserFifoRdEn | Out | Asserted to 1b to read data from the Transmit FIFO when executing the Write command. |
| UserFifoRdData[127:0] | In | Read data returned from the Transmit FIFO. Valid in the next clock after UserFifoRdEn is asserted to 1b. |
| NVMe IP Interface | | |
| IPVersion[31:0] | Out | IP version number. |
| TestPin[31:0] | Out | Reserved to be the IP Test point. |
| TimeOutSet[31:0] | In | Timeout value to wait for completion from SSD. The time unit is equal to 1/(Clk frequency). When TimeOutSet is equal to 0, Timeout function is disabled. |
| PCIeLinkup | In | Set to 1b when LTSSM state of PCIe hard IP is in L0 State. |
| AdmCompStatus[15:0] | Out | Status output from Admin Completion Entry. [0] Set to 1b when the Phase tag or Command ID in Admin completion entry is invalid. [15:1] Status field value of Admin completion entry. |
| IOCompStatus[15:0] | Out | Status output from IO Completion Entry. [0] Set to 1b when Phase tag in IO completion entry is invalid. [15:1] Status field value of IO completion entry. |
| NVMeCAPReg[31:0] | Out | The parameter value of the NVMe capability register when UserErrorType[1] is asserted to 1b. [15:0] Maximum Queue Entries Supported (MQES). [19:16] Doorbell Stride (DSTRD). [20] NVM command set flag. [24:21] Memory Page Size Minimum (MPSMIN). [31:25] Undefined. |
| Identify Interface | | |
| IdenWrEn | Out | Asserted to 1b for sending data output from the Identify command. |
| IdenWrDWEn[3:0] | Out | Dword (32-bit) enable of IdenWrData. Valid when IdenWrEn=1b. 1b: This Dword data is valid, 0b: This Dword data is not available. Bit[0], [1], [2], and [3] correspond to IdenWrData[31:0], [63:32], [95:64], and [127:96], respectively. |
| IdenWrAddr[8:0] | Out | Index of IdenWrData in 128-bit unit. Valid when IdenWrEn=1b. 0x000-0x0FF: 4 KB Identify controller data. 0x100-0x1FF: 4 KB Identify namespace data. |
| IdenWrData[127:0] | Out | 4 KB Identify controller data or Identify namespace data. Valid when IdenWrEn=1b. |
| Custom Interface (Command and RAM) | | |
| CtmSubmDW0[31:0] - CtmSubmDW15[31:0] | In | 16 Dwords of Submission queue entry for SMART, Secure Erase, or Flush command. DW0: Command Dword0, DW1: Command Dword1, ..., and DW15: Command Dword15. These inputs must be valid and stable when UserReq=1b and UserCmd=100b (SMART/Secure Erase) or 110b (Flush). |
| CtmCompDW0[31:0] - CtmCompDW3[31:0] | Out | 4 Dwords of Completion queue entry, output from SMART, Secure Erase, or Flush command. DW0: Completion Dword0, DW1: Completion Dword1, ..., and DW3: Completion Dword3. |
| CtmRamWrEn | Out | Asserted to 1b for sending data output from a Custom command such as the SMART command. |
| CtmRamWrDWEn[3:0] | Out | Dword (32-bit) enable of CtmRamWrData. Valid when CtmRamWrEn=1b. 1b: This Dword data is valid, 0b: This Dword data is not available. Bit[0], [1], [2], and [3] correspond to CtmRamWrData[31:0], [63:32], [95:64], and [127:96], respectively. |
| CtmRamAddr[8:0] | Out | Index of CtmRamWrData when SMART data is received. Valid when CtmRamWrEn=1b. (Optional) Index to request data input through CtmRamRdData for customized Custom commands. |
| CtmRamWrData[127:0] | Out | 512-byte data output from SMART command. Valid when CtmRamWrEn=1b. |
| CtmRamRdData[127:0] | In | (Optional) Data input for customized Custom commands. |
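The bit fields of UserErrorType and NVMeCAPReg in Table 3 can be decoded in software, for example when reading the values back through a debug register. The sketch below follows the bit assignments listed in the table. The function names and message strings are hypothetical.

```python
USER_ERROR_BITS = {
    0: "PCIe class code is incorrect",
    1: "Unsupported Controller capabilities (CAP) register value",
    2: "Admin completion entry timeout",
    3: "Admin completion status error or invalid phase tag/command ID",
    4: "IO completion entry timeout",
    5: "IO completion status error or invalid phase tag",
    6: "Completion TLP packet size is incorrect",
    7: "ECC error detected by PCIe hard IP",
    8: "Unsupported Request (UR) flag in Completion TLP",
    9: "Completer Abort (CA) flag in Completion TLP",
    16: "Unsupported LBA unit",
}


def decode_user_error(value: int):
    """Return the descriptions of all error bits set in UserErrorType."""
    return [text for bit, text in sorted(USER_ERROR_BITS.items())
            if (value >> bit) & 1]


def decode_cap_reg(value: int):
    """Split NVMeCAPReg[31:0] into the fields listed in Table 3."""
    return {
        "MQES": value & 0xFFFF,             # bits [15:0]
        "DSTRD": (value >> 16) & 0xF,       # bits [19:16]
        "NVM_CMD_SET": (value >> 20) & 0x1, # bit [20]
        "MPSMIN": (value >> 21) & 0xF,      # bits [24:21]
    }


def cap_reg_problems(value: int):
    """List the CAP conditions that would raise UserErrorType[1]."""
    cap = decode_cap_reg(value)
    problems = []
    if cap["MPSMIN"] != 0:
        problems.append("MPSMIN is not 0")
    if cap["NVM_CMD_SET"] != 1:
        problems.append("NVM command set flag is not 1")
    if cap["DSTRD"] != 0:
        problems.append("DSTRD is not 0")
    if cap["MQES"] < 7:
        problems.append("MQES is less than 7")
    return problems


if __name__ == "__main__":
    print(decode_user_error(0b100010))
    print(cap_reg_problems(0x3))
```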

Table 4: Physical I/O Signals for PCIe Gen3 Hard IP (Synchronous to PCIeClk)

| Signal | Dir | Description |
|---|---|---|
| PCIe Gen3 hard IP | | |
| PCIeRstB | In | Synchronous reset signal. Active low. De-assert to 1b when PCIe hard IP is not in reset state. |
| PCIeClk | In | Clock output from PCIe hard IP (250 MHz for PCIe Gen3). |
| Configuration Management Interface | | |
| PCIeCfgDone | In | Read/Write operation complete. Asserted for 1 cycle when operation completes. |
| PCIeCfgRdEn | Out | Read enable. Asserted to 1b for a read operation. |
| PCIeCfgWrEn | Out | Write enable. Asserted to 1b for a write operation. |
| PCIeCfgWrData[31:0] | Out | Write data which is used to configure the Configuration and Management registers. |
| PCIeCfgByteEn[3:0] | Out | Byte enable for write data, where bit[0] corresponds to PCIeCfgWrData[7:0], and so on. |
| PCIeCfgAddr[18:0] | Out | Read/Write Address. |
| Requester Request Interface | | |
| PCIeMtTxData[127:0] | Out | Requester request data bus. |
| PCIeMtTxKeep[3:0] | Out | Bit [i] indicates that Dword [i] of PCIeMtTxData contains valid data. |
| PCIeMtTxLast | Out | Asserted in the last cycle of a TLP to indicate the end of the packet. |
| PCIeMtTxReady[3:0] | In | Asserted to accept data. Data is transferred when both PCIeMtTxValid and PCIeMtTxReady are asserted in the same cycle. |
| PCIeMtTxUser[59:0] | Out | Requester request user data. Valid when PCIeMtTxValid is high. |
| PCIeMtTxValid | Out | Asserted to drive valid data on PCIeMtTxData bus. NVMe IP keeps the valid signal asserted during the transfer of a packet. |
| Completer Request Interface | | |
| PCIeMtRxData[127:0] | In | Received data from PCIe hard IP. |
| PCIeMtRxKeep[3:0] | In | Bit [i] indicates that Dword [i] of PCIeMtRxData contains valid data. |
| PCIeMtRxLast | In | Asserted in the last beat of a packet to indicate the end of the packet. |
| PCIeMtRxReady | Out | Indicates that NVMe IP is ready to accept data. |
| PCIeMtRxUser[74:0] | In | Sideband information for the TLP being transferred. Valid when PCIeMtRxValid is high. |
| PCIeMtRxValid | In | Asserted when PCIe hard IP drives valid data on PCIeMtRxData bus. PCIe hard IP keeps the valid signal asserted during the transfer of a packet. |
| Completer Completion Interface | | |
| PCIeSlTxData[127:0] | Out | Completion data from NVMe IP. |
| PCIeSlTxKeep[3:0] | Out | Bit [i] indicates that Dword [i] of PCIeSlTxData contains valid data. |
| PCIeSlTxLast | Out | Asserted in the last cycle of a packet to indicate the end of the packet. |
| PCIeSlTxReady[3:0] | In | Indicates that PCIe hard IP is ready to accept data. |
| PCIeSlTxUser[32:0] | Out | Sideband information for the TLP being transferred. Valid when PCIeSlTxValid is high. |
| PCIeSlTxValid | Out | Asserted to drive valid data on PCIeSlTxData bus. NVMe IP keeps the valid signal asserted during the transfer of a packet. |
| Requester Completion Interface | | |
| PCIeSlRxData[127:0] | In | Received data from PCIe hard IP. |
| PCIeSlRxKeep[3:0] | In | Bit [i] indicates that Dword [i] of PCIeSlRxData contains valid data. |
| PCIeSlRxLast | In | Asserted in the last beat of a packet to indicate the end of the packet. |
| PCIeSlRxReady | Out | Indicates that NVMe IP is ready to accept data. |
| PCIeSlRxUser[84:0] | In | Sideband information for the TLP being transferred. Valid when PCIeSlRxValid is high. |
| PCIeSlRxValid | In | Asserted when PCIe hard IP drives valid data on PCIeSlRxData bus. PCIe hard IP keeps the valid signal asserted during the transfer of a packet. |

Table 5: Physical I/O Signals for PCIe Gen2 Hard IP (Synchronous to PCIeClk)

| Signal | Dir | Description |
|---|---|---|
| PCIe Gen2 hard IP | | |
| PCIeRstB | In | Synchronous reset signal. Active low. De-assert to 1b when PCIe hard IP is not in reset state. |
| PCIeClk | In | Clock output from PCIe hard IP (125 MHz for PCIe Gen2). |
| Configuration Management Interface | | |
| PCIeCfgDone | In | Read/Write operation complete. Asserted for 1 cycle when operation completes. |
| PCIeCfgRdEn | Out | Read enable. Asserted to 1b for a read operation. |
| PCIeCfgWrEn | Out | Write enable. Asserted to 1b for a write operation. |
| PCIeCfgWrData[31:0] | Out | Write data which is used to configure the Configuration and Management registers. |
| PCIeCfgByteEn[3:0] | Out | Byte enable for write data, where bit[0] corresponds to PCIeCfgWrData[7:0], and so on. |
| PCIeCfgAddr[9:0] | Out | Read/Write Address. |
| PCIe Transmit Interface | | |
| PCIeTxData[127:0] | Out | Transmitted data to PCIe hard IP. |
| PCIeTxKeep[15:0] | Out | Bit[i] indicates that Byte[i] of PCIeTxData contains valid data. |
| PCIeTxLast | Out | Asserted in the last cycle of a TLP to indicate the end of the packet. |
| PCIeTxReady[3:0] | In | Asserted to accept data. Data is transferred when both PCIeTxValid and PCIeTxReady are asserted in the same cycle. |
| PCIeTxUser[3:0] | Out | Bit[3]: Transmit source discontinue. Bit[2]: Transmit streamed. Bit[1]: Transmit error forward. Bit[0]: Transmit ECRC Generate. Always set to 0000b. |
| PCIeTxValid | Out | Indicates that NVMe IP is presenting valid data on PCIeTxData. |
| PCIe Receive Interface | | |
| PCIeRxData[127:0] | In | Received data from PCIe hard IP. Valid only if PCIeRxValid is also asserted. |
| PCIeRxKeep[15:0] | In | Bit[i] indicates that Byte[i] of PCIeRxData contains valid data. |
| PCIeRxLast | In | Received End-of-Frame. Valid only if PCIeRxValid is also asserted. |
| PCIeRxReady | Out | Indicates that NVMe IP is ready to accept data. |
| PCIeRxUser[21:0] | In | Bit[0]: Received ECRC error. Bit[14:13]: Indicates the start of a new packet header in PCIeRxData. Bit[21]: Indicates the end of a packet in PCIeRxData. Other bits are ignored in NVMe IP. |
| PCIeRxValid | In | Asserted when PCIe hard IP drives valid data on PCIeRxData bus. PCIe hard IP keeps the valid signal asserted during the transfer of a packet. |

Timing Diagram

Initialization

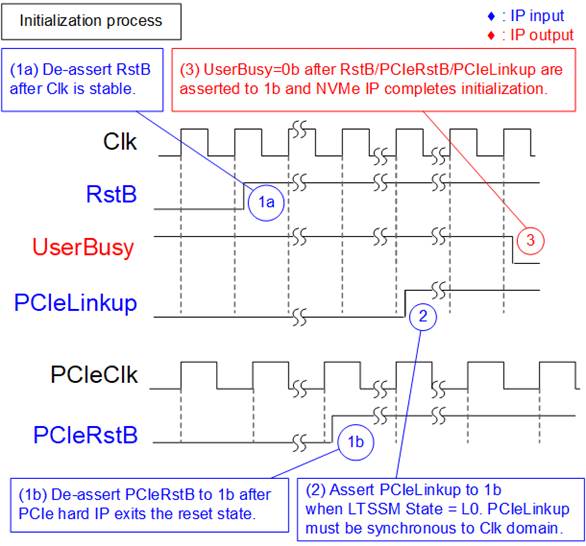

Figure 5: Timing diagram during initialization process

The initialization process of the NVMe IP follows the steps below, as shown in the timing diagram.

1) De-assert RstB and PCIeRstB.

a) De-assert RstB to 1b once the Clk signal is stable.

b) De-assert PCIeRstB to 1b upon the completion of the PCIe reset sequence. Following this, the PCIe hard IP is ready to transfer data with the application layer.

2) After the LTSSM state of the PCIe hard IP reaches the L0 state, assert PCIeLinkup to 1b. An asynchronous register must be used because PCIeLinkup, which must run in the Clk domain, is decoded from the LTSSM signal generated in the PCIeClk domain. After this, the NVMe IP starts its own initialization process.

3) Upon completion of the NVMe IP initialization process, the NVMe IP de-asserts UserBusy to 0b.

After completing all of the above steps, the NVMe IP is ready to receive commands from the user.
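The clock-domain crossing of PCIeLinkup in step 2) is commonly handled with a two-stage register chain. The Python model below is only a behavioral sketch of that common technique, assuming a two-flop synchronizer; the class name `TwoFlopSync` is hypothetical, and the datasheet does not state how many register stages the reference design uses.

```python
class TwoFlopSync:
    """Behavioral model of a two-stage synchronizer clocked by Clk."""

    def __init__(self) -> None:
        self.ff1 = 0  # first stage, samples the signal from the PCIeClk domain
        self.ff2 = 0  # second stage, drives PCIeLinkup in the Clk domain

    def clk(self, linkup_async: int) -> int:
        """Advance one Clk rising edge and return the synchronized output."""
        self.ff2 = self.ff1
        self.ff1 = linkup_async
        return self.ff2


sync = TwoFlopSync()
# The decoded L0 indication goes high and appears at the output one edge later.
outputs = [sync.clk(1) for _ in range(3)]
```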

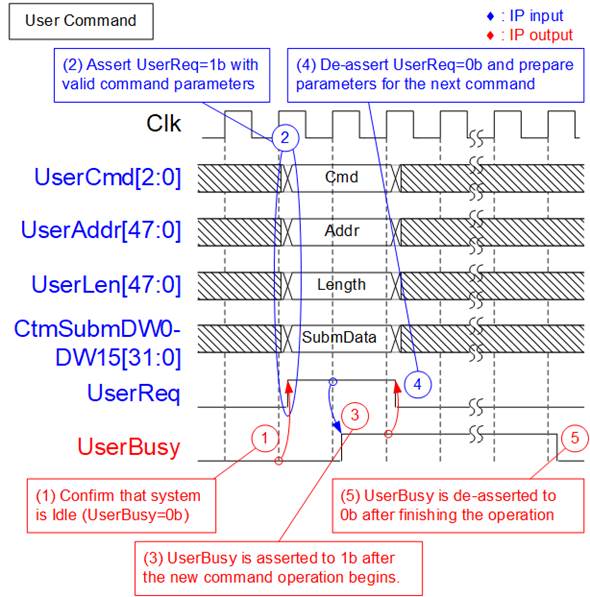

Control interface of dgIF typeS

The dgIF typeS signals can be split into two groups: the Control interface for sending commands and monitoring status, and the Data interface for transferring data streams in both directions.

Figure 6 shows an example of how to send a new command to the IP via the Control interface of dgIF typeS.

Figure 6: Control Interface of dgIF typeS timing diagram

1) UserBusy must be equal to 0b before sending a new command request to confirm that the IP is Idle.

2) The command and its parameters, such as UserCmd, UserAddr, and UserLen, must be valid when UserReq is asserted to 1b to send the new command request.

3) The IP asserts UserBusy to 1b after starting the new command operation.

4) After UserBusy is asserted to 1b, UserReq is de-asserted to 0b to finish the current request. New parameters for the next command can be prepared on the bus. UserReq for the new command must not be asserted to 1b until the current command operation is finished.

5) UserBusy is de-asserted to 0b after the command operation is completed. Next, a new command request can be initiated by asserting UserReq to 1b.

Note: The number of parameters used in each command is different. More details are described below.

- Write and Read commands: UserCmd, UserAddr, and UserLen

- SMART, Secure Erase, and Flush commands: UserCmd and CtmSubmDW0-DW15

- Identify and Shutdown commands: UserCmd
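The request handshake in steps 1) to 5) can be modeled on the user side as a small three-state machine. The following Python sketch is a behavioral illustration only; the state names and the `cmd_pending` input are hypothetical and do not correspond to signals of the IP.

```python
from enum import Enum


class State(Enum):
    IDLE = 0       # waiting for UserBusy=0b before issuing a request
    REQUEST = 1    # UserReq=1b, waiting for the IP to assert UserBusy
    WAIT_DONE = 2  # UserReq=0b, waiting for UserBusy to return to 0b


def step(state: State, user_busy: int, cmd_pending: bool):
    """Return (next_state, user_req) for one clock cycle of user logic."""
    if state is State.IDLE:
        # Step 1)/2): only request when the IP is Idle and a command is ready.
        if cmd_pending and not user_busy:
            return State.REQUEST, 1
        return State.IDLE, 0
    if state is State.REQUEST:
        # Step 3)/4): hold UserReq until UserBusy is seen, then release it.
        if user_busy:
            return State.WAIT_DONE, 0
        return State.REQUEST, 1
    # Step 5): the command is finished once UserBusy is de-asserted.
    if not user_busy:
        return State.IDLE, 0
    return State.WAIT_DONE, 0
```

In this sketch UserReq stays at 0b for the whole WAIT_DONE state, which reflects the rule that a new request must not be asserted until the current command operation is finished.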

Data interface of dgIF typeS

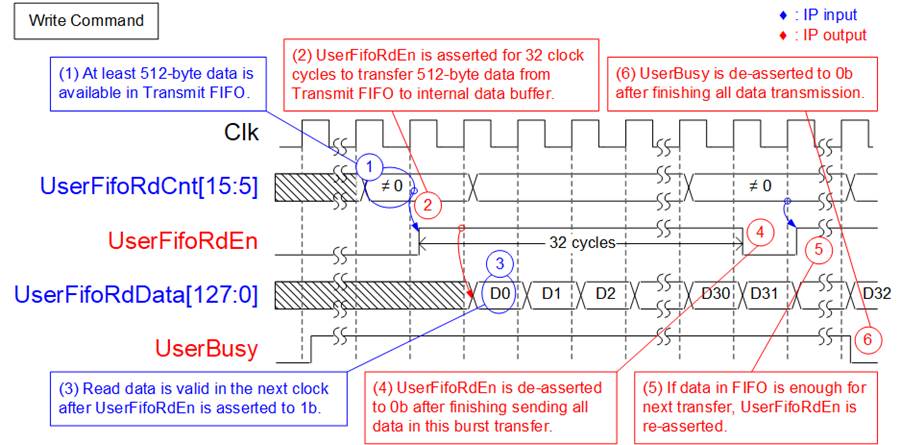

The Data interface of dgIF typeS is used for transferring data stream when operating Write or Read command, and it is compatible with a general FIFO interface. Figure 7 shows the data interface of dgIF typeS when transferring Write data to the IP in the Write command.

Figure 7: Transmit FIFO Interface for Write command

The 16-bit FIFO read data counter (UserFifoRdCnt) shows the total amount of data stored in the Transmit FIFO. When the amount of data is sufficient, 512 bytes of data (32 x 128-bit) are transferred in one burst.

In the Write command, data is read from the Transmit FIFO until all of the data has been transferred. The transfer process is described as follows.

1) Before starting a new burst transfer, the IP waits until at least 512 bytes of data are available in the Transmit FIFO by monitoring UserFifoRdCnt[15:5], which must not be equal to 0.

2) The IP asserts UserFifoRdEn to 1b for 32 clock cycles to read 512-byte data from the Transmit FIFO.

3) UserFifoRdData is valid in the next clock cycle after asserting UserFifoRdEn to 1b, and 32 data words are transferred continuously.

4) After reading the 32nd data (D31), UserFifoRdEn is de-asserted to 0b.

5) Repeat steps 1) to 4) to transfer the next 512-byte data until the total amount of transferred data is equal to the transfer size specified in the command.

6) After the total data is completely transferred, UserBusy is de-asserted to 0b.
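The burst start condition in step 1) amounts to checking the upper bits of the counter. The Python sketch below models that check; the function name `can_start_read_burst` is hypothetical, and the model assumes that UserFifoRdCnt counts 128-bit words, which follows from 32 words making up 512 bytes.

```python
WORDS_PER_BURST = 32  # 32 x 128-bit = 512 bytes


def can_start_read_burst(user_fifo_rd_cnt: int) -> bool:
    """True when UserFifoRdCnt[15:5] is not 0, i.e. at least 32 words are stored."""
    return ((user_fifo_rd_cnt & 0xFFFF) >> 5) != 0


# With 31 words stored the IP keeps waiting; with 32 words a burst can start.
waiting = can_start_read_burst(WORDS_PER_BURST - 1)
ready = can_start_read_burst(WORDS_PER_BURST)
```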

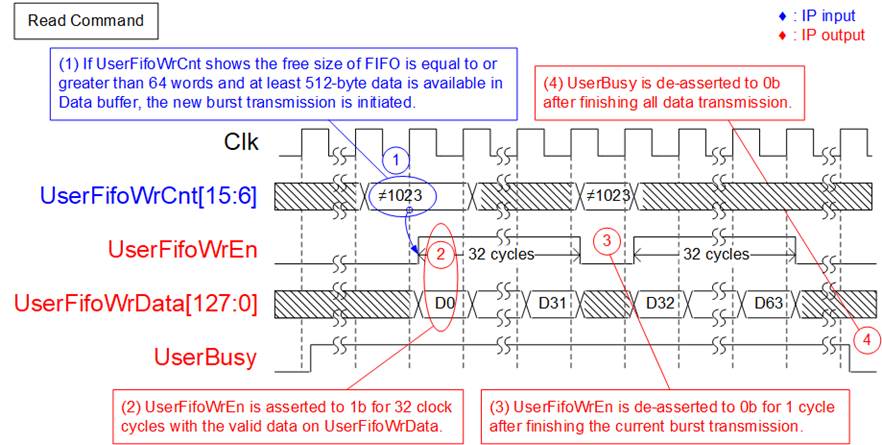

Figure 8: Receive FIFO Interface for Read command

When executing the Read command, the data is transferred from the SSD to the Receive FIFO until the entire data is transferred. The steps for transferring a burst of data are below.

1) Before starting a new burst transmission, the IP checks UserFifoWrCnt[15:6] to ensure that there is enough free space in the Receive FIFO, which is indicated by UserFifoWrCnt[15:6] not being all ones (≠ 1023). The IP also waits until the amount of data received from the SSD reaches at least 512 bytes. Once both conditions are satisfied, the new burst transmission begins.

2) The IP asserts UserFifoWrEn to 1b for 32 clock cycles to transfer 512-byte data from the Data buffer to the user logic.

3) After each 512-byte transfer is finished, UserFifoWrEn is de-asserted to 0b for one clock cycle. If there is additional data remaining to be transferred, repeat steps 1) to 3) until the total data size matches the transfer size specified in the command.

4) After the total data is completely transferred, UserBusy is de-asserted to 0b.
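The two conditions in step 1) of the Read command can be combined into a single check. The Python sketch below is a behavioral illustration; `can_start_write_burst` and its `buffered_bytes` argument (the amount of SSD data already held in the Data buffer) are hypothetical names, not signals of the IP.

```python
def can_start_write_burst(user_fifo_wr_cnt: int, buffered_bytes: int) -> bool:
    """Model of the burst start condition for the Receive FIFO.

    A burst starts only when UserFifoWrCnt[15:6] is not all ones (1023)
    and at least 512 bytes have been received from the SSD.
    """
    has_free_space = ((user_fifo_wr_cnt & 0xFFFF) >> 6) != 0x3FF
    return has_free_space and buffered_bytes >= 512


# An empty Receive FIFO with one full 512-byte block buffered allows a burst.
start = can_start_write_burst(0, 512)
```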

IdenCtrl/IdenName

To ensure proper operation of the system, it is recommended to send the Identify command to the IP as the first command after the system boots up. This command updates important information about the SSD, such as its total capacity (LBASize) and LBA unit size (LBAMode), which are necessary for Write and Read commands to operate correctly. The following rules apply to the input parameters of these commands.

1) The sum of the address (UserAddr) and transfer length (UserLen), the inputs of the Write and Read commands, must not exceed the total capacity (LBASize) of the SSD.

2) If LBAMode is 1b (LBA unit size is 4 KB), the three lower bits (bit[2:0]) of UserAddr and UserLen must be set to 000b to align with the 4 KB unit.
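The two rules above can be checked in user logic or in a testbench before a request is issued. The Python sketch below is one possible check, assuming that UserAddr, UserLen, and LBASize are all expressed in the same 512-byte unit (implied by the 3-bit alignment rule for the 4 KB mode); the function name `check_rw_params` is hypothetical.

```python
def check_rw_params(user_addr: int, user_len: int, lba_size: int, lba_mode: int):
    """Return a list of rule violations for a Write or Read request.

    Assumption: user_addr, user_len, and lba_size share the same 512-byte unit.
    """
    errors = []
    # Rule 1: the accessed range must stay within the SSD capacity.
    if user_addr + user_len > lba_size:
        errors.append("UserAddr + UserLen exceeds LBASize")
    # Rule 2: in 4 KB mode, bit[2:0] of both parameters must be 000b.
    if lba_mode == 1 and ((user_addr & 0x7) or (user_len & 0x7)):
        errors.append("UserAddr/UserLen not aligned to 4 KB")
    return errors


# An aligned request that ends exactly at the end of the device is accepted.
violations = check_rw_params(user_addr=992, user_len=8, lba_size=1000, lba_mode=1)
```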

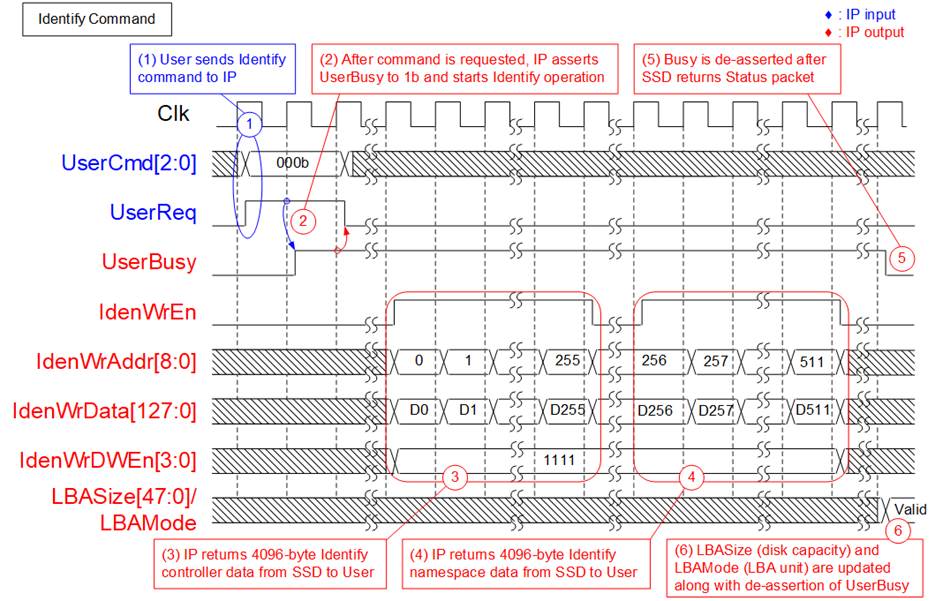

Figure 9: Identify command timing diagram

When executing the Identify command, the following steps are taken.

1) Send the Identify command to the IP (UserCmd=000b and UserReq=1b).

2) The IP asserts UserBusy to 1b after receiving the Identify command.

3) The IP returns 4KB Identify controller data to the user with IdenWrAddr equal to 0-255 and asserts IdenWrEn. IdenWrData and IdenWrDWEn are valid in the same clock cycle as IdenWrEn=1b.

4) The IP returns 4KB Identify namespace data to the user with IdenWrAddr equal to 256-511. IdenWrAddr[8] can be used to determine the data type as Identify controller data or Identify namespace data.

5) UserBusy is de-asserted to 0b after finishing the Identify command.

6) The LBASize and LBAMode of the SSD are simultaneously updated with the values obtained from the Identify command.

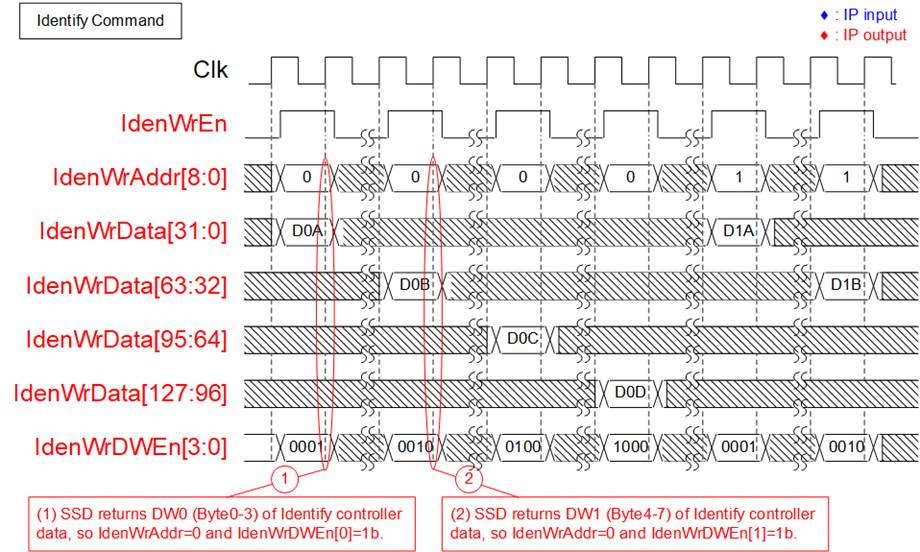

Figure 10: IdenWrDWEn timing diagram

The signal IdenWrDWEn is a 4-bit signal used to validate a 32-bit data signal. Some SSDs return the 4KB Identify controller data and Identify namespace data one word (32-bit) at a time instead of continuously. To forward 32-bit data, one bit of IdenWrDWEn is asserted to 1b in the write cycle, as illustrated in Figure 10. Each bit of IdenWrDWEn (IdenWrDWEn[0], [1], [2], and [3]) corresponds to each 32-bit data of IdenWrData (IdenWrData[31:0], [63:32], [95:64], and [127:96]).
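A user-side memory that captures the Identify data therefore needs a per-Dword write enable. The Python sketch below models such a memory as 512 entries of four Dwords each; the function names and the list-based RAM model are illustrative assumptions, not part of the IP.

```python
def capture_iden_write(ram, wr_addr: int, wr_data: int, dw_en: int) -> None:
    """Store only the Dwords of IdenWrData whose IdenWrDWEn bit is set.

    ram is a list of 512 entries; each entry is a list of four 32-bit Dwords.
    """
    for i in range(4):
        if (dw_en >> i) & 1:  # IdenWrDWEn[i] validates IdenWrData[32*i+31 : 32*i]
            ram[wr_addr][i] = (wr_data >> (32 * i)) & 0xFFFFFFFF


def is_namespace_data(wr_addr: int) -> bool:
    """IdenWrAddr[8]=1b selects Identify namespace data (addresses 256-511)."""
    return bool((wr_addr >> 8) & 1)


ram = [[0] * 4 for _ in range(512)]
# A write cycle in which only the third Dword (bit[95:64]) is valid.
capture_iden_write(ram, 3, 0xDDDDDDDD_CCCCCCCC_BBBBBBBB_AAAAAAAA, 0b0100)
```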

Shutdown

The Shutdown command should be sent as the last command before the system is powered down. The SSD ensures that the data from its internal cache is written to the flash memory before the shutdown process finishes. After the shutdown operation is completed, the NVMe IP and the SSD become inactive. If the SSD is powered down without executing the Shutdown command, the total count of unsafe shutdowns, which is reported in the data returned by the SMART command, is increased.

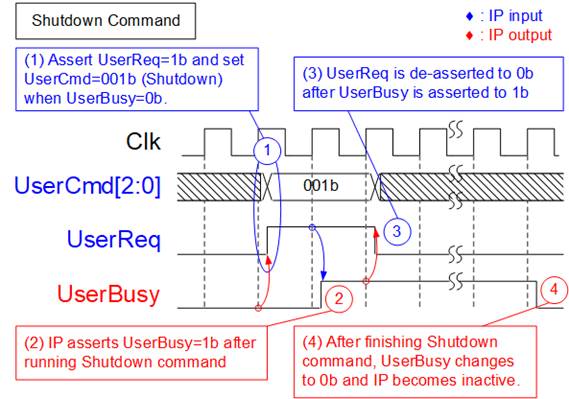

Figure 11: Shutdown command timing diagram

The process for executing the Shutdown command is described below.

1) Ensure that the IP is in an Idle state (UserBusy=0b) before sending the Shutdown command. The user must set UserReq=1b and UserCmd=001b to request the Shutdown command.

2) Once the NVMe IP runs the Shutdown command, UserBusy is asserted to 1b.

3) To clear the current request, UserReq is de-asserted to 0b after UserBusy is asserted to 1b.

4) UserBusy is de-asserted to 0b when the SSD is completely shut down. After the shutdown process is completed, the IP will not receive any further user commands.

SMART

The SMART command checks the health of the SSD. When this command is sent, the SSD returns 512-byte health information. The SMART command parameters are loaded from the CtmSubmDW0-DW15 signals on the Custom command interface. The user must set this 16-Dword data, which is a constant value, before asserting UserReq. Once the SMART data is returned, it can be accessed via the CtmRAM port, as shown in Figure 12.

Figure 12: SMART command timing diagram

Below are the details of how to run the SMART command.

1) The NVMe IP must be Idle (UserBusy=0b) before sending the command request. All input parameters must be stable when UserReq is asserted to 1b to send the request. CtmSubmDW0-DW15 are set to the following constant values for the SMART command.

CtmSubmDW0 = 0x0000_0002

CtmSubmDW1 = 0xFFFF_FFFF

CtmSubmDW2 - CtmSubmDW5 = 0x0000_0000

CtmSubmDW6 = 0x2000_0000

CtmSubmDW7 - CtmSubmDW9 = 0x0000_0000

CtmSubmDW10 = 0x007F_0002

CtmSubmDW11 - CtmSubmDW15 = 0x0000_0000

2) UserBusy is asserted to 1b after the NVMe IP executes the SMART command.

3) UserReq is de-asserted to 0b to clear the current request. Next, user logic can change the input parameters for the next command request.

4) The 512-byte SMART data is returned on the CtmRamWrData signal while CtmRamWrEn is asserted to 1b. CtmRamAddr ranges from 0 to 31 and serves as the data index of the 512-byte data. When CtmRamAddr=0, byte0-15 of the SMART data are valid on CtmRamWrData. CtmRamWrDWEn is the Dword enable for each 32 bits of CtmRamWrData. If CtmRamWrDWEn=1111b, all 128 bits of CtmRamWrData are valid.

5) UserBusy is de-asserted to 0b when the SMART command is finished.

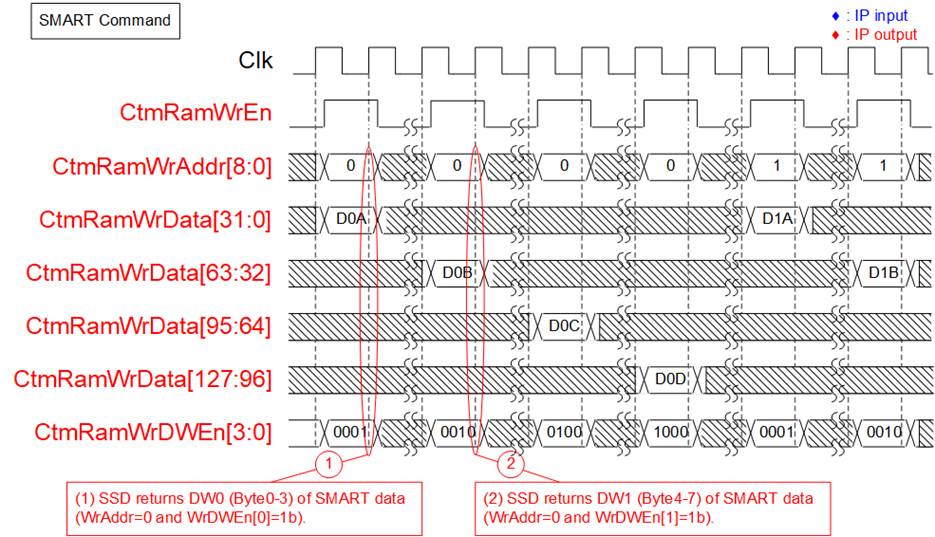

Figure 13: CtmRamWrDWEn timing diagram

Similar to the Identify command, some SSDs return only one Dword (32-bit) of data at a time instead of returning the 512-byte data continuously. In such cases, one bit of CtmRamWrDWEn is asserted to 1b in the write cycle to be the valid signal of the 32-bit CtmRamWrData. Each bit of CtmRamWrDWEn (bit[0], [1], [2], and [3]) corresponds to each 32 bits of CtmRamWrData (bit[31:0], [63:32], [95:64], and [127:96]).
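For reference, the constant SMART parameters listed in step 1) can be assembled and partially decoded as shown in the Python sketch below. The decoding of CtmSubmDW10 follows our reading of the Get Log Page command in the NVMe specification (log identifier in bit[7:0], zero-based Dword count in bit[27:16]) and is an interpretation rather than a statement from this datasheet; the function names are hypothetical, and the other constants are not interpreted here.

```python
def smart_submission_dwords():
    """Return CtmSubmDW0-DW15 for the SMART command as a 16-entry list."""
    dw = [0x0000_0000] * 16
    dw[0] = 0x0000_0002
    dw[1] = 0xFFFF_FFFF
    dw[6] = 0x2000_0000
    dw[10] = 0x007F_0002
    return dw


def decode_get_log_page_dw10(dw10: int):
    """Return (log identifier, transfer size in bytes) decoded from DW10.

    Assumption: field layout as in the NVMe Get Log Page command.
    """
    log_id = dw10 & 0xFF
    num_dwords = ((dw10 >> 16) & 0xFFF) + 1  # the field is zero-based
    return log_id, num_dwords * 4


log_id, size_bytes = decode_get_log_page_dw10(smart_submission_dwords()[10])
```

Under that reading, the decoded transfer size is 512 bytes, which matches the 512-byte health information returned by the SMART command.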

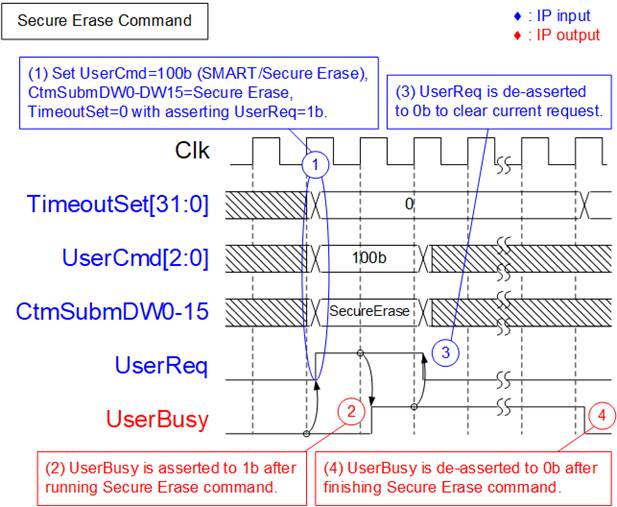

Secure Erase

Secure Erase is a command that erases all user data in the SSD. After the Secure Erase command is executed, the contents of the user data are indeterminate. Since executing this command may require long time for operation, users need to disable timer of the IP by setting TimeoutSet signal to zero value.

Figure 14: Secure Erase command timing diagram

Below are the details of how to run the Secure Erase command.

1) The IP must be in an Idle state (UserBusy=0b) before sending the command request. All input parameters must be stable when UserReq is asserted to 1b to send the request. TimeoutSet and CtmSubmDW0-DW15 are set to the following constant values for the Secure Erase command.

TimeoutSet = 0x0000_0000 (Disable Timer)

CtmSubmDW0 = 0x0000_0080

CtmSubmDW1 = 0x0000_0001

CtmSubmDW2 - CtmSubmDW9 = 0x0000_0000

CtmSubmDW10 = 0x0000_0200

CtmSubmDW11 - CtmSubmDW15 = 0x0000_0000

2) After the NVMe IP executes the Secure Erase command, UserBusy is asserted to 1b.

3) UserReq is then de-asserted to 0b to clear the current request. Next, the user logic can change the input parameters for the next command request.

4) UserBusy is de-asserted to 0b when the Secure Erase command is completed. After the operation is finished, TimeoutSet can be changed to another value to enable the Timeout function of the IP.

Note: Some SSDs may experience a decrease in performance after long data transfer. For such SSDs, the Secure Erase command can help restore their performance.
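The CtmSubmDW10 constant of the Secure Erase command can be cross-checked against the Format NVM command layout of the NVMe specification, where bit[3:0] selects the LBA format and bit[11:9] holds the Secure Erase Settings (SES) field. The Python sketch below decodes those two fields; this mapping is our interpretation of the NVMe specification rather than a statement from this datasheet, and the function name is hypothetical.

```python
def decode_format_nvm_dw10(dw10: int) -> dict:
    """Decode the LBA format and Secure Erase Settings fields from DW10.

    Assumption: field layout as in the NVMe Format NVM command.
    """
    return {
        "lbaf": dw10 & 0xF,        # LBA format index
        "ses": (dw10 >> 9) & 0x7,  # 001b requests a user data erase
    }


fields = decode_format_nvm_dw10(0x0000_0200)
```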

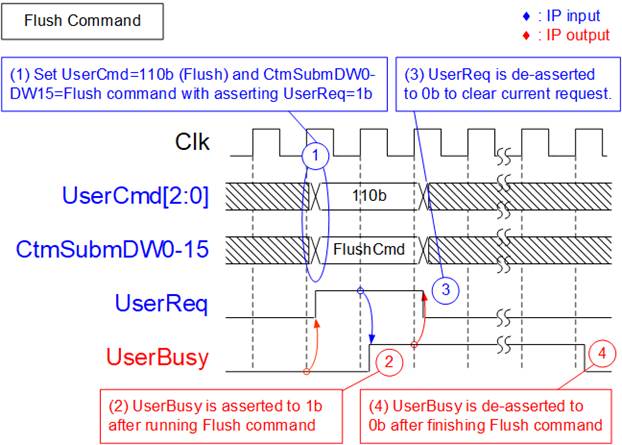

Flush

Figure 15: Flush command timing diagram

The details of how to run the Flush command are as follows.

1) The IP must be Idle (UserBusy=0b) before sending the command request, and all input parameters must be stable when UserReq is asserted to 1b to send the request. CtmSubmDW0-DW15 are set to the following constant values for the Flush command.

CtmSubmDW0 = 0x0000_0000

CtmSubmDW1 = 0x0000_0001

CtmSubmDW2 - CtmSubmDW15 = 0x0000_0000

2) UserBusy is asserted to 1b after the NVMe IP executes the Flush command.

3) UserReq is de-asserted to 0b to clear the current request, and the user logic can change the input parameters for the next command request.

4) UserBusy is de-asserted to 0b when the Flush command is completed.

Using the Flush command ensures that all data from the previous Write command is stored in flash memory, thus preventing data loss in the event of an unexpected power loss.

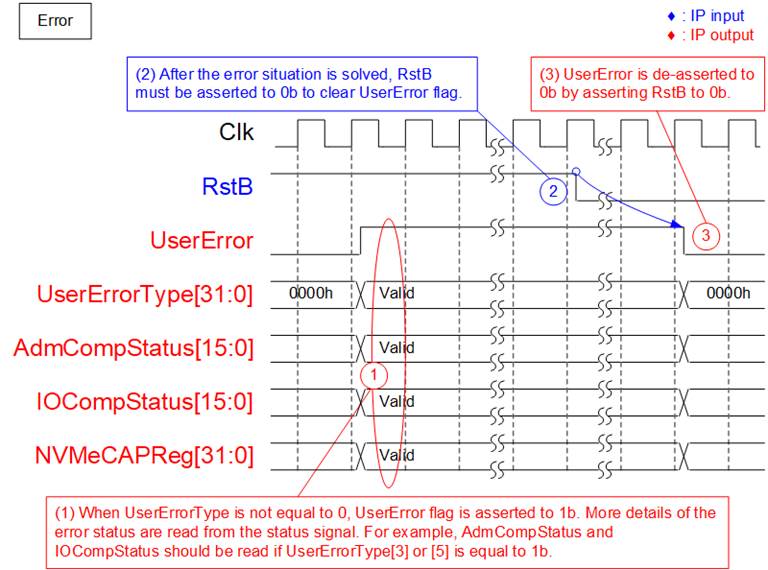

Error

Figure 16: Error flag timing diagram

If an error occurs during the initialization process or when running some commands, the UserError flag is set to 1b. To check the type of error, the UserErrorType should be read. The NVMeCAPReg, AdmCompStatus, and IOCompStatus signals can be used to monitor the error details after UserError is set to 1b.

If an error occurs during the initialization process, it is recommended to read the NVMeCAPReg signal to check the capabilities of the NVMe SSD. If an error occurs while operating a command, it is recommended to read the AdmCompStatus and IOCompStatus signals.

- If bit[3] of UserErrorType is asserted, the AdmCompStatus signal should be checked for more details.

- If bit[5] of UserErrorType is asserted, the IOCompStatus signal should be checked for more details.
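The two bit checks above can be expressed as a small helper for monitoring logic or a testbench. The Python sketch below covers only bit[3] and bit[5], since those are the only UserErrorType bits described in this section; the function name is hypothetical.

```python
def status_signals_to_check(user_error_type: int):
    """Return the status signals worth reading for a given UserErrorType."""
    signals = []
    if (user_error_type >> 3) & 1:  # bit[3]: details are in AdmCompStatus
        signals.append("AdmCompStatus")
    if (user_error_type >> 5) & 1:  # bit[5]: details are in IOCompStatus
        signals.append("IOCompStatus")
    return signals


# Example: only bit[5] is set, so IOCompStatus should be inspected.
to_read = status_signals_to_check(0b100000)
```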

The UserError flag is cleared only by the RstB signal. After the failure is resolved, RstB is asserted to 0b to clear the error flag.

Verification Methods

The NVMe IP Core functionality was verified by simulation and also proven on real board designs using the AC701/KC705/VC707/VC709/ZC706/Zynq Mini-ITX/KCU105/ZCU106/ZCU111/VCU118/VCU128 evaluation boards.

Recommended Design Experience

Experienced design engineers with knowledge of Vivado Tools should easily integrate this IP into their design.

Ordering Information

This product is available directly from Design Gateway Co., Ltd. Please contact Design Gateway Co., Ltd. for pricing and additional information about this product using the contact information on the front page of this datasheet.

Revision History

| Revision | Date | Description |
|---|---|---|
| 3.09 | 12-Dec-23 | Update resource utilization and support Secure Erase command |
| 3.08 | 10-May-23 | Support ZCU111 and VCU128 and update timing diagram of Read Command |
| 3.07 | 12-Oct-20 | Correct information |
| 3.06 | 28-Aug-20 | Add KCU116 support and update company info |
| 3.05 | 26-Jun-20 | Add alternative IP description |
| 3.04 | 28-Jan-20 | Add AB17-M2FMC adaptor board support for KCU105 and ZCU106 |
| 3.03 | 2-May-19 | Add AB18-PCIeX16 support |
| 3.02 | 27-Nov-18 | Add AC701 support |
| 3.01 | 10-Oct-18 | Support Memory Write Request which is not aligned to 128-bit unit |
| 3.00 | 19-July-18 | Support Shutdown, SMART, and Flush command |
| 2.01 | 30-Nov-17 | Support ZCU106 board |
| 2.00 | 7-Jun-17 | Change PCIe interface to connect to PCIe hard IP |
| 1.05 | 9-Dec-16 | Modify buffer to be BRAM and modify user interface to dgIF typeS |
| 1.04 | 27-Oct-16 | Support VC709 and Zynq Mini-ITX |
| 1.03 | 9-Sep-16 | Add KC705 support |
| 1.02 | 5-Sep-16 | Add ZC706 support |
| 1.01 | 15-Jun-16 | Add KCU105 support |
| 1.00 | 2-Jun-16 | Initial Release |