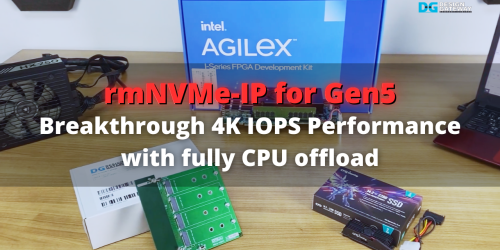

rmNVMe-IP (Random Access & Multi User NVMe IP): Demo & Performance

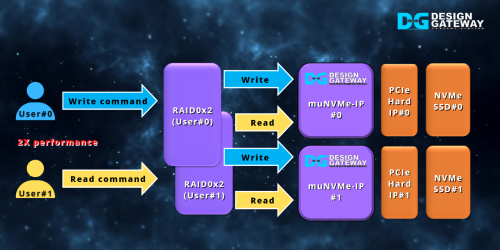

rmNVMe-IP (Random Access & Multi User NVMe IP) is a very high-performance NVMe host controller, highly optimized for high-IOPS random-access applications. rmNVMe-IP supports multiple user interfaces, so several users can read from and write to a single NVMe SSD at the same time.

This demo & performance guide shows you how to set up and run our demo to see the performance of the rmNVMe-IP Core and the SSD.

rmNVMe-IP Core consists of three parts.

Let’s see how to set up the demo and run it to check performance.

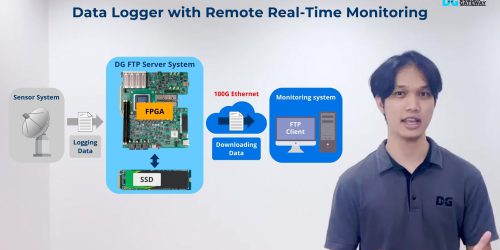

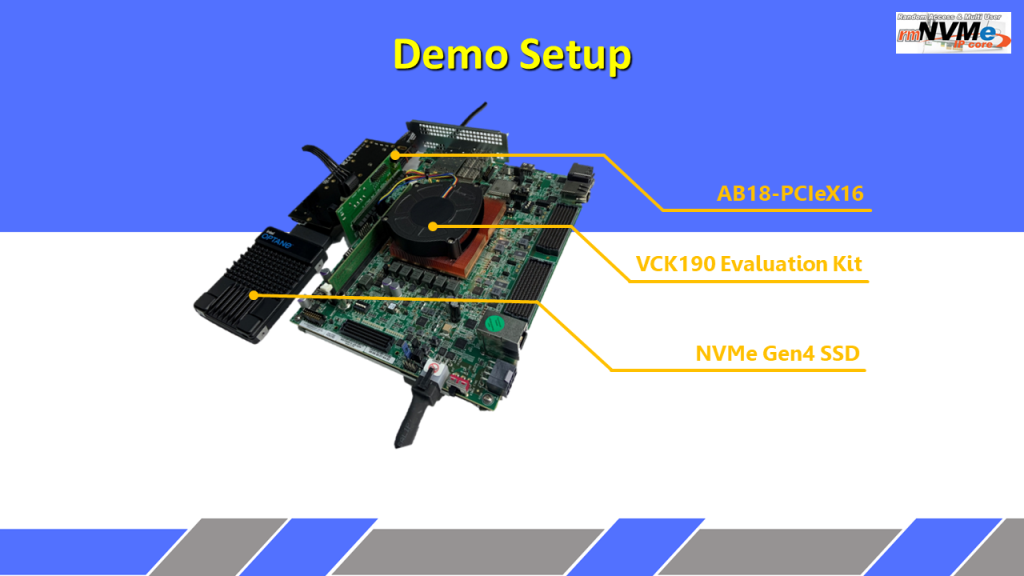

The hardware setup for running the rmNVMe-IP Gen4 demo consists of three components:

- Versal VCK190 Evaluation Kit.

- AB18 adapter board.

- NVMe Gen4 SSD.

The AB18 adapter connects the NVMe Gen4 SSD to the VCK190 board via the PCIe connector.

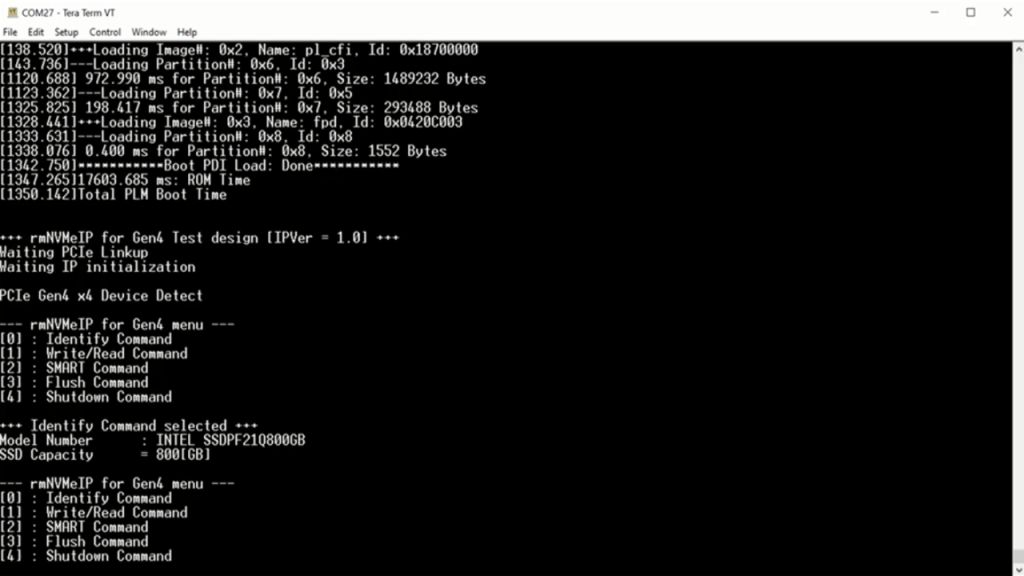

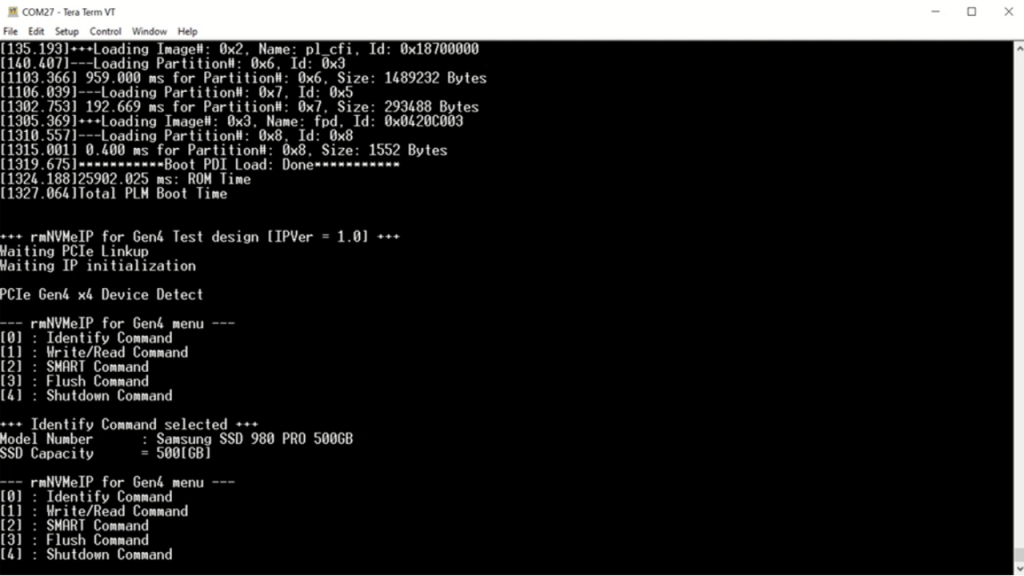

Next, we’ll demonstrate how to write data to and read data from the SSD using the console.

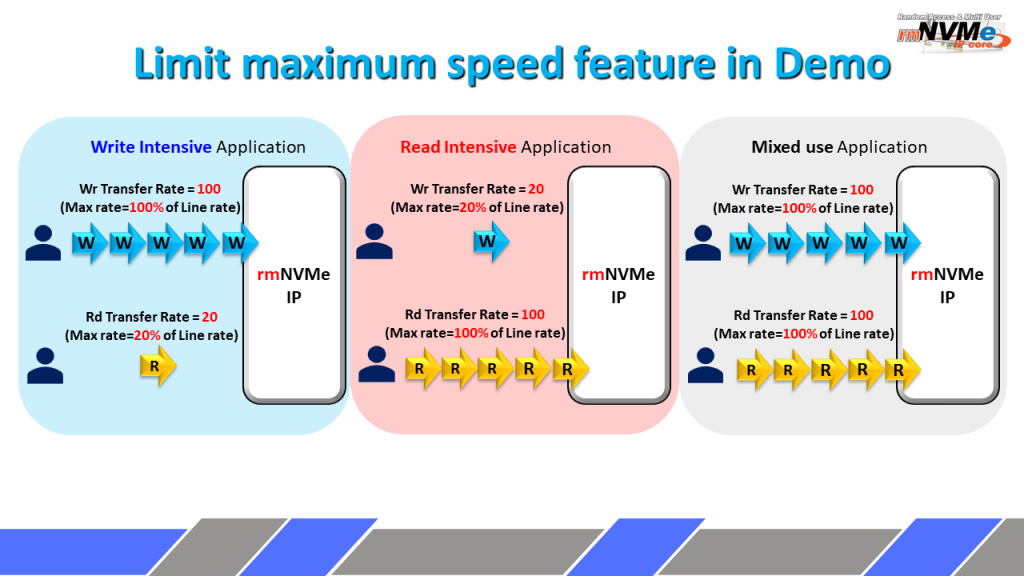

The rmNVMe-IP demo provides a “Transfer rate” setting that lets the user limit the maximum write and read data rates on the NVMe SSD, expressed as a percentage of the maximum data rate. At 100%, data is transferred at the maximum rate.

To evaluate SSD performance for write-intensive, read-intensive, or mixed-use applications, the transfer rate is adjusted separately on the Write and Read interfaces.

For write-intensive applications, the write transfer rate is set to 100%, while the read rate is set to a lower value, such as 20%, to limit the read workload.

For read-intensive applications, the read transfer rate is set to 100% for optimal read performance, and the write rate is set to a lower value, such as 20%.

For mixed-use applications, both write and read transfer rates are set to 100% to achieve maximum performance.
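The three workload profiles above can be written down as a small table of percentages. The sketch below is a hypothetical helper, not part of the rmNVMe-IP demo console: the names `PROFILES` and `rate_cap` are illustrative, and it assumes the 8800 Mbytes/sec maximum line rate quoted for the simultaneous Write/Read test, converting each percentage setting into a rate cap in Mbytes/sec.

```python
# Hypothetical workload profiles: (write %, read %) of the maximum data rate.
# These names are illustrative only; the real demo is driven from its console.
MAX_LINE_RATE_MBPS = 8800  # maximum line rate quoted in this demo (Mbytes/sec)

PROFILES = {
    "write-intensive": (100, 20),
    "read-intensive": (20, 100),
    "mixed-use": (100, 100),
}


def rate_cap(percent: int, max_rate: float = MAX_LINE_RATE_MBPS) -> float:
    """Return the transfer-rate cap in Mbytes/sec for a percentage setting."""
    # Assumed valid range for this sketch; the demo's own limits may differ.
    if not 0 < percent <= 100:
        raise ValueError("transfer rate must be between 1 and 100 percent")
    return max_rate * percent / 100


if __name__ == "__main__":
    for name, (write_pct, read_pct) in PROFILES.items():
        print(f"{name:16s} write cap {rate_cap(write_pct):7.1f} Mbytes/sec, "
              f"read cap {rate_cap(read_pct):7.1f} Mbytes/sec")
```

With these assumptions, a 20% setting caps the throttled direction at 1760 Mbytes/sec, which matches the figure used later in the simultaneous Write/Read test.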

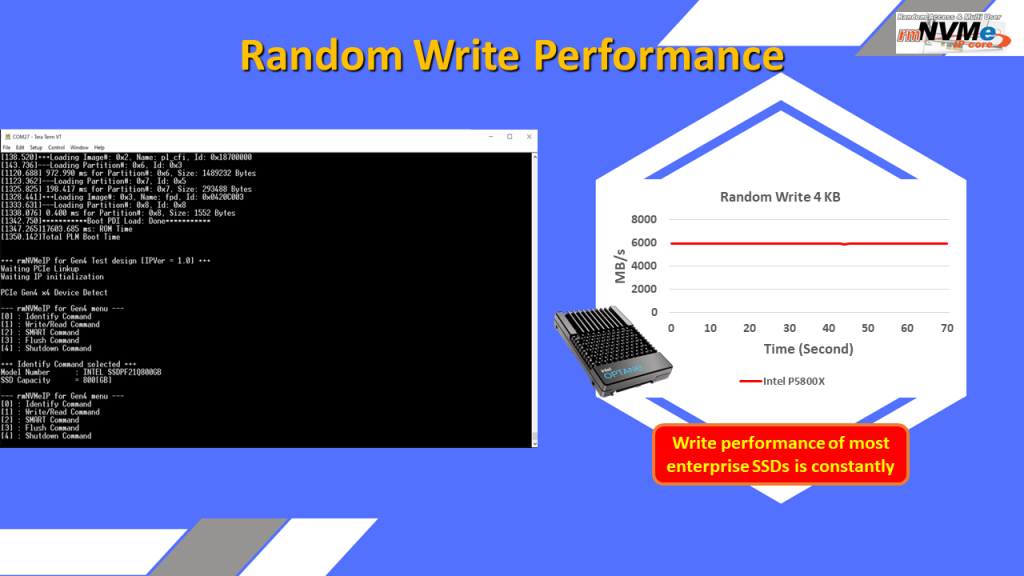

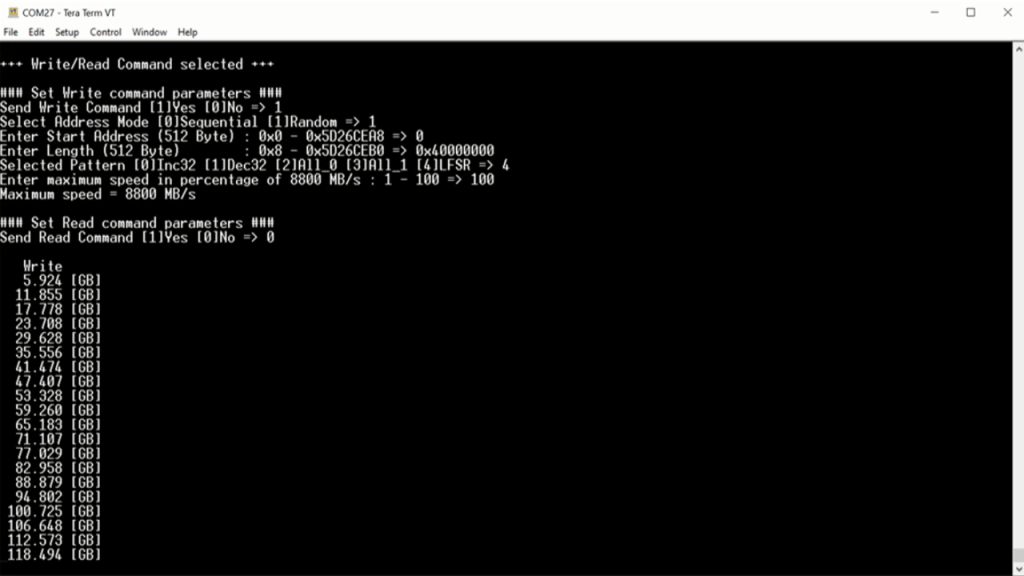

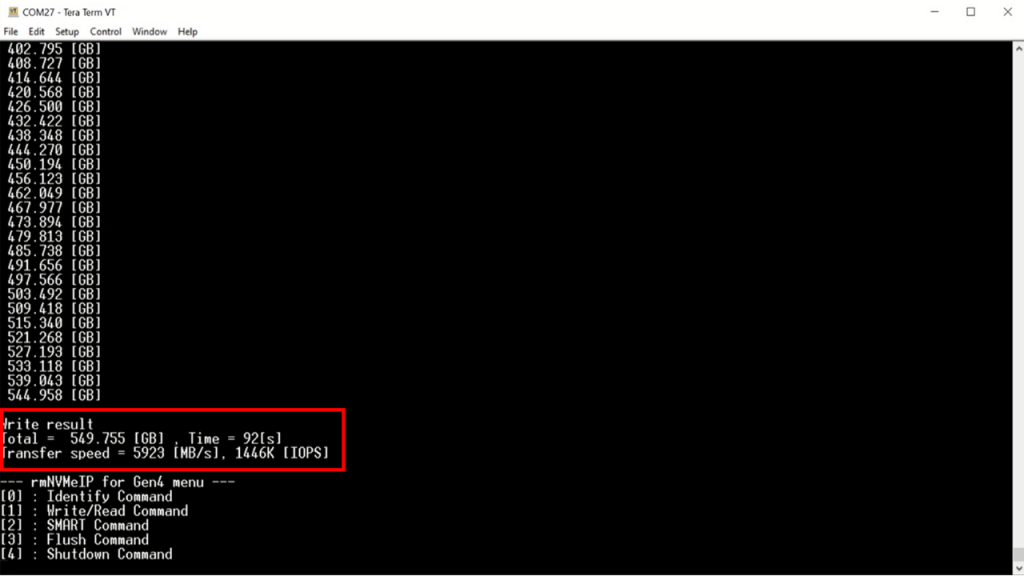

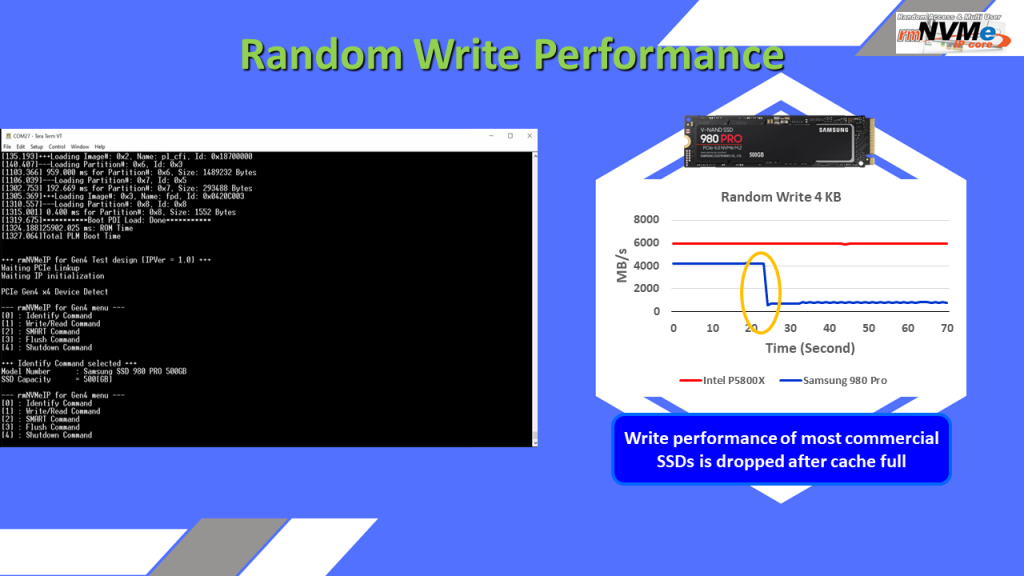

First, let’s look at the random-access Write command demo with two SSDs: an enterprise SSD and a commercial SSD.

The first SSD, the Intel P5800X enterprise SSD, is tested with 4-Kbyte random writes transferring 550 Gbytes of data. Write performance is very good, holding a constant 5900 Mbytes/sec.
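Because every command moves a fixed 4-Kbyte block, a sustained throughput translates directly into IOPS. The sketch below converts the P5800X result into commands per second and estimates the test duration. It assumes 1 Mbyte = 10^6 bytes, 1 Gbyte = 10^9 bytes and 1 Kbyte = 1024 bytes; the demo does not state which convention it uses, so treat the numbers as approximate.

```python
# Convert a sustained 4-Kbyte random-write throughput into IOPS and estimate
# the duration of the 550-Gbyte P5800X write test.
# Unit assumptions (not stated in the demo): 1 Mbyte = 10**6 bytes,
# 1 Gbyte = 10**9 bytes, 1 Kbyte = 1024 bytes, so one command moves 4096 bytes.
BLOCK_BYTES = 4 * 1024


def iops_from_throughput(mbytes_per_sec: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Commands per second needed to sustain the given throughput."""
    return mbytes_per_sec * 10**6 / block_bytes


def transfer_seconds(total_gbytes: float, mbytes_per_sec: float) -> float:
    """Time to move total_gbytes at a constant rate."""
    return total_gbytes * 10**9 / (mbytes_per_sec * 10**6)


if __name__ == "__main__":
    rate = 5900  # Mbytes/sec, constant write speed measured on the Intel P5800X
    print(f"~{iops_from_throughput(rate):,.0f} IOPS")
    print(f"~{transfer_seconds(550, rate):.0f} s to write 550 Gbytes")
```

Under these assumptions the P5800X sustains roughly 1.44 million 4-Kbyte write commands per second, which is the kind of IOPS load rmNVMe-IP is built to keep up with.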

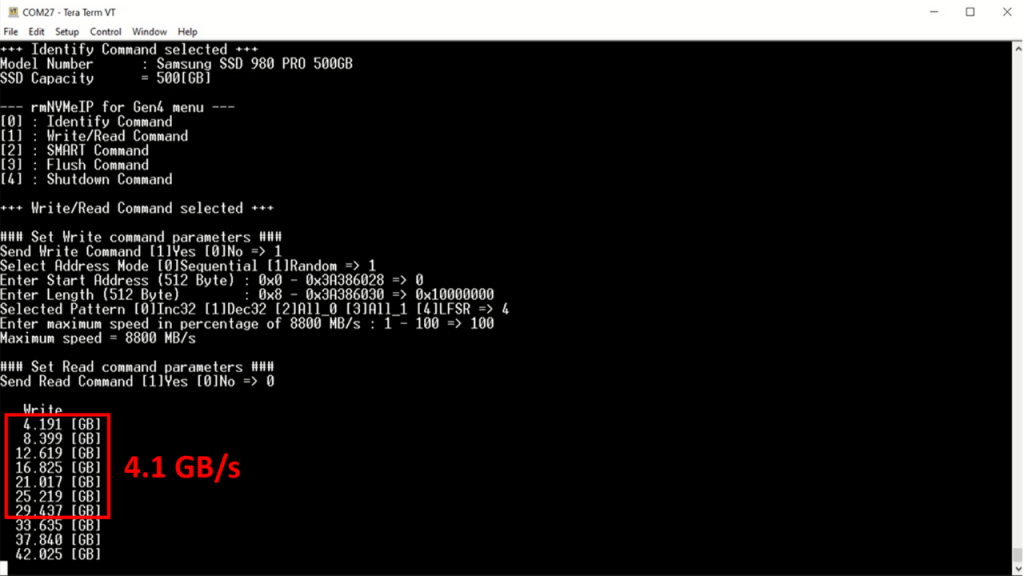

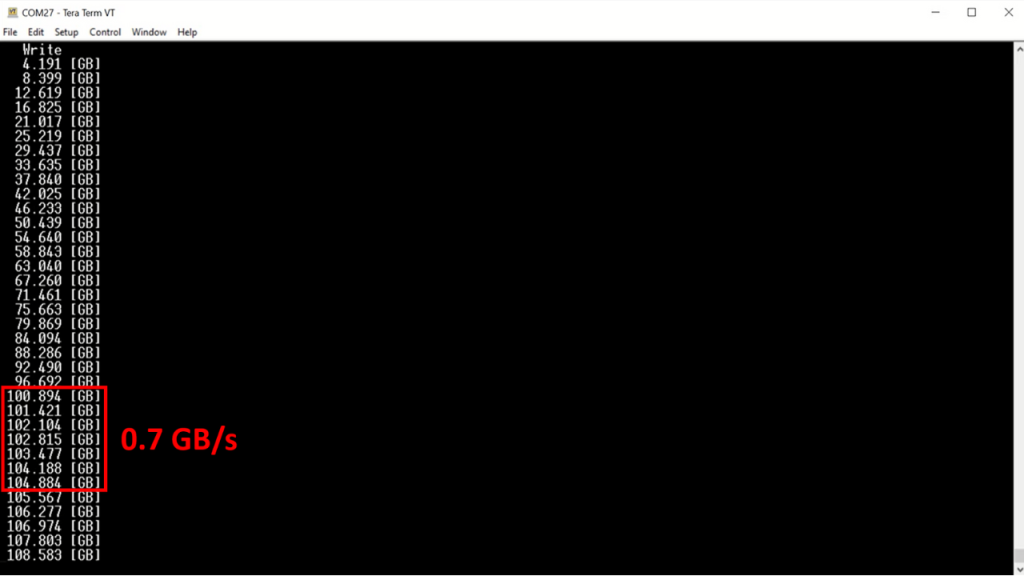

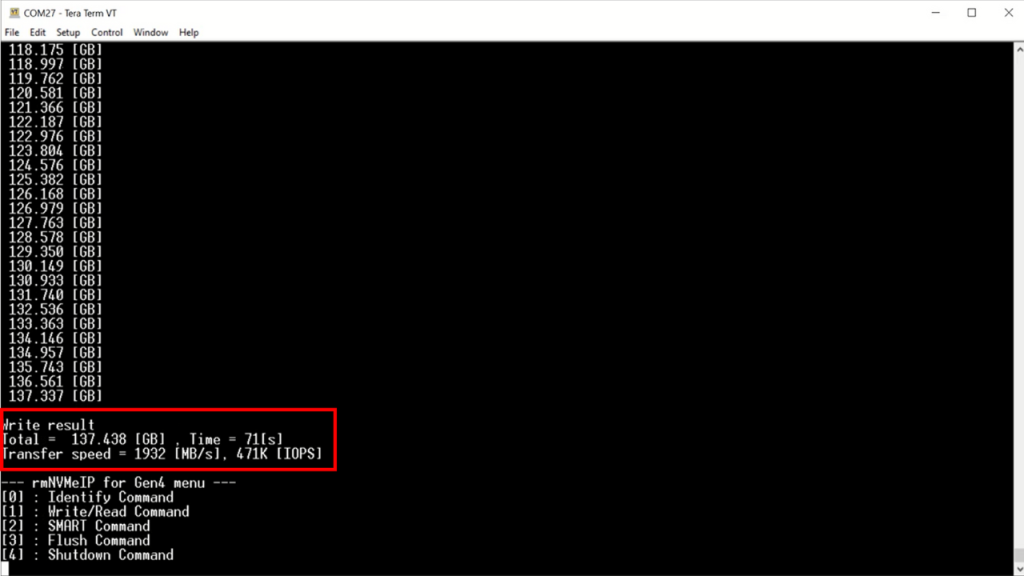

Next, the commercial Samsung 980 Pro SSD is tested under the same condition: 4-Kbyte random writes over a large data size.

After 100 Gbytes of data has been written, the write speed drops from 4200 Mbytes/sec to 700 Mbytes/sec. This is a common characteristic of commercial SSDs once their cache is full, and it is important to consider when choosing an SSD that meets your system requirements.
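To see how much the cache limit matters for long recordings, the speed drop can be modeled as a single step: about 4200 Mbytes/sec for the first 100 Gbytes, then about 700 Mbytes/sec. This two-phase model is a simplifying assumption (real drives may degrade more gradually), and the 550-Gbyte size below reuses the P5800X test size only for comparison; the exact size of the 980 Pro test is not stated.

```python
# Two-phase model of a commercial SSD whose write speed drops once its write
# cache is full. Default figures come from the Samsung 980 Pro demo. The sharp
# single step is an assumption; real drives may slow down more gradually.
def average_write_rate(total_gb: float, cache_gb: float = 100,
                       fast_mbps: float = 4200, slow_mbps: float = 700) -> float:
    """Average Mbytes/sec over a write of total_gb Gbytes (1 Gbyte = 1000 Mbytes)."""
    fast_gb = min(total_gb, cache_gb)       # portion written before the cache fills
    slow_gb = max(total_gb - cache_gb, 0)   # portion written after the cache fills
    seconds = fast_gb * 1000 / fast_mbps + slow_gb * 1000 / slow_mbps
    return total_gb * 1000 / seconds


if __name__ == "__main__":
    for size_gb in (50, 100, 550):  # hypothetical workload sizes
        print(f"{size_gb:4d} Gbytes -> {average_write_rate(size_gb):6.0f} Mbytes/sec average")
```

In this model, a 550-Gbyte write averages only about 825 Mbytes/sec, compared with the constant 5900 Mbytes/sec of the enterprise SSD over the same size.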

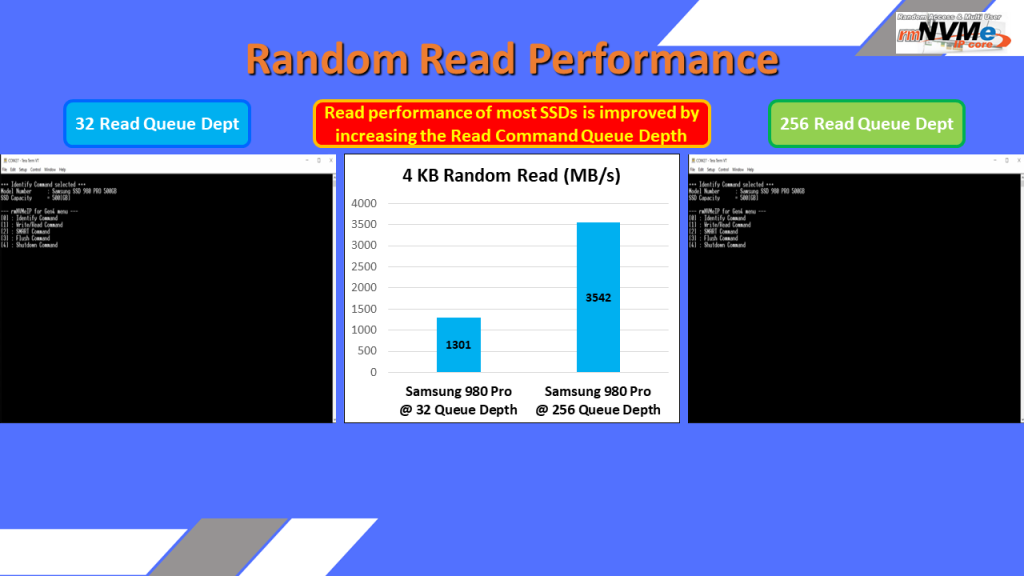

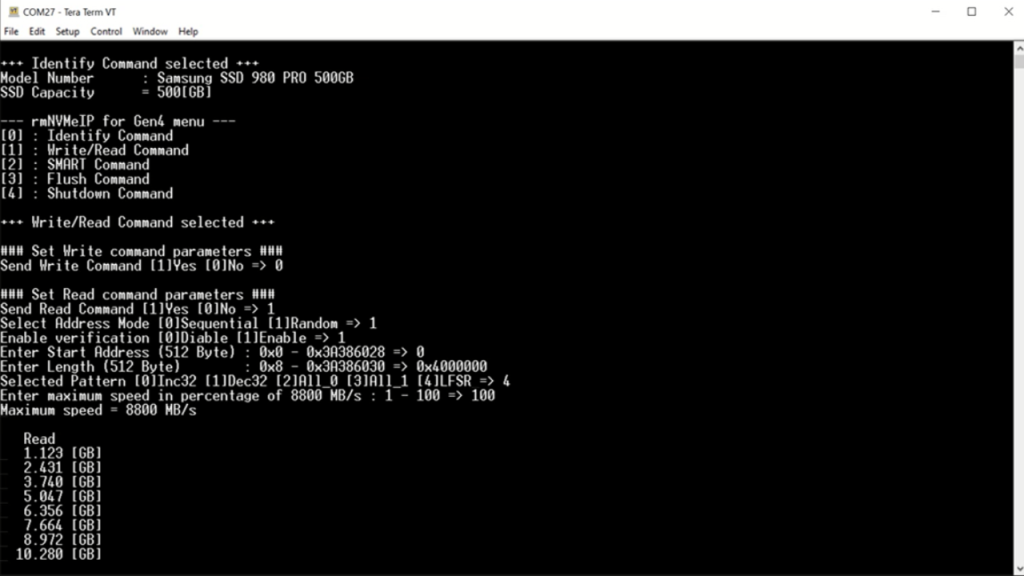

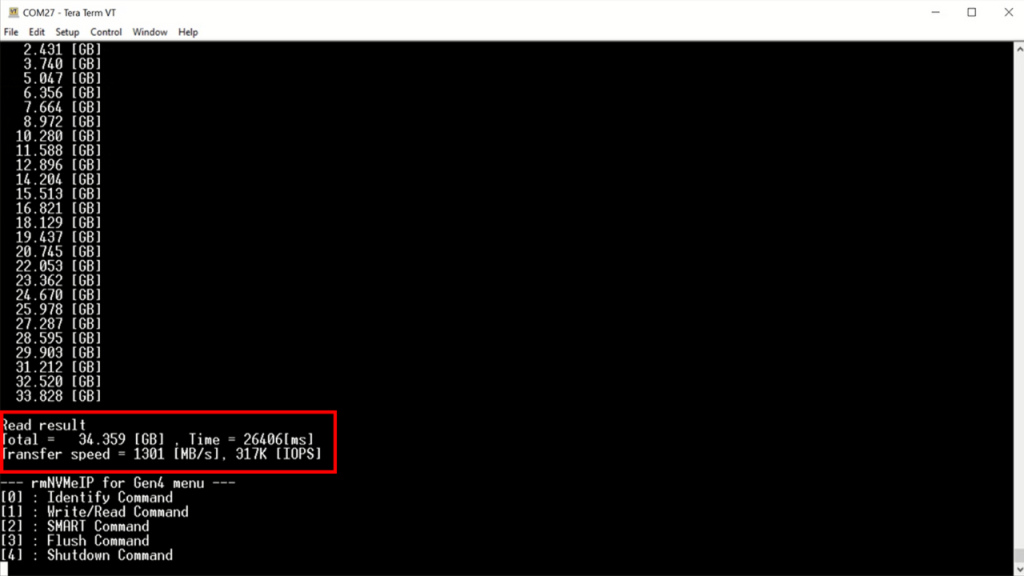

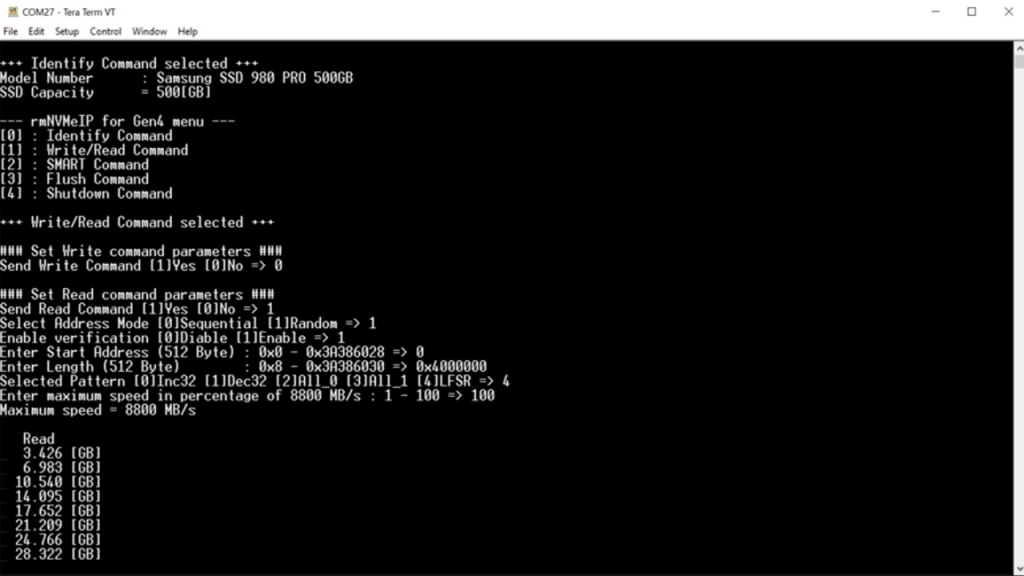

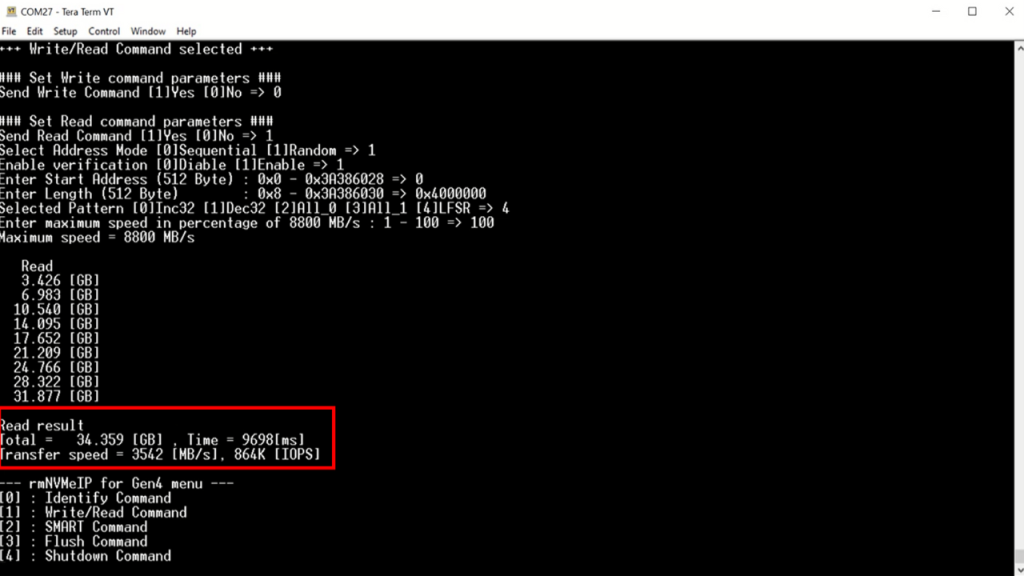

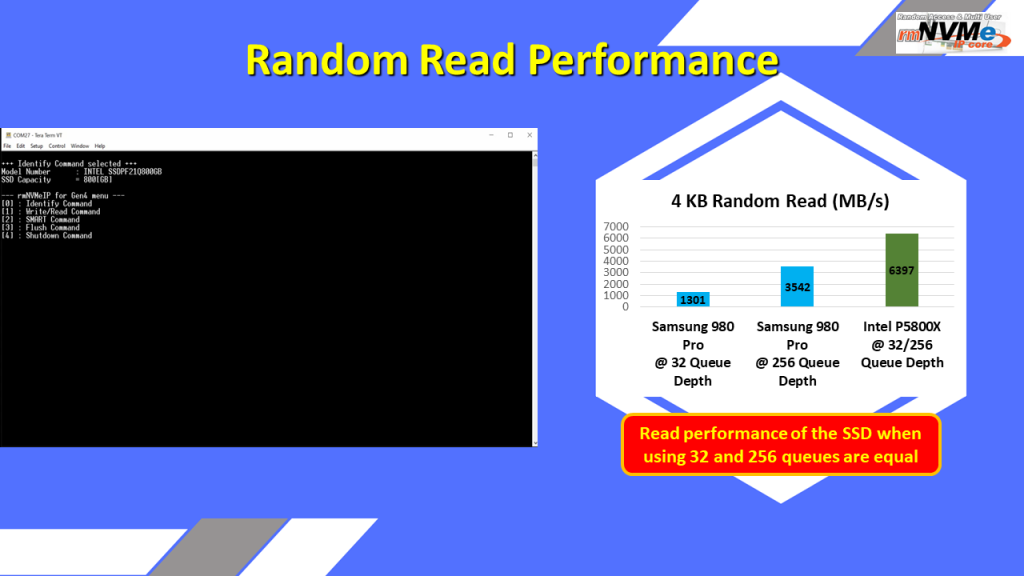

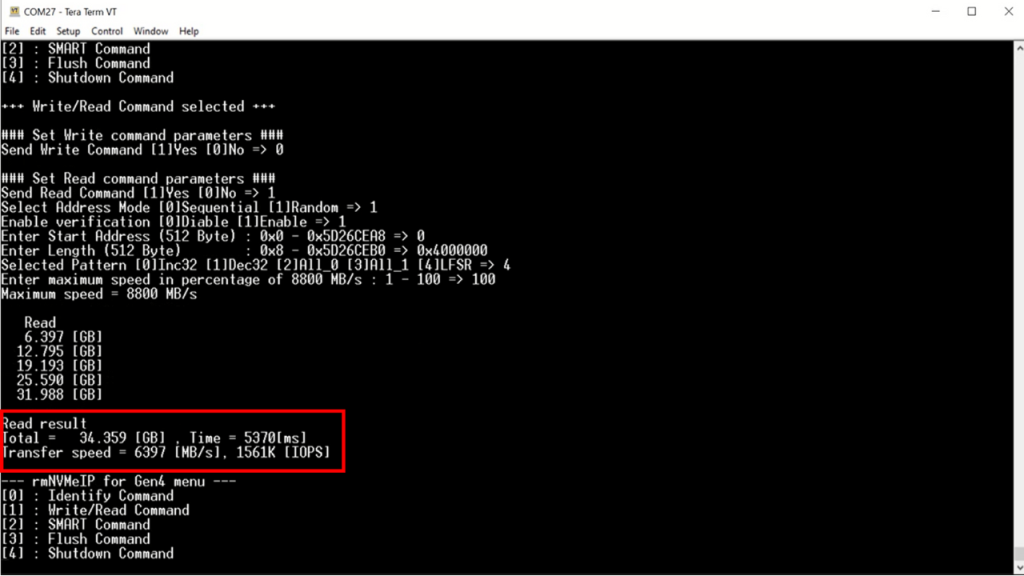

Next, we’ll demonstrate the random-access Read command under different test conditions.

First, we show the results for the commercial Samsung 980 Pro SSD at command queue depths of 32 and 256. The average read speed is 1301 Mbytes/sec at queue depth 32 and 3542 Mbytes/sec at queue depth 256.

This shows that the read speed improves with a deeper command queue.

With the enterprise SSD, the Intel P5800X, the read speed is about 6397 Mbytes/sec at both queue depth 32 and queue depth 256.
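One way to interpret these read results is Little's law, a general queueing relation that is not part of the rmNVMe-IP documentation: average latency equals the number of outstanding commands divided by the completion rate. The sketch below applies it to the measured speeds, assuming the command queue stays full, 1 Mbyte = 10^6 bytes and 4096 bytes per command. The outputs are rough implied latencies, not measured values.

```python
# Little's law: average latency = outstanding commands / completion rate.
# Assumptions: the queue stays full during the test, 1 Mbyte = 10**6 bytes and
# each command moves 4096 bytes. Results are rough estimates, not measurements.
BLOCK_BYTES = 4096


def implied_latency_us(queue_depth: int, mbytes_per_sec: float) -> float:
    """Average per-command latency in microseconds implied by Little's law."""
    iops = mbytes_per_sec * 10**6 / BLOCK_BYTES
    return queue_depth / iops * 10**6


if __name__ == "__main__":
    measurements = [  # (SSD, queue depth, average read speed in Mbytes/sec)
        ("Samsung 980 Pro", 32, 1301),
        ("Samsung 980 Pro", 256, 3542),
        ("Intel P5800X", 32, 6397),
        ("Intel P5800X", 256, 6397),
    ]
    for ssd, qd, speed in measurements:
        print(f"{ssd:16s} QD{qd:<4d} ~{implied_latency_us(qd, speed):6.1f} us")
```

Read this way, the 980 Pro implies roughly 100 us per command at queue depth 32, so its throughput is latency-bound and more outstanding commands raise it. The P5800X implies about 20 us at queue depth 32 and no throughput gain at 256, which suggests it is already saturated at the lower depth.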

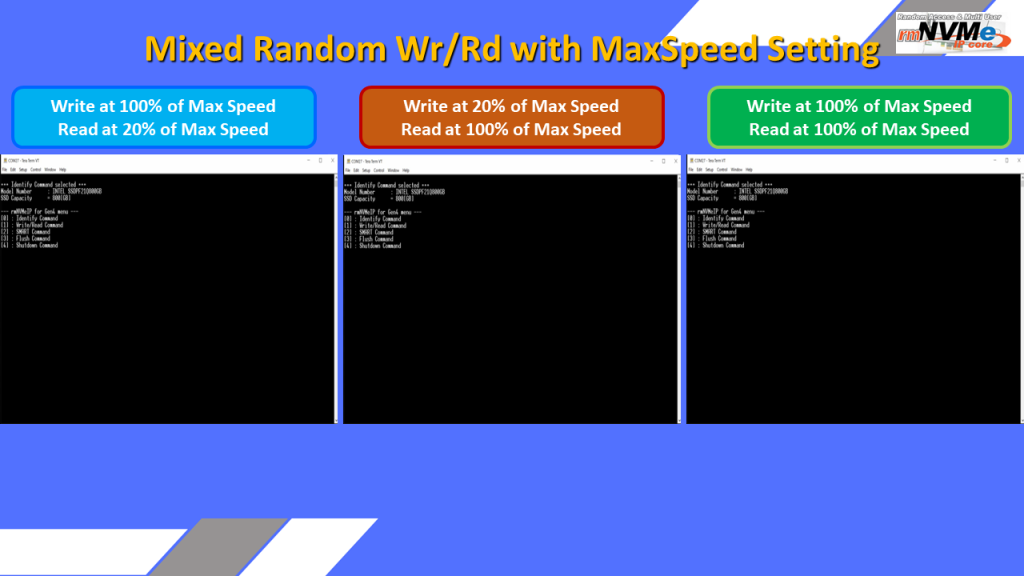

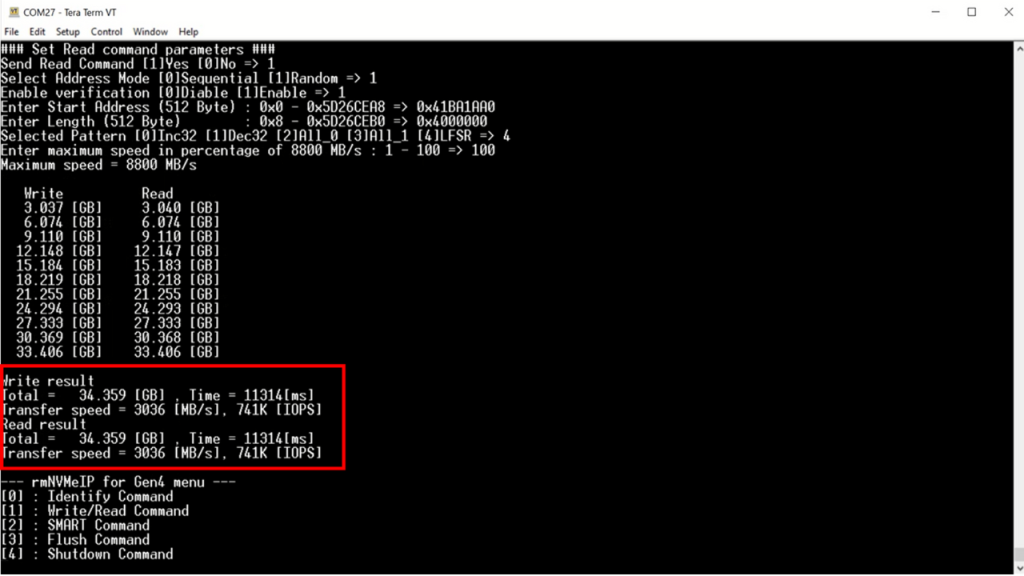

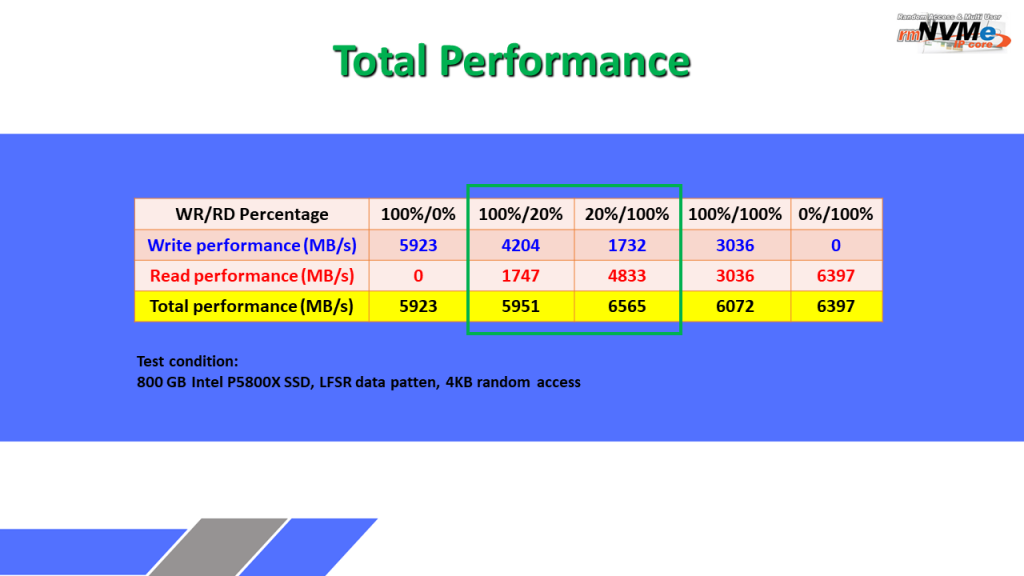

Finally, let’s look at the demo running Write and Read commands simultaneously on the Intel P5800X SSD.

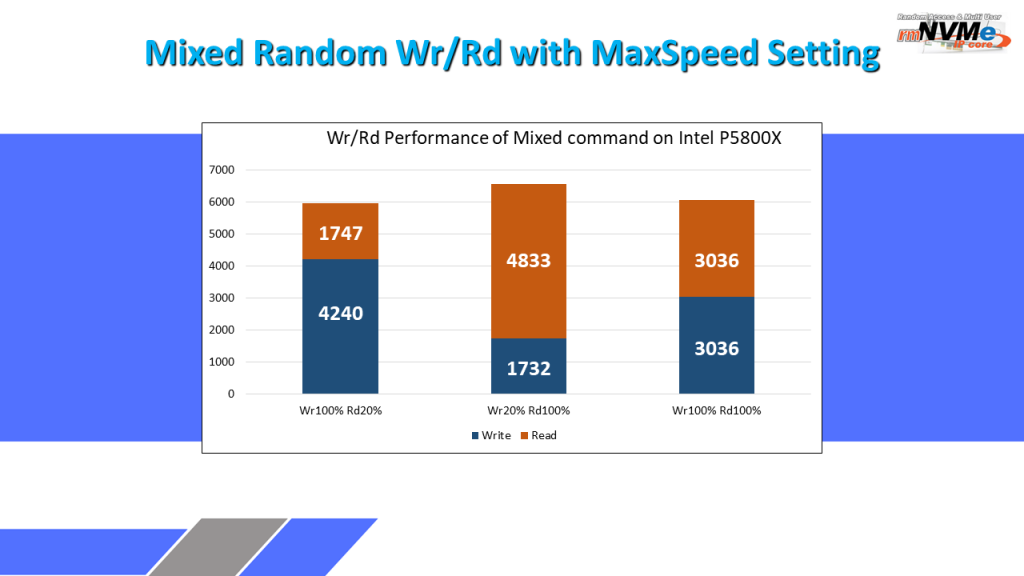

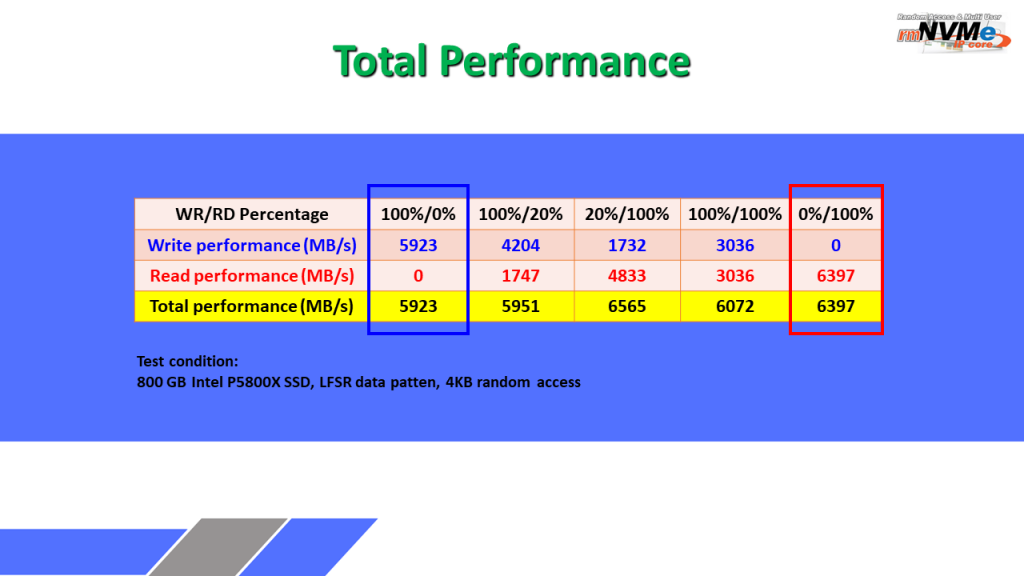

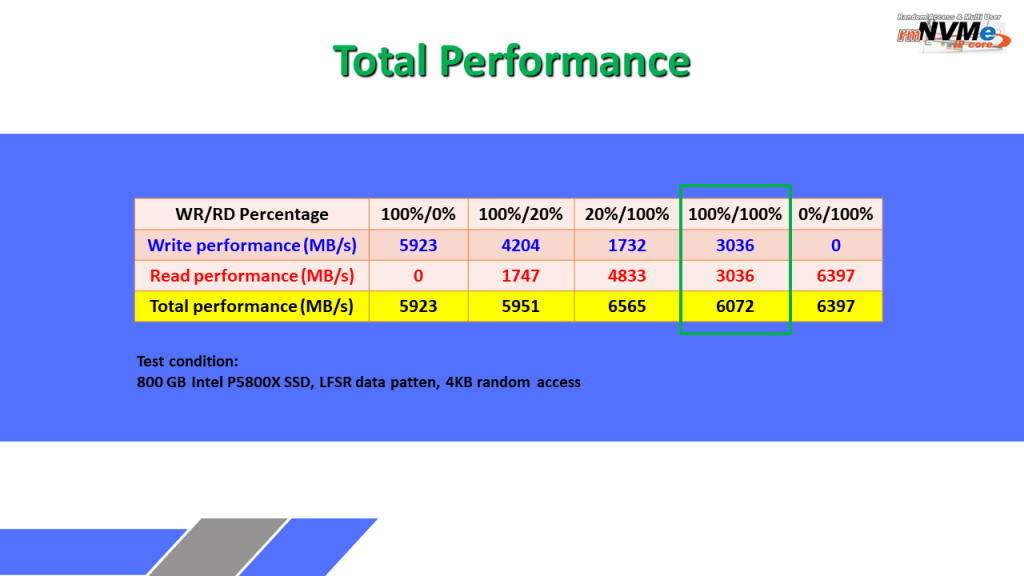

Three conditions with different Write/Read load settings are demonstrated.

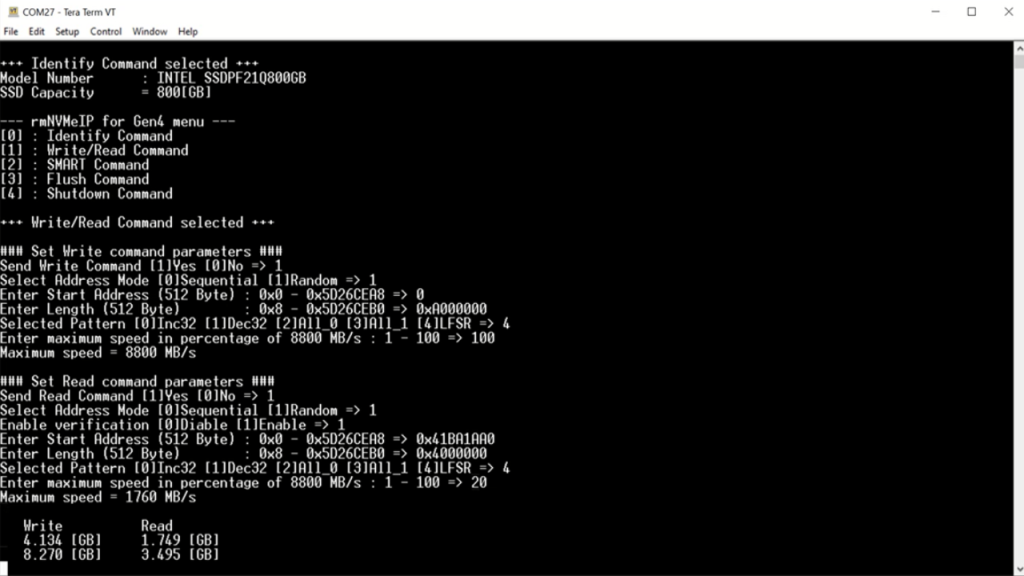

First, the read speed is limited to 20% of the maximum line rate.

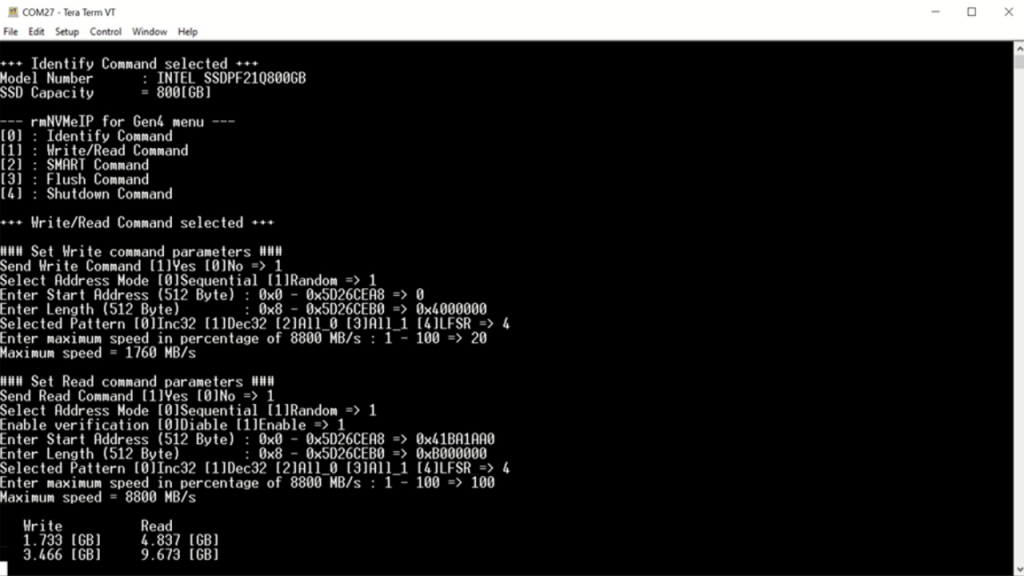

Second, in contrast, the write speed is limited to 20% of the maximum line rate.

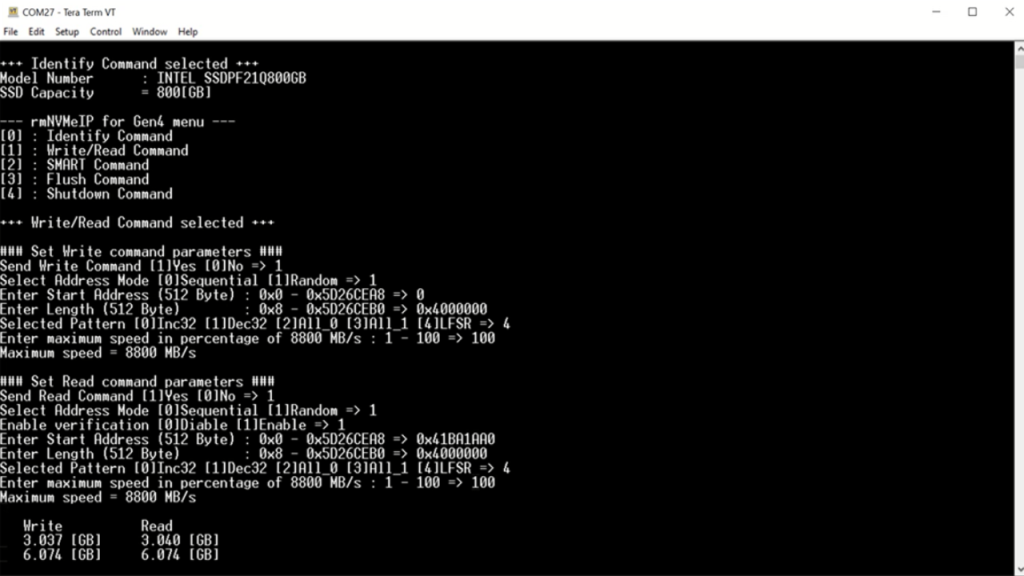

Last, no limit is set on either write or read transfers.

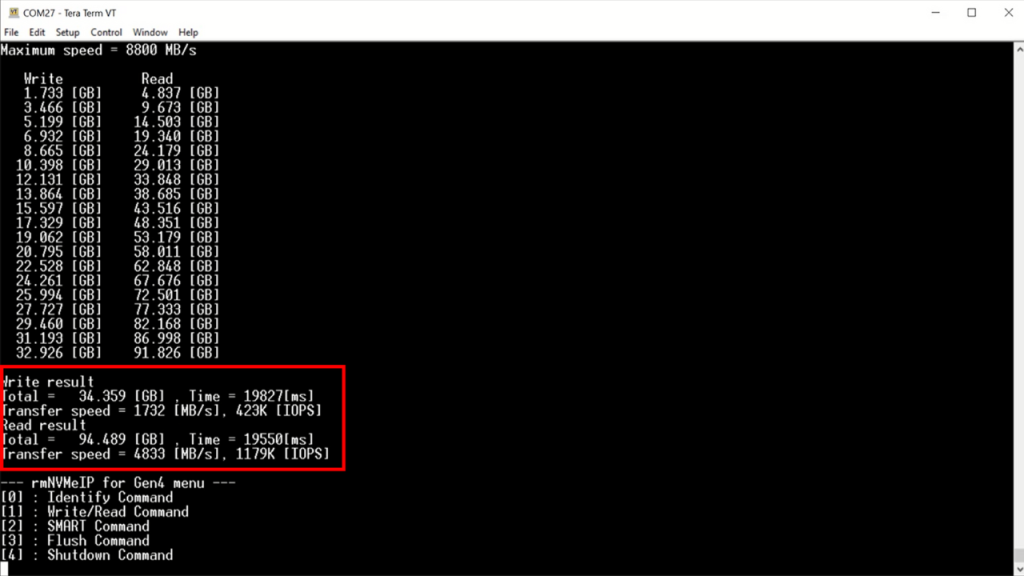

As the comparison graph shows, the total performance, that is, the sum of the write and read speeds, is similar across the three conditions.

Total performance is about 6000 to 6500 Mbytes/sec. The maximum line rate is 8800 Mbytes/sec, so 20% corresponds to 1760 Mbytes/sec.

In the first test, the read speed is limited to 1747 Mbytes/sec, and the write speed takes the remaining SSD bandwidth, 4204 Mbytes/sec.

In the second test, the write speed is limited to 1732 Mbytes/sec, while the read speed reaches 4833 Mbytes/sec.

In the last test, the write and read speeds are both 3036 Mbytes/sec.
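The arithmetic behind this comparison can be checked in a few lines. The dictionary below only restates the measured P5800X numbers from the three tests; the names `TESTS` and `summarize` are illustrative. It confirms that each throttled direction stays under the 1760 Mbytes/sec cap and that the totals fall between roughly 5950 and 6570 Mbytes/sec.

```python
# Measured Intel P5800X speeds (Mbytes/sec) from the three simultaneous tests.
MAX_LINE_RATE_MBPS = 8800
CAP_20_PERCENT = MAX_LINE_RATE_MBPS * 20 / 100  # 20% of the line rate

TESTS = {  # name: (write speed, read speed)
    "read limited to 20%": (4204, 1747),
    "write limited to 20%": (1732, 4833),
    "no limit": (3036, 3036),
}


def summarize(tests: dict) -> dict:
    """Return the total (write + read) throughput for each test."""
    return {name: write + read for name, (write, read) in tests.items()}


if __name__ == "__main__":
    print(f"20% cap: {CAP_20_PERCENT:.0f} Mbytes/sec")
    for name, total in summarize(TESTS).items():
        print(f"{name:22s} total {total} Mbytes/sec")
```

The totals stay within about 10% of each other, which supports the observation that the SSD's combined bandwidth is shared between the two directions rather than multiplied.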

The Intel P5800X shows well-balanced performance for both write and read operations.

When only write commands or only read commands are executed, the read speed (about 6397 Mbytes/sec) exceeds the write speed (about 5900 Mbytes/sec).

However, when write and read commands are issued at the same rate, write and read performance become equal.

As a result, write-intensive systems should reduce the number of read command requests sent to the rmNVMe-IP, while read-intensive systems should minimize the number of write command requests.

It should be noted that some SSDs may exhibit different and unbalanced write-read performance when both commands are run simultaneously.

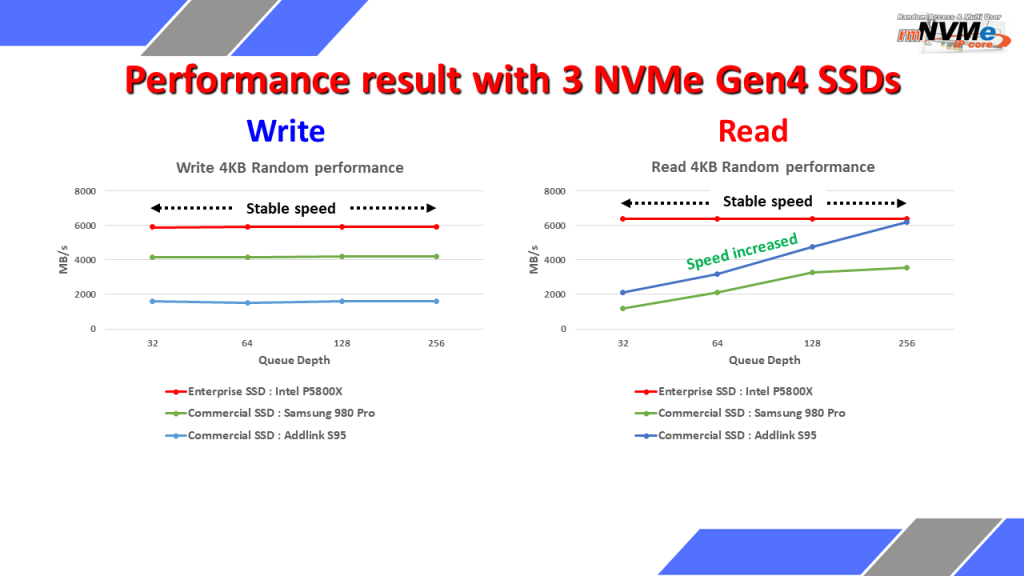

You can use our demo to assess the characteristics of your own SSD. The graph shows the results for three SSDs running 4-Kbyte random write and read tests with rmNVMe-IP.

In the graph, all three SSDs maintain a constant write rate as the Write command queue depth is set to 32, 64, 128, and 256. Two of the SSDs show higher read speeds as the Read command queue depth increases.

The Intel P5800X, however, maintains a constant rate and the highest performance in the 4-Kbyte random-access tests for both write and read operations.

In short, rmNVMe-IP is designed to run as fast as possible at PCIe Gen4 speed, but the measured performance depends mainly on the characteristics of the SSD.

Our demo can help you evaluate which SSD best suits your application.

We hope this third part helps you understand our demo and the performance of the rmNVMe-IP Core with NVMe Gen4 SSDs in real-world applications.

For more technical details, please visit our website:

https://dgway.com/rmNVMe-IP_X_E.html

https://dgway.com/rmNVMe-IP_A_E.html