rmNVMe-IP (Random Access & Multi User NVMe IP) : Application

rmNVMe-IP (Random Access & Multi User NVMe IP) is a very high-performance NVMe host controller that is highly optimized for high-IOPS random access applications. rmNVMe-IP supports multiple user interfaces, so each user can read from and write to a single NVMe SSD at the same time.

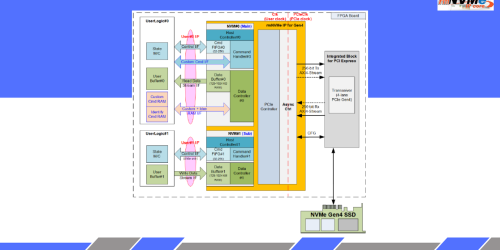

This part presents example applications of rmNVMe-IP Core.

The rmNVMe-IP Core presentation consists of 3 parts, and this is the second.

Please check the first part for a better understanding of the core.

Let’s look at example applications whose performance can be accelerated by rmNVMe-IP core.

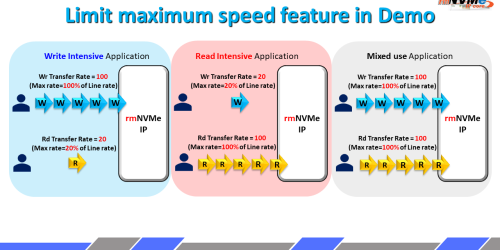

The first example is a write-intensive or read-intensive application.

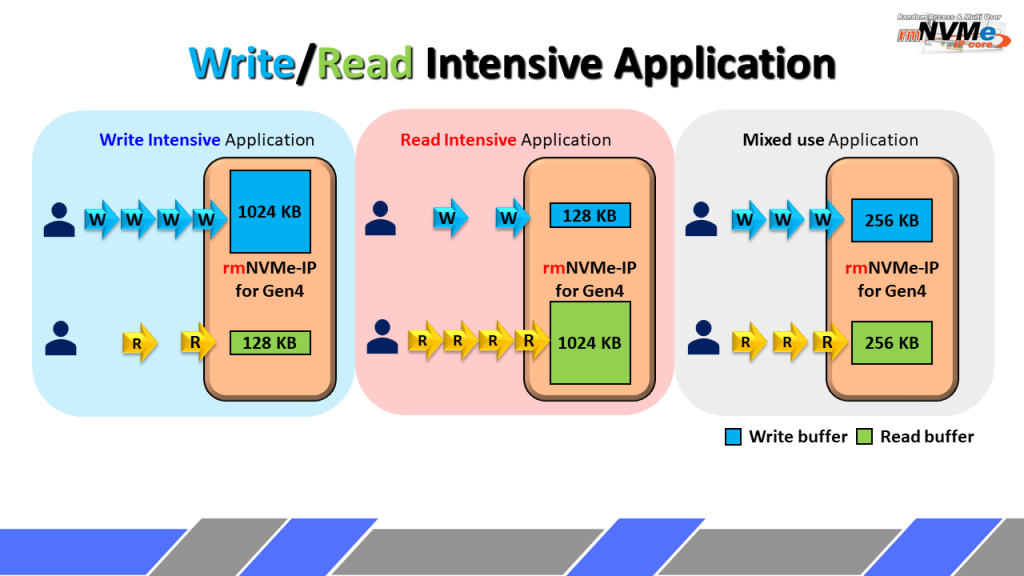

For write-intensive applications, the write buffer size is typically set to a large value while the read buffer size is kept small to optimize resources.

However, for read-intensive applications, the read buffer size is increased to prioritize high read performance.

rmNVMe-IP supports configurable buffer sizes for both write and read to achieve optimum performance in your application.

In the case of mixed-use applications, a moderate size is applied to both the write and read buffers to achieve a balanced read and write performance.

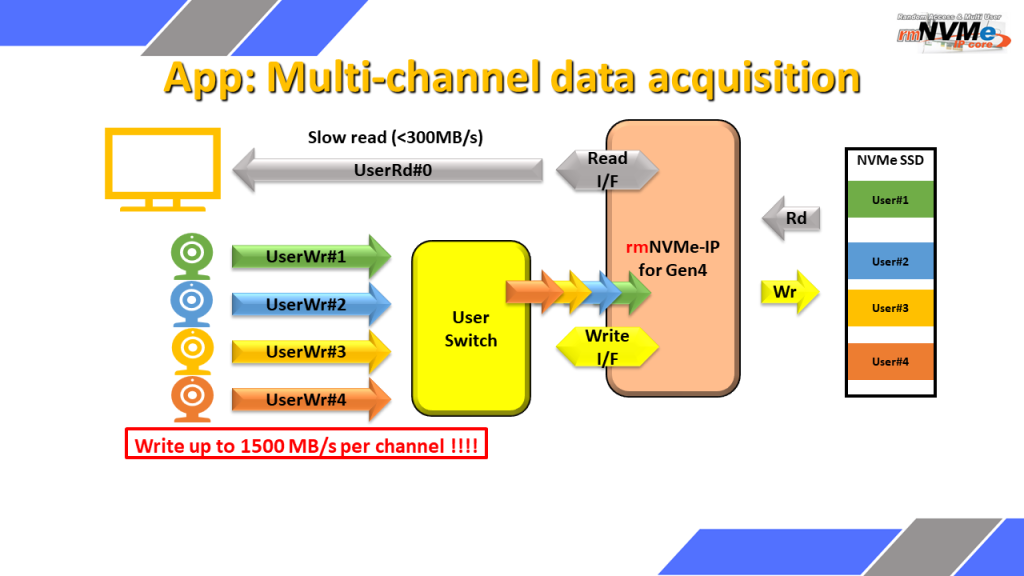

The second example is multi-channel data acquisition.

The key feature of rmNVMe-IP is its ability to execute multiple read and write commands simultaneously.

rmNVMe-IP can be adapted to systems that require multiple users to access a single SSD simultaneously at the maximum possible performance without CPU intervention.

One example of such a system is a multi-channel data acquisition application. This presentation shows an example scenario with 4 high-speed write users and 1 slow-speed read user.

The system integrates multiple data sources through switch logic. This switch logic divides the data received from the different sources into 4 KB blocks and sends multiple 4 KB write requests to rmNVMe-IP. The read command is periodically requested at a slower speed to maintain system monitoring.
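As a rough illustration of the switch logic described above, the following Python sketch models it in software. It is not the hardware implementation or the rmNVMe-IP interface: the function names, the request fields, the round-robin ordering, the zero-padding of the last block, and the sequential address assignment are all assumptions made for the example.

```python
from collections import deque

BLOCK_SIZE = 4096  # 4 KB blocks, as described in the text


def split_into_blocks(data, block_size=BLOCK_SIZE):
    """Split one source's data into fixed-size blocks (last block zero-padded)."""
    return [
        data[offset:offset + block_size].ljust(block_size, b"\x00")
        for offset in range(0, len(data), block_size)
    ]


def build_write_requests(sources, first_block_addr=0):
    """Interleave the 4 KB blocks of all sources round-robin.

    `sources` maps a hypothetical user/channel number to its raw bytes.
    Addresses are assigned sequentially in 4 KB units (an assumption).
    """
    queues = {user: deque(split_into_blocks(data)) for user, data in sources.items()}
    requests = []
    addr = first_block_addr
    while any(queues.values()):
        for user, queue in queues.items():
            if queue:
                requests.append({"user": user, "addr": addr, "data": queue.popleft()})
                addr += 1
    return requests


if __name__ == "__main__":
    # Four hypothetical data sources of different lengths.
    sources = {0: bytes(8192), 1: bytes(4096), 2: bytes(100), 3: bytes(12288)}
    for req in build_write_requests(sources):
        print(req["user"], req["addr"], len(req["data"]))
```

Each entry in the returned list stands for one 4 KB write request that the switch logic would issue on behalf of a given user.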

However, the read speed is deliberately limited to preserve high performance in the write interface.

As a result, a total write speed of 6000 Mbytes/sec, or 1500 Mbytes/sec per channel for four users, can be achieved when the read speed is less than 300 Mbytes/sec.
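The per-channel figure follows from dividing the total write speed evenly across the four users. The short Python sketch below reproduces that arithmetic and also estimates how many 4 KB write requests per second this corresponds to. The request-rate estimate rests on two assumptions that the text does not state: 1 Mbyte is taken as 1,000,000 bytes, and a 4 KB block is taken as 4096 bytes.

```python
TOTAL_WRITE_MBYTES_PER_SEC = 6000  # total write speed quoted in the text
WRITE_USERS = 4                    # number of write users in the scenario
BLOCK_BYTES = 4096                 # assumption: 4 KB = 4096 bytes
BYTES_PER_MBYTE = 1_000_000        # assumption: decimal megabytes


def per_channel_speed(total_mbytes_per_sec, users):
    """Write speed per user when the total is shared evenly."""
    return total_mbytes_per_sec / users


def blocks_per_second(mbytes_per_sec, block_bytes=BLOCK_BYTES,
                      bytes_per_mbyte=BYTES_PER_MBYTE):
    """Number of fixed-size write requests per second at a given speed."""
    return mbytes_per_sec * bytes_per_mbyte / block_bytes


if __name__ == "__main__":
    channel = per_channel_speed(TOTAL_WRITE_MBYTES_PER_SEC, WRITE_USERS)
    print("per channel (Mbytes/sec):", channel)
    print("4 KB requests/sec, total:", blocks_per_second(TOTAL_WRITE_MBYTES_PER_SEC))
    print("4 KB requests/sec, per channel:", blocks_per_second(channel))
```

Under these assumptions, the total works out to roughly 1.46 million 4 KB write requests per second.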

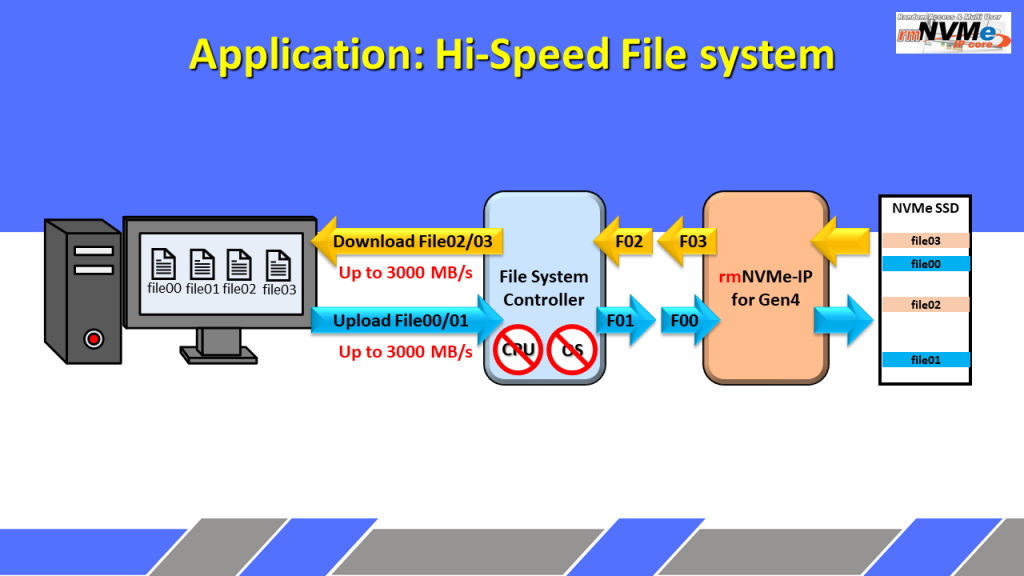

The third example application is a high-speed file system.

Typically, the file system device driver in an operating system must support two key features to ensure efficient data storage and retrieval.

Firstly, the file system must support random access. This allows for data to be split into small blocks and stored in different areas of the storage, maximizing the utilization of available storage space.

Secondly, the file system must support concurrent write and read commands. The file system consists of a file table and user data. While the user data is being transferred, the file table must be monitored and updated. Most importantly, rmNVMe-IP core fully handles the NVMe protocol internally without a CPU or OS.

As a result, the file system can be accessed and updated with high efficiency by supporting concurrent write and read operations.
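To make the relationship between the file table and the user data more concrete, here is a minimal Python sketch of a toy block-based file system. It is an illustration only: the class, its methods, and the lowest-free-address allocation policy are hypothetical and do not describe any particular file system or the rmNVMe-IP itself. It shows how random access lets a file occupy non-contiguous blocks, and how every data write is paired with a file table update. Concurrency is not modeled.

```python
class ToyBlockFileSystem:
    """Toy model: a file table plus fixed-size data blocks."""

    def __init__(self, total_blocks, block_size=4096):
        self.block_size = block_size
        self.free_blocks = list(range(total_blocks))  # sorted free block addresses
        self.storage = {}      # block address -> block contents (user data)
        self.file_table = {}   # file name -> (block addresses, length in bytes)

    def write_file(self, name, data):
        if name in self.file_table:
            self.delete_file(name)  # overwrite: release the old blocks first
        needed = -(-len(data) // self.block_size)  # ceiling division
        if needed > len(self.free_blocks):
            raise OSError("not enough free blocks")
        # Take the lowest free addresses; they need not be contiguous.
        blocks = [self.free_blocks.pop(0) for _ in range(needed)]
        for i, addr in enumerate(blocks):
            start = i * self.block_size
            self.storage[addr] = data[start:start + self.block_size]
        self.file_table[name] = (blocks, len(data))  # file table update

    def read_file(self, name):
        blocks, length = self.file_table[name]
        return b"".join(self.storage[addr] for addr in blocks)[:length]

    def delete_file(self, name):
        blocks, _ = self.file_table.pop(name)
        for addr in blocks:
            del self.storage[addr]
        self.free_blocks.extend(blocks)
        self.free_blocks.sort()


if __name__ == "__main__":
    fs = ToyBlockFileSystem(total_blocks=8, block_size=4)
    fs.write_file("a", b"AAAAAAAA")
    fs.write_file("b", b"BBBB")
    fs.delete_file("a")
    fs.write_file("c", b"CCCCCCCCCCCC")
    print(fs.file_table["c"])  # file "c" is stored in scattered blocks
```

In the usage example, file "c" reuses the blocks freed by "a" and then skips over the block still held by "b", which is the kind of scattered placement that depends on fast random access.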

The rmNVMe-IP is designed to support simultaneous write and read commands at high speed. With up to 3000 Mbytes/sec for mixed write-read commands, a high-performance file system built on rmNVMe-IP provides a reliable and efficient solution for data storage in both embedded systems and high-performance computing systems.

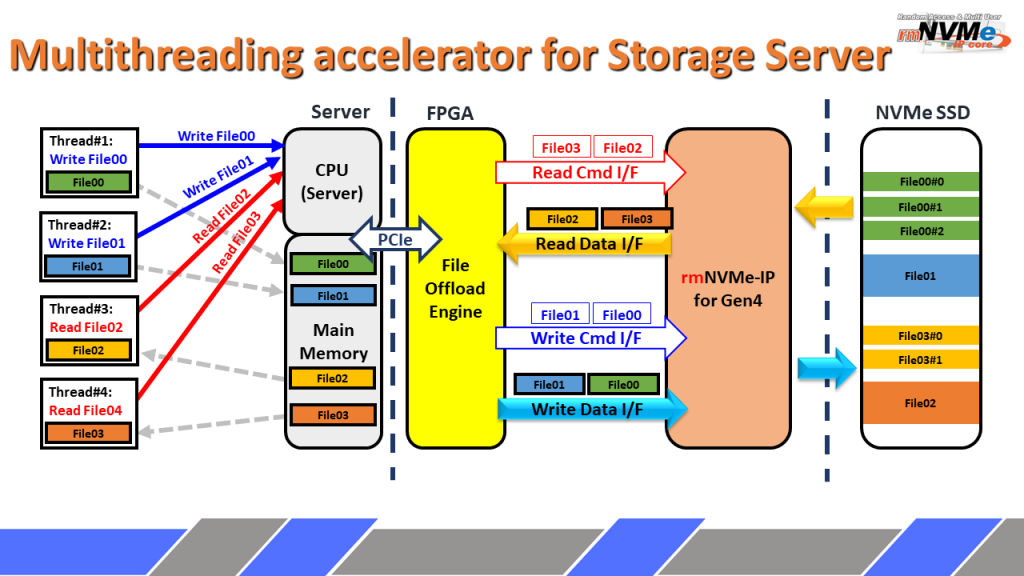

The last example application in this video is multithreading for a database server.

The FPGA can be utilized as the offload engine for the storage server to access physical disks like NVMe SSD storage. In large-scale systems, multiple threads frequently access the storage, leading to a high volume of read and write requests being sent to the CPU.

The write data, which is usually large, is stored in the main memory. The FPGA platform’s PCIe engine provides an interface that allows the offload engine to receive command requests from the CPU and directly access the data in the main memory with high performance. The File Offload Engine sends read and write requests to the rmNVMe-IP via the Read Command Interface and Write Command Interface, respectively.

All write data is transferred via the Write Data Interface and stored in the NVMe SSD. The read data from the NVMe SSD is returned to the File Offload Engine via the Read Data Interface and finally, returned to the thread through the main memory.

By implementing this kind of hardware system, storage server access can be accelerated with high performance while requiring fewer CPU resources.

We hope this second part helps you see the opportunity and potential of rmNVMe-IP Core in real-world applications.

For more technical details, please visit our website.

https://dgway.com/rmNVMe-IP_X_E.html

https://dgway.com/rmNVMe-IP_A_E.html

Youtube: https://youtu.be/RpNV71vpVjE